The Governance Hypercube

Achieving Resilience and Autonomy in Front-End Solutions for Hyperscale Startups

Chapter 1: The Lie We All Believed: The Micro Frontend Complexity Trap

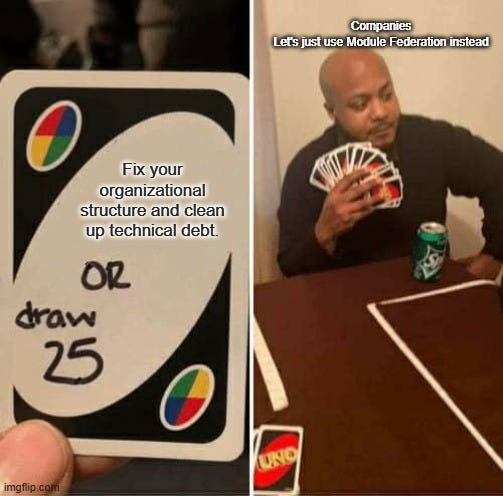

Let’s be honest. Every architect, every engineering leader, and every developer has felt the same dread: the friction of a growing codebase. The fear that our once-agile application is slowing down, held hostage by organizational politics and tangled dependencies.

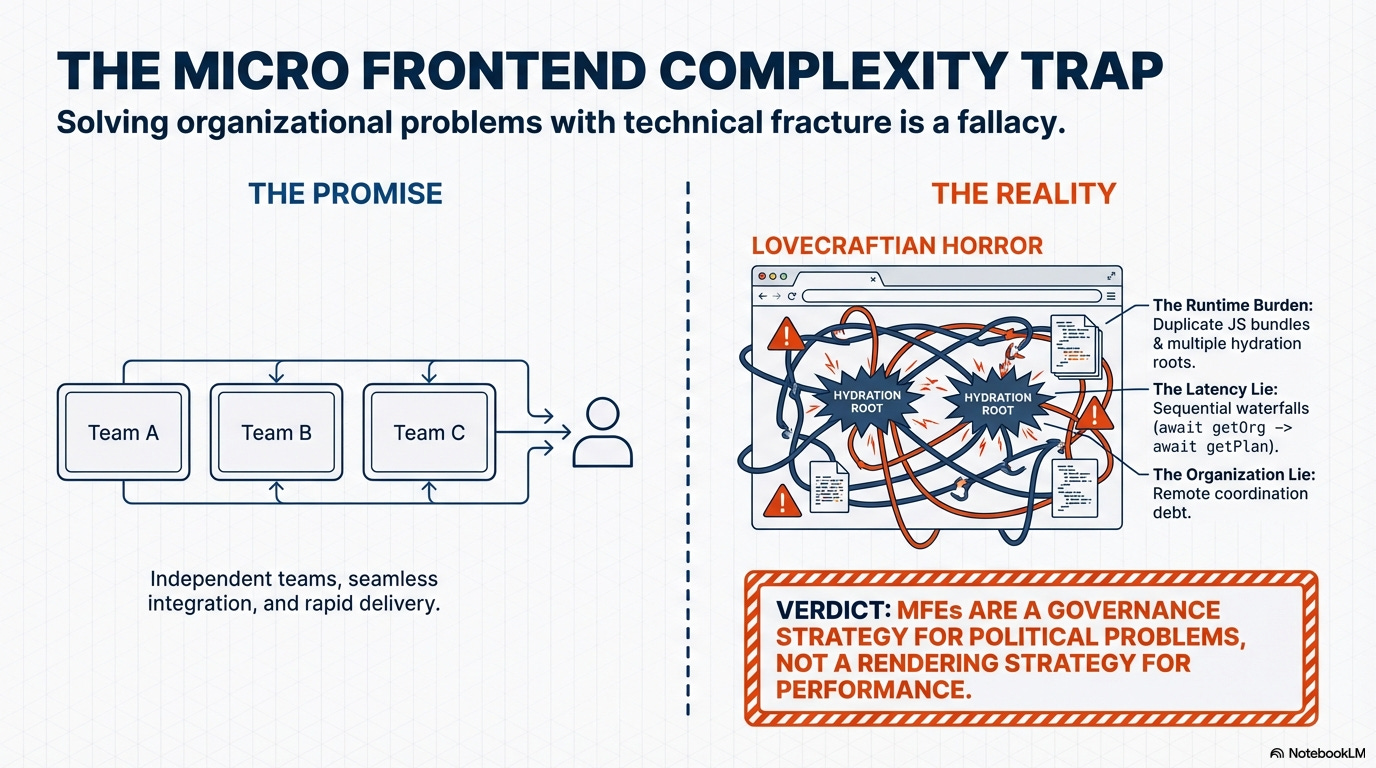

So, we turn to the promised salvation: Micro Frontends (MFEs). The pitch is compelling: “Separate your codebases, empower autonomous teams, and achieve infinite velocity!”

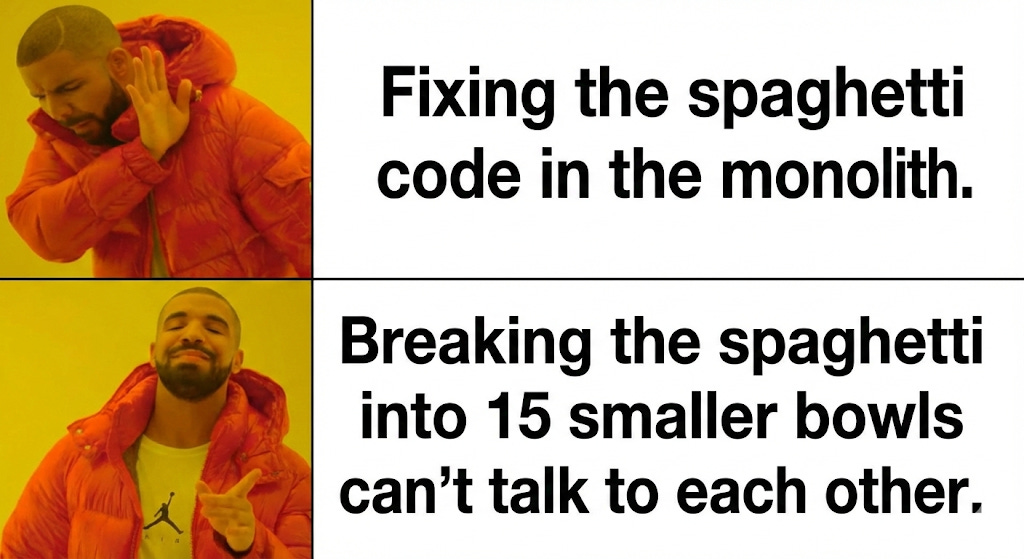

In practice, we trade one problem (a monolithic deployment pipeline) for a host of worse ones:

The Runtime Composition Burden: Pages load slower than a dial-up modem as the browser juggles five different JavaScript bundles, multiple hydration roots, and redundant libraries.

The Debugging Nightmare: A bug in the UI is no longer a stack trace; it’s an opaque chain of service-to-service communication errors held together by an inscrutable `webpack.config.js`, a Lovecraftian horror few dare to touch.

The Hidden Tax: You haven’t decoupled anything; you’ve simply moved the integration pain from the compile step to the end-user’s browser, forcing them to suffer through redundant downloads and agonising hydration.

The architecture diagram looks great on a whiteboard, but the application is held together by organizational hope. The truth is, Micro Frontends are primarily a governance strategy for political problems, not a rendering strategy for performance.

If you are not an organization of 1,000 engineers, you are not solving your problems; you are just giving your slow internal processes a $100 million infrastructure bill. We must stop trying to solve organizational problems with technical fracture.

1. The Problems Micro Frontends Actually Solve

These are legitimate use cases, but they are driven by extreme, non-technical constraints:

Multi-Org / Multi-Vendor UI: You need legal and ownership boundaries where separate companies or large internal organizations contribute to one platform (e.g., a marketplace where different vendors own their components).

Long-Lived Legacy Migrations (The Strangler Pattern): You have a massive legacy application (AngularJS, Backbone, etc.) and must gradually plug in new React/Next pieces without killing the old beast overnight.

Regulatory / Blast Radius Isolation: In highly regulated domains (banking, healthcare), some areas need stricter SLOs or audit trails. Micro frontends create strong deployment boundaries: a bug in the non-critical marketing UI cannot affect the secure payments flow.

Extreme-Scale Autonomy: You have dozens of teams and the single deployment pipeline has genuinely become an unacceptable bottleneck.

Outside these constraints? You’re trading technical simplicity for organizational complexity you likely don’t need yet.

2. The Technical Lie: What It Actually Costs

The promise is better performance and simpler codebases. The reality is usually the exact opposite.

2.1 The Hidden Cost: Performance and Duplication

Micro frontends absolutely do not fix performance. They often cause massive degradation because they introduce:

Duplicate JavaScript: Shipping React, common utility libraries, and potentially the entire design system multiple times across different bundles, unless shared dependencies are constantly monitored.

Multiple Hydration Roots: The browser must instantiate and hydrate multiple independent applications on a single page, leading to high CPU usage and slow Time-to-Interactive (TTI).

Extra Network Hops: The shell must fetch configuration and bundles from several remote endpoints during the critical loading path.

2.2 The Latency Lie: The Waterfall is Always On Time

Before performance is even considered, the simplest and most damaging failure mode of complex architectures is poor data fetching. Pages should be a read-only snapshot, and data fetching should be flat.

Cascading Hell: Every sequential call adds latency straight to your Time-to-First-Byte (TTFB).

```ts
const user = await getUser();
const org = await getOrg(user.orgId); // Blocks TTFB
const plan = await getPlan(org.planId); // Blocks TTFB again
const features = await getFeatures(plan); // The user is waiting...
```

Parallel Fetching: Fetch everything you can at once, minimizing total blocking time and leveraging the server’s speed.

```ts
const [user, products, config] = await Promise.all([
  getUser(),
  getProducts(),
  getConfig(),
]);
```

We use formal agreements to catch these slow, sequential data relationships early in the feature planning process. This forces teams to fix complicated data logic and weird system dependencies upfront, ensuring the page loads everything in parallel, eliminating the unnecessary waiting game for the user.
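Not every call can be parallelized: `getOrg` genuinely needs the user, and `getPlan` needs the org. The following is a minimal sketch of the flat shape. It assumes hypothetical stub fetchers with simulated latency (`getUser`, `getOrg`, `getPlan`, `getProducts`, `getConfig` and the `delay` helper are illustrative, not a real API). The dependent chain is kept as a single unit of work, and every independent request starts alongside it:

```ts
// Hypothetical stubs with simulated latency; in a real page these would be contract-generated clients.
const delay = <T>(ms: number, value: T): Promise<T> =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

interface User { id: string; orgId: string }
interface Org { id: string; planId: string }
interface Plan { id: string; tier: string }

const getUser = () => delay<User>(50, { id: "u1", orgId: "o1" });
const getOrg = (orgId: string) => delay<Org>(50, { id: orgId, planId: "p1" });
const getPlan = (planId: string) => delay<Plan>(50, { id: planId, tier: "pro" });
const getProducts = () => delay<string[]>(120, ["sku-1", "sku-2"]);
const getConfig = () => delay<Record<string, boolean>>(80, { newCheckout: true });

// The genuinely dependent chain stays sequential, but is wrapped as one unit of work.
async function getUserPlan(): Promise<{ user: User; plan: Plan }> {
  const user = await getUser();
  const org = await getOrg(user.orgId);
  const plan = await getPlan(org.planId);
  return { user, plan };
}

// Independent requests start at the same moment as the chain, so total latency is
// roughly max(chain, products, config) instead of the sum of all five calls.
async function loadDashboard() {
  const [{ user, plan }, products, config] = await Promise.all([
    getUserPlan(),
    getProducts(),
    getConfig(),
  ]);
  return { user, plan, products, config };
}
```

With these simulated latencies, the chain takes about 150 ms and sets the page's waiting time. Awaiting all five calls one after another would take about 350 ms.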

Principle: If your architecture diagram is a tree but your data fetching is a linked list of `await`s, your users can feel the latency. Micro frontends distribute this latency; they do not fix it.

2.3 The Organisational Lies: The Illusion of Autonomy

The most common reason companies adopt Micro Frontends has nothing to do with the browser. MFEs feel right because of Conway’s Law:

“Organizations which design systems... are constrained to produce designs which are copies of the communication structures of these organizations.”

When Team A and Team B can’t agree on a shared UI pattern, the executive response is often: “Separate the codebases so they can be truly autonomous!”

Separating the technical stack into MFEs is a technical surgery that doesn’t fix the organizational illness. By splitting the repository, you have only fractured the system. You haven’t fixed the communication problem; you’ve just made it more expensive and harder to debug.

Boundary Drift: Without formal Contracts (like the UI Contract or Data Contract), teams still step on each other’s toes. Team A changes a global utility, and Team B’s MFE breaks two weeks later. The boundary is now vague, distributed, and enforced only by manual coordination, not by code.

Context Fragmentation: A new developer is handed a map of five different repositories and told, “Good luck, you’re the glue now.” They spend weeks tracing a single user flow across multiple APIs, repositories, and state management systems.

The Shared Illusion: The teams think they are independent, but they still depend on a shared release cadence, a shared component library, and shared performance targets. The communication debt is now just hidden behind remote coordination and complex deployment orchestration.

Principle: You cannot achieve organizational autonomy by fracturing the technical system. True autonomy requires rigid, compile-time contracts that define the non-negotiable boundaries of communication, allowing teams to own their work within a cohesive technical framework. If MFEs are adopted for the wrong reasons, they solve the symptom (slow deployment) by creating a worse disease (distributed complexity).

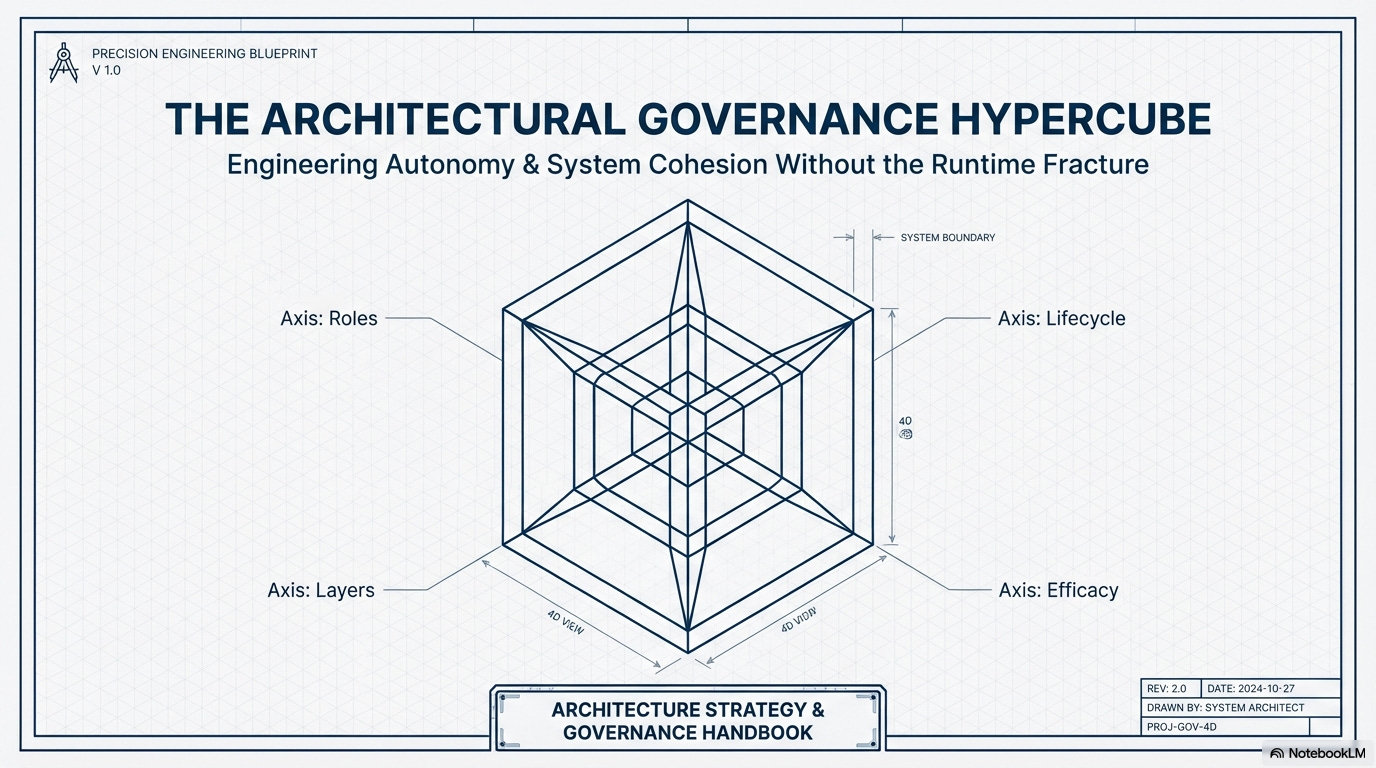

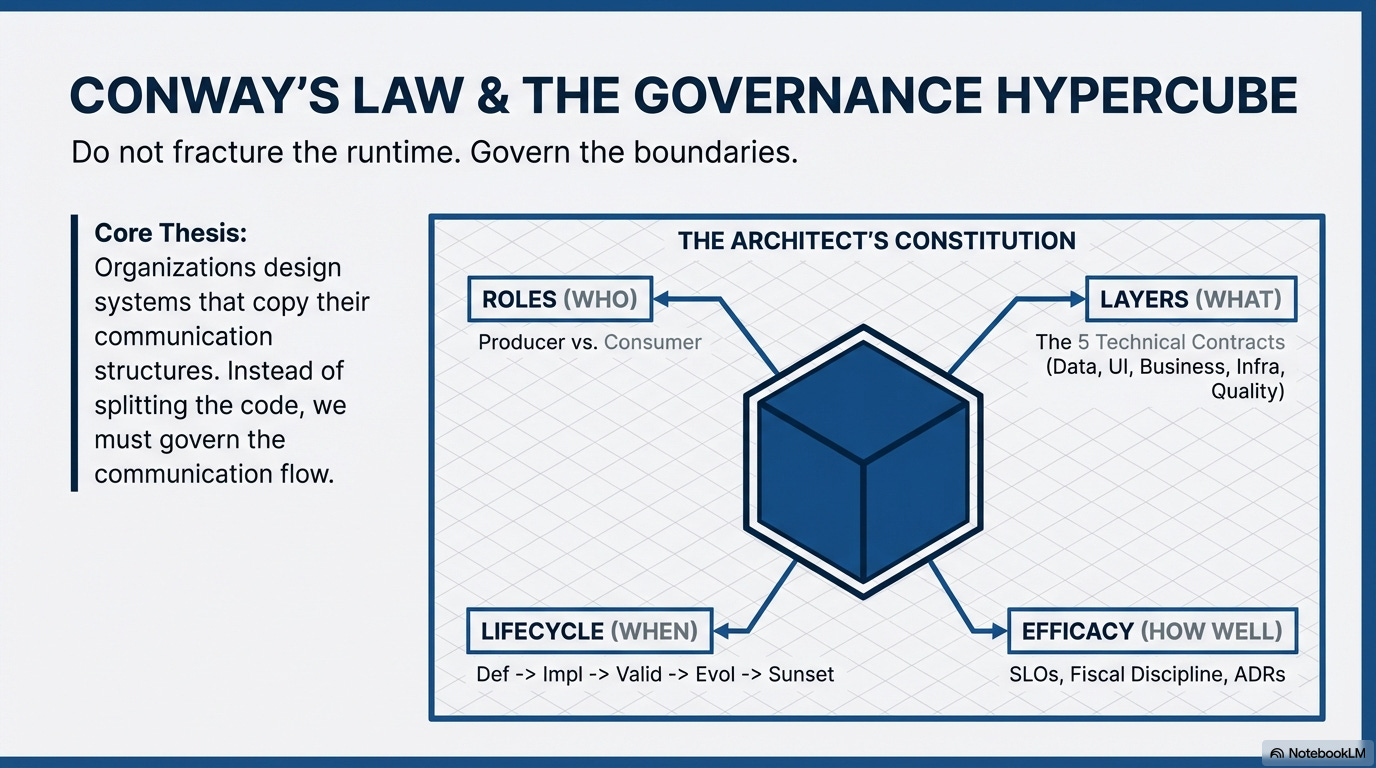

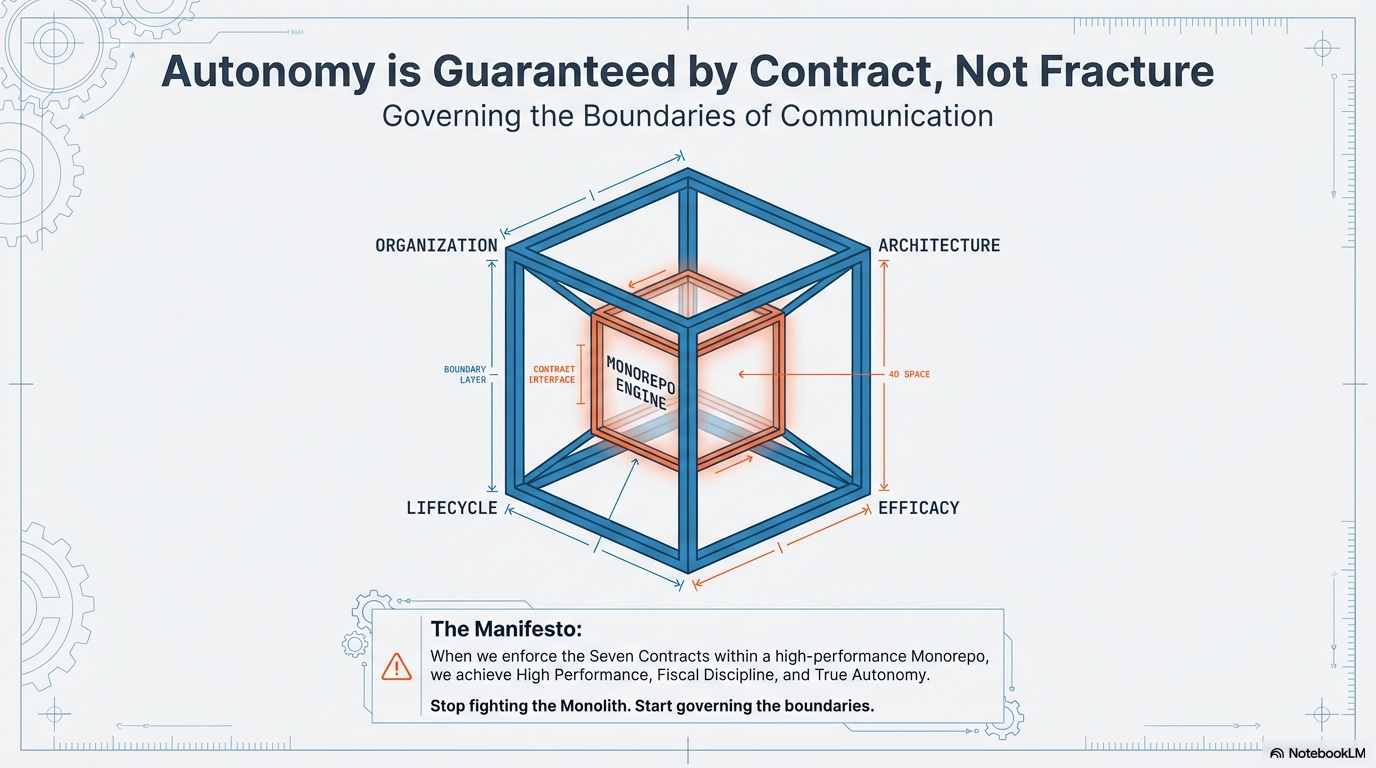

Chapter 2. Conway’s Law and the Architectural Governance Hypercube

As established, Micro Frontends attempt to solve the problems of Conway’s Law by fracturing the technical system when organizational communication fails. This only trades one type of complexity for another.

Instead of fracturing the runtime, we must govern the boundaries of communication itself using compile-time contracts. This is the Architectural Governance Hypercube.

We treat the Hypercube as the Architect’s Constitution. It manages the communication flow (Conway’s Law) across four essential, interlocking axes, providing the fundamental laws that guarantee the autonomy of our teams without fracturing the runtime.

Axis Governance Framework

Organizational Roles (Who)

Defines clear ownership and the separation of concerns.

Distinguishes between Producers (Owners/Maintainers) and Consumers (Feature Developers).

Architectural Layer (What)

Establishes technical boundaries across the stack.

Covers Data, UI, Business, and Infrastructure Contracts.

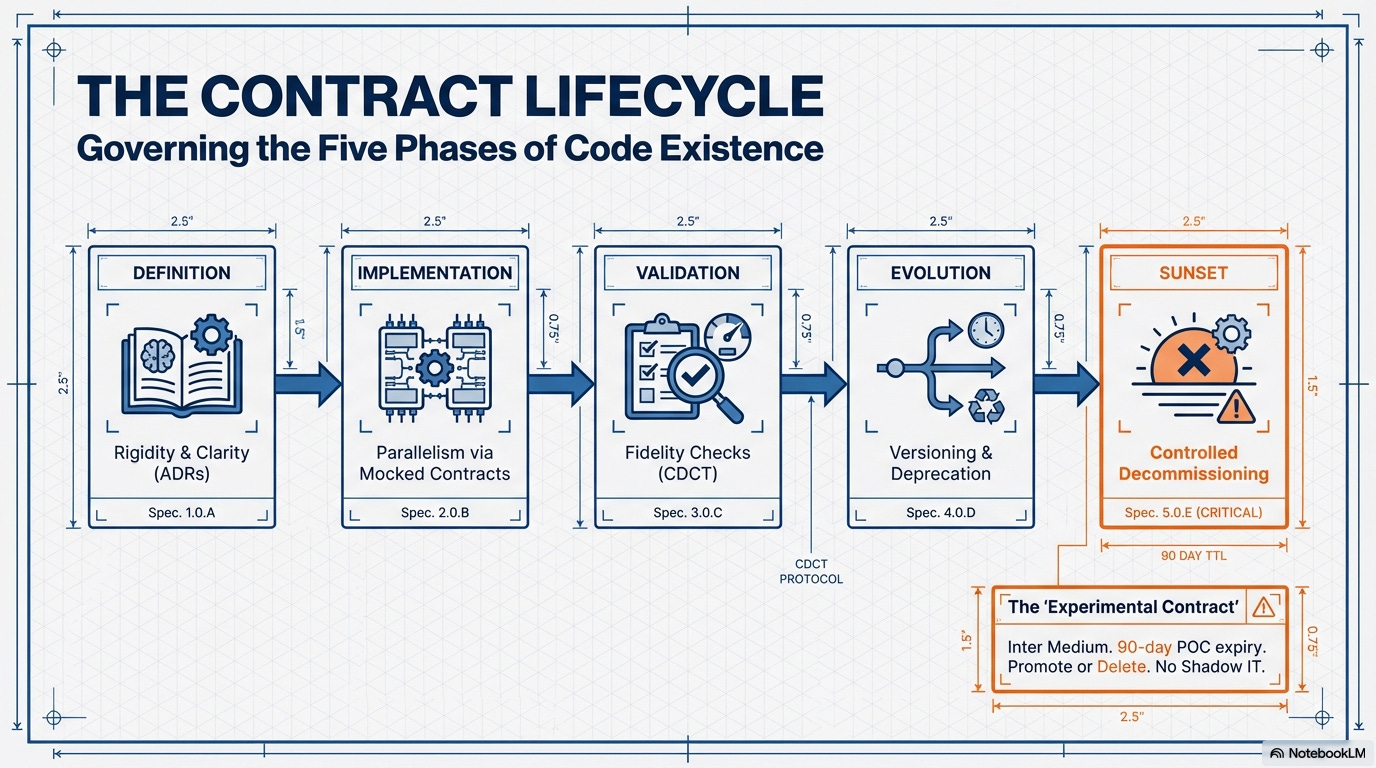

Contract Lifecycle (When/How)

Outlines the specific phases of governance:

Definition

Implementation

Validation

Evolution

Sunset

Runtime Efficacy (How Well)

Defines operational and cultural standards.

Mandates Service Level Objectives (SLOs).

Enforces Fiscal Discipline through Cost-of-Ownership tracking.

Maintains the Knowledge Contract by preserving architectural rationale.

By operating within this hypercube, we achieve organizational autonomy through compile-time tooling and strict contract enforcement inside a single repository (the Monorepo) that ships a single, high-performance runtime.

1. Autonomy by Contract: The Seven Contracts—Technical and Operational Layers

The Hypercube’s structure is realised through seven essential contracts, clearly divided into the five Technical Layers (Axis 2) and the two primary Operational Layers (Axis 4).

The Five Technical Layers (What owned by Who)

These layers define the concrete, verifiable boundaries between distinct parts of the application and the organization.

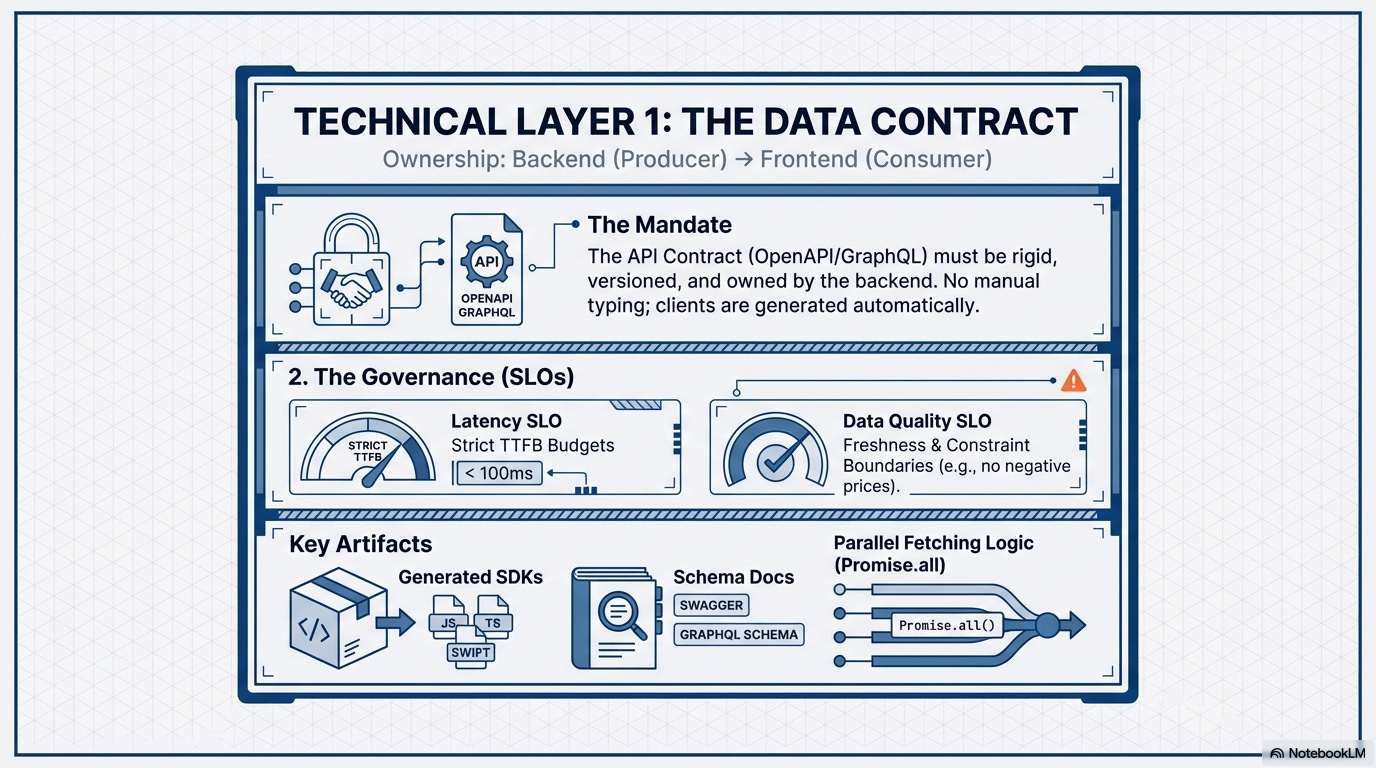

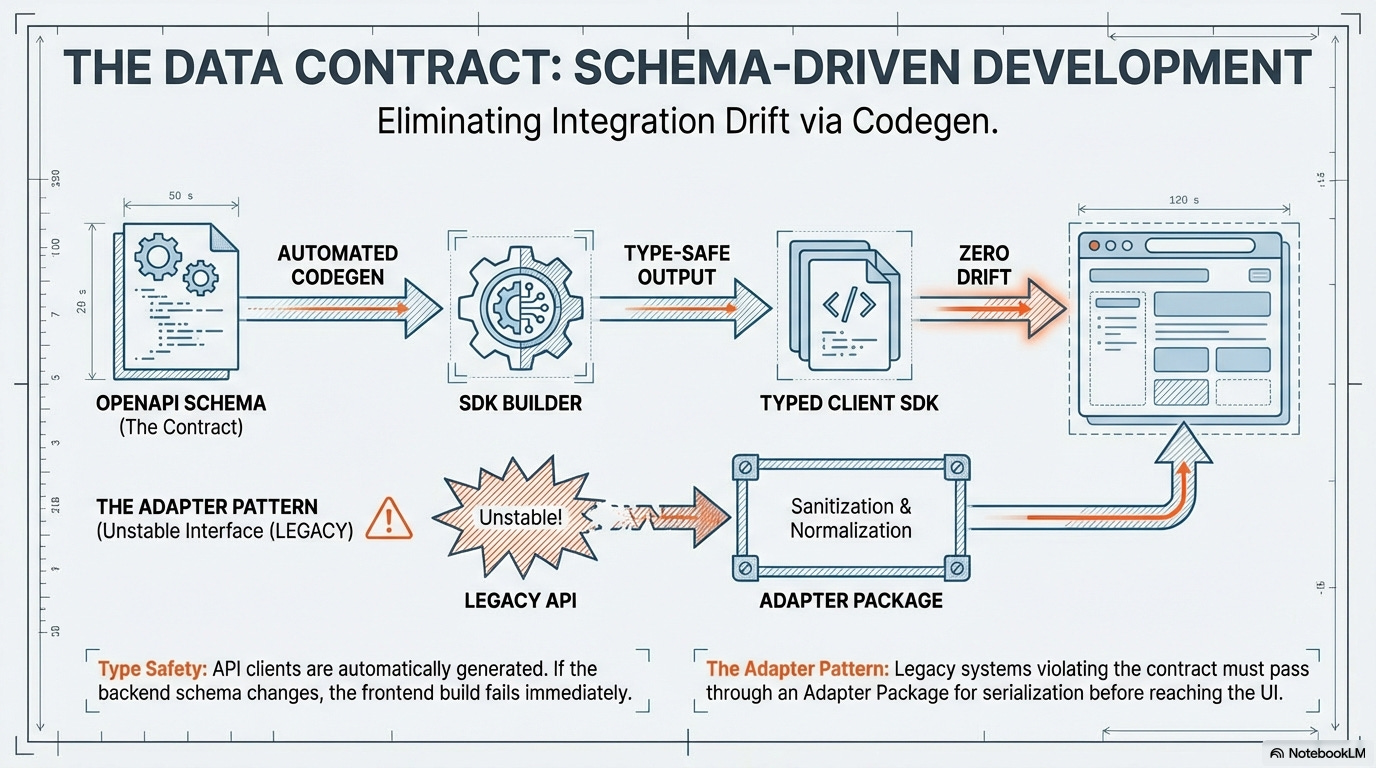

1.1. The Data Contract: Backend ⇒ Frontend (Technical Layer)

The Mandate:

The API Contract (e.g., OpenAPI or GraphQL Schema) must be rigid, versioned, and owned by the backend team. This contract allows the frontend team to mock, type-check, and parallelize data fetches on the server.
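Here is a small sketch of what "mock and type-check" can look like in TypeScript. It assumes a hypothetical `ProductDto` type that a codegen step would generate from the schema. Page code depends only on a `ProductApi` interface, so a mock and the real generated client are interchangeable.

```ts
// Hypothetical shape of a type generated from the backend-owned schema.
interface ProductDto {
  id: string;
  name: string;
  priceCents: number;
}

// The contract the frontend codes against; real and mock clients both implement it.
interface ProductApi {
  getProduct(id: string): Promise<ProductDto>;
}

// A mock satisfying the same contract, so UI work can start before the endpoint ships.
const mockProductApi: ProductApi = {
  async getProduct(id) {
    return { id, name: "Mock product", priceCents: 1999 };
  },
};

// Page-level code depends only on the contract, never on a concrete client.
async function loadProductTitle(api: ProductApi, id: string): Promise<string> {
  const product = await api.getProduct(id);
  return `${product.name} (${(product.priceCents / 100).toFixed(2)})`;
}
```

Because `loadProductTitle` never names a concrete client, swapping the mock for the generated SDK is a one-line change at the injection point.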

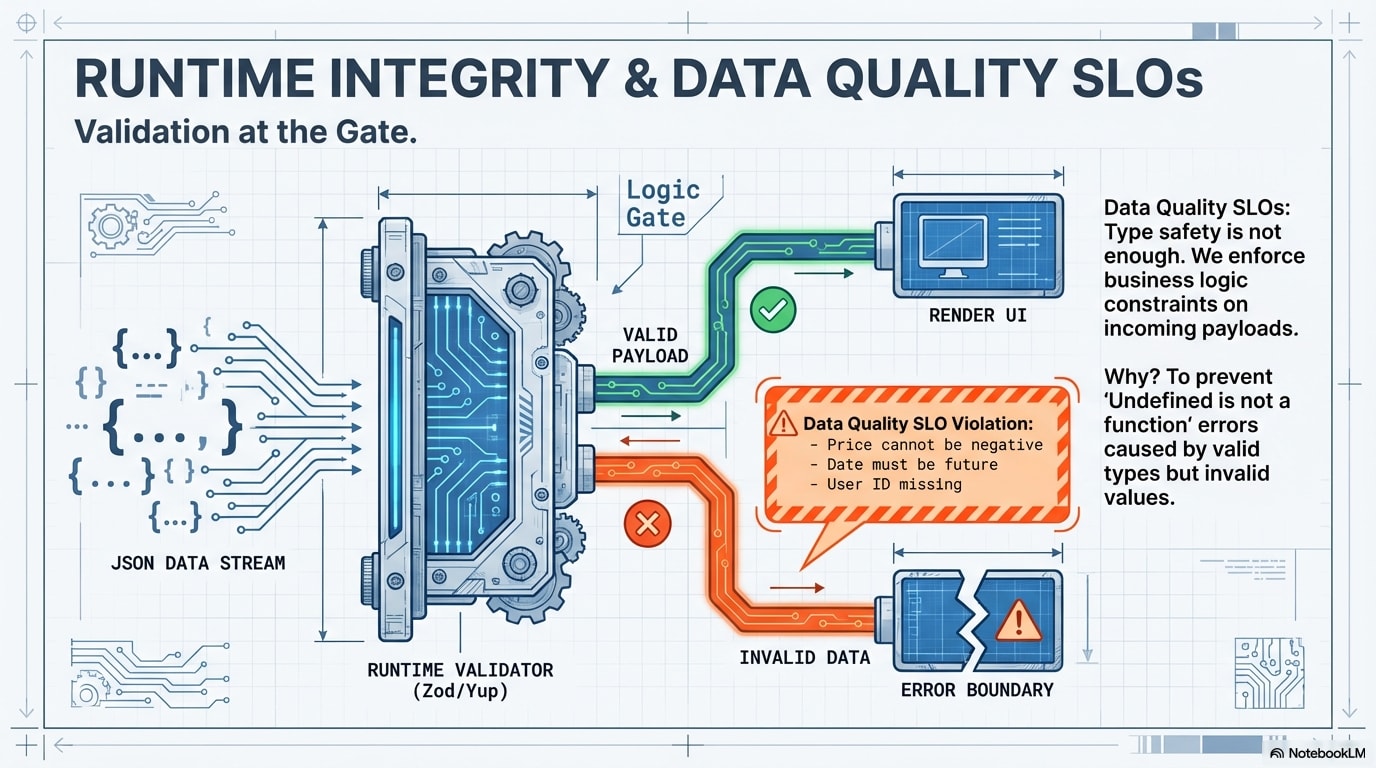

Governed by Runtime Efficacy:

This contract is now coupled with a Latency SLO and Data Quality SLOs, ensuring the final data fetching waterfall remains performant in production and the consumed data adheres to acceptable freshness and constraint boundaries.

Key Artifacts:

OpenAPI/GraphQL/AsyncAPI Schema Document, Contract-Generated Client SDKs, Latency Service Level Objective (SLO), Data Quality SLOs.

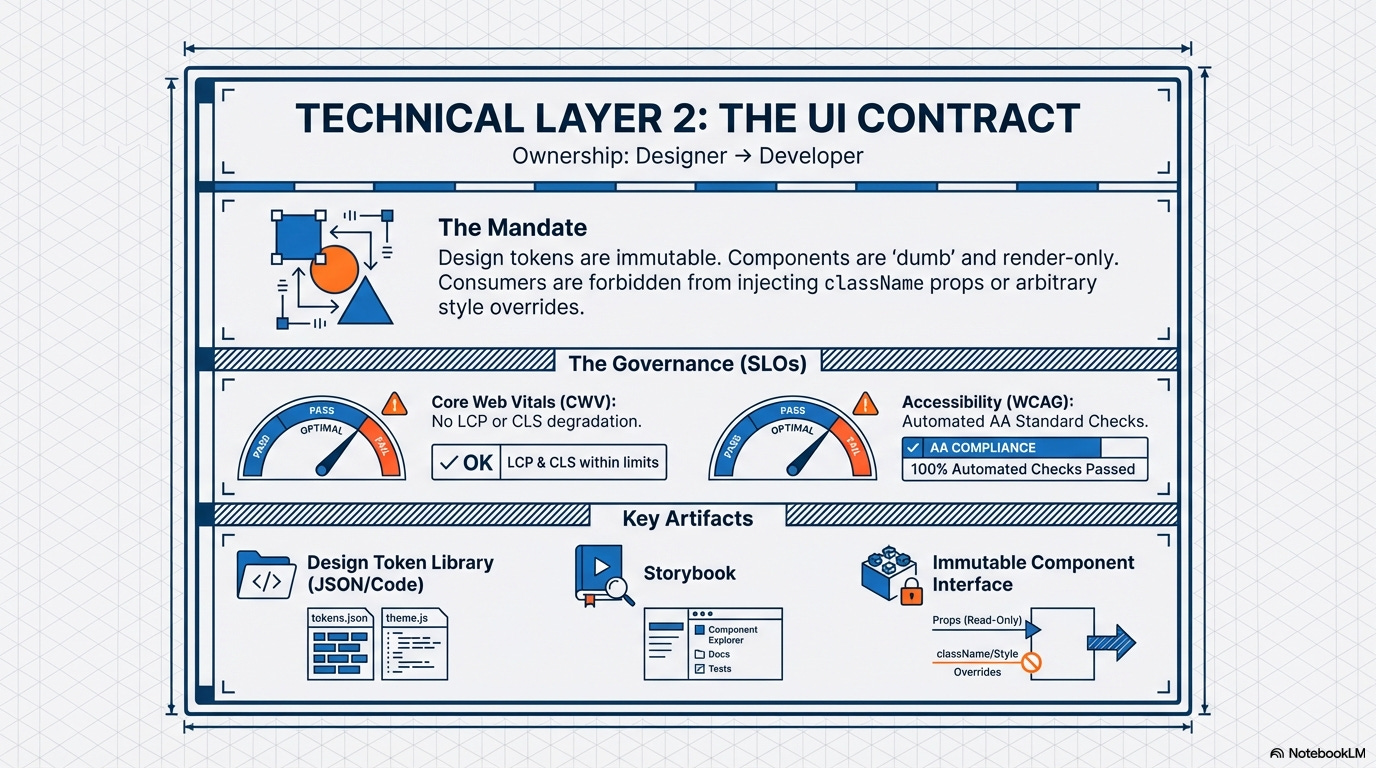

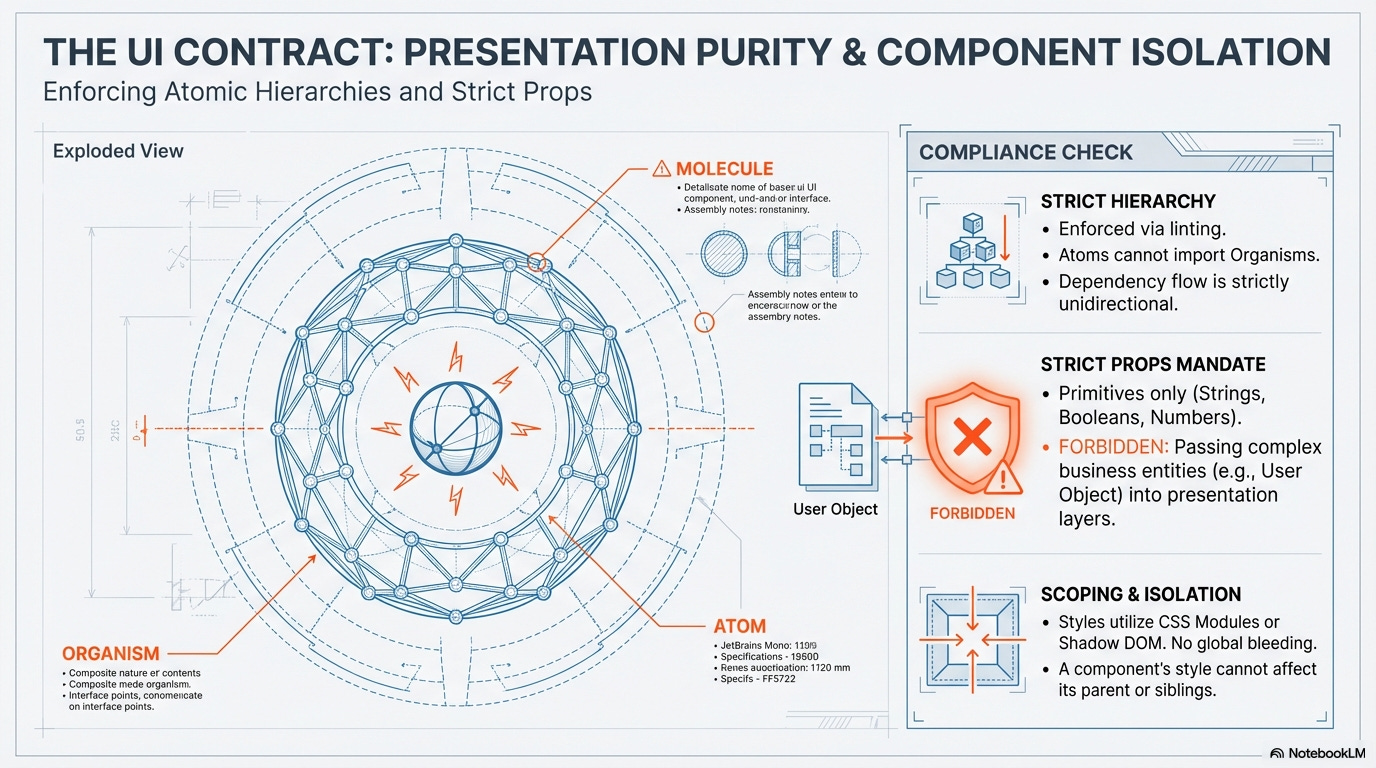

1.2. The UI Contract: Designer ⇒ Developer (Technical Layer)

The Golden Rule:

Design System Components are pure, dumb, and render-only. Application Wrappers are smart, stateful, and think about business logic.

The Mandate:

The Design Tokens are the formal contract. Design is strictly unidirectional; consumers are forbidden from injecting external className props or arbitrary style overrides, treating the component as an immutable black box.
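One way to turn "no className, no style overrides" into a compile-time rule rather than a code-review convention is to declare those props as `never` on the component's props type. The sketch below is framework-agnostic. `ButtonProps` and `buttonClassName` are hypothetical names; in React, the same interface would simply type the component's props.

```ts
type ButtonVariant = "primary" | "secondary";

interface ButtonProps {
  label: string;
  variant?: ButtonVariant;
  onClick?: () => void;
  // Forbidden escape hatches: typed as `never`, so passing any value is a compile-time error.
  className?: never;
  style?: never;
}

// Render-only logic: the class list comes from the design system's variants, never from the consumer.
function buttonClassName({ variant = "primary" }: ButtonProps): string {
  return `ds-button ds-button--${variant}`;
}

// buttonClassName({ label: "Pay", className: "red" }); // does not compile
```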

Governed by Runtime Efficacy:

This contract is now coupled with a Core Web Vitals (CWV) SLO and Accessibility SLOs, ensuring that component usage does not degrade end-user rendering performance (LCP, INP, CLS) or violate critical accessibility standards in production.

Key Artifacts:

Design Tokens Library (Code/JSON), Component Storybook/Documentation, Component Interface Definitions (TypeScript/JSDoc), Core Web Vitals (CWV) SLO Document.

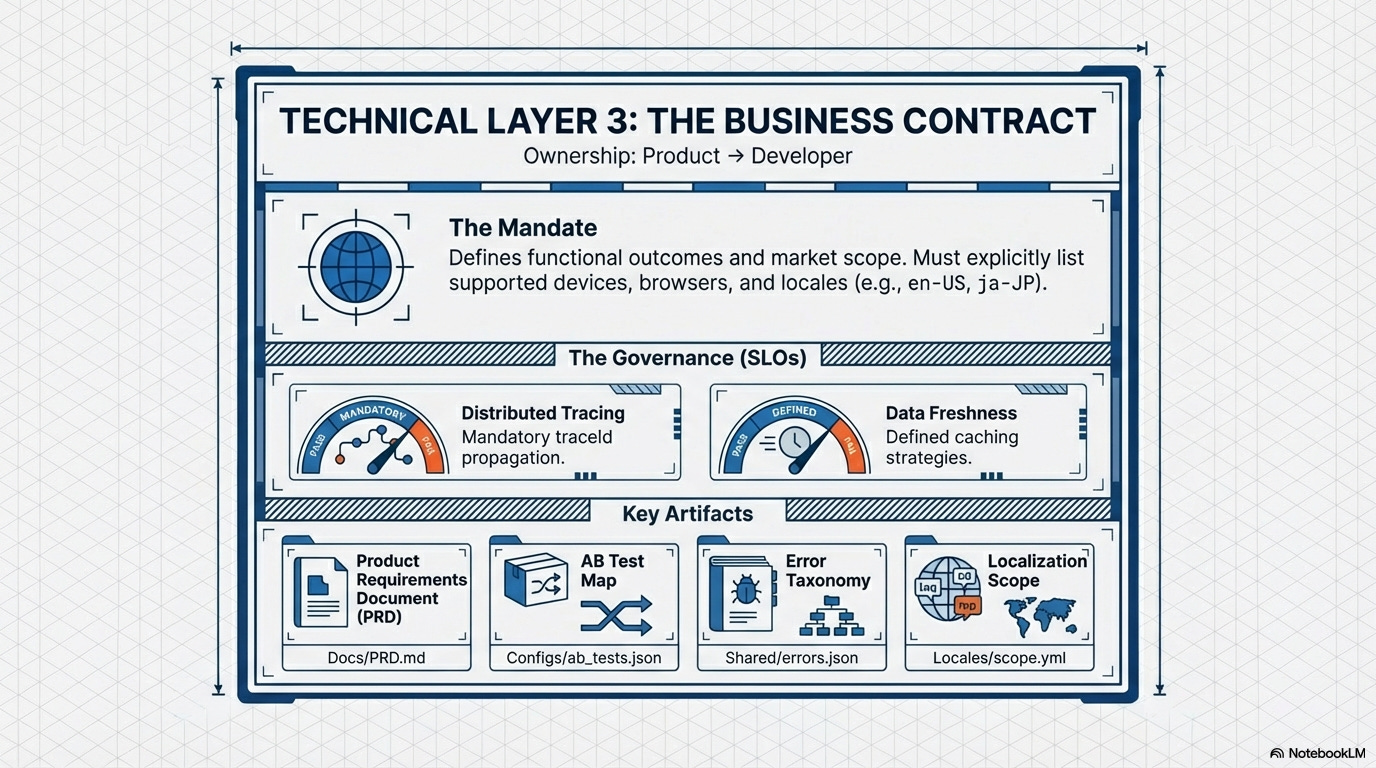

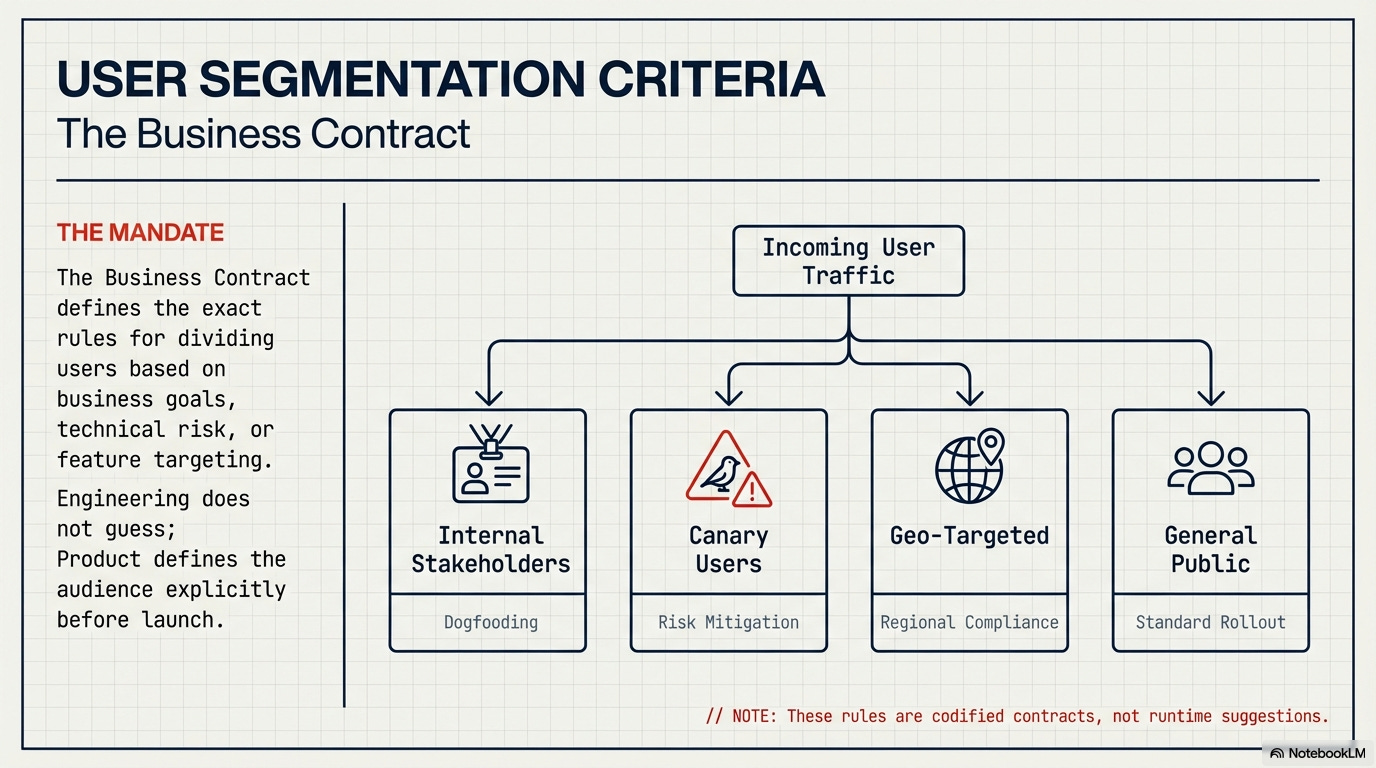

1.3. The Business Contract: Product ⇒ Developer (Technical Layer)

The Mandate:

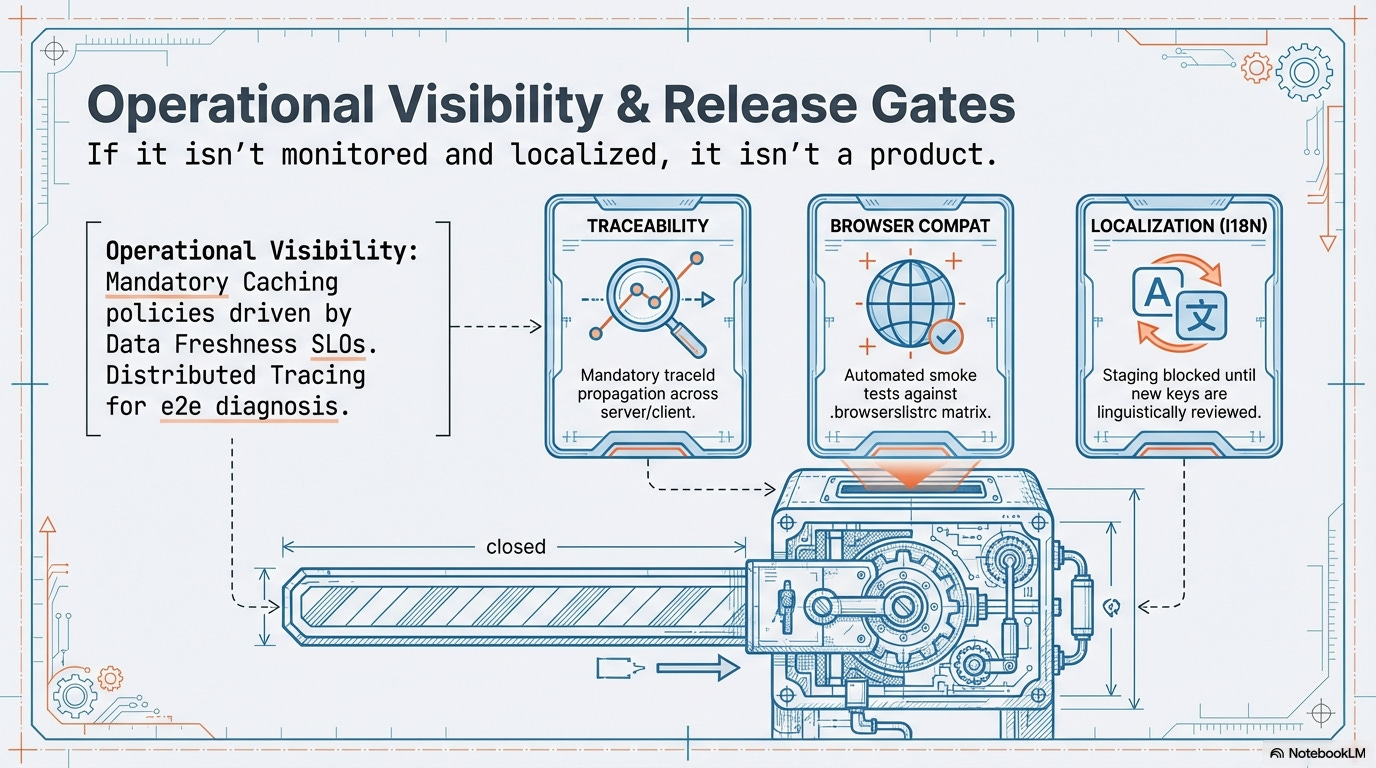

The Product Requirements Document (PRD) and AB Test Map are the formal contract. This governs the functional outcome and the critical tracking event definitions. This contract also explicitly mandates Distributed Tracing to connect user actions across the full server/client flow.

Crucially, the Product team must explicitly define the supported market along the following dimensions:

Devices and Browsers: Dictates the list of supported browsers and devices, preventing over-engineering and ensuring development targets the actual user base.

Regions and Languages (Localization Scope): Explicitly lists all supported locale codes (e.g., `en-US`, `es-MX`, `ja-JP`) and their corresponding regions. This ensures the application is built with the correct I18N support (date formats, currency, etc.).
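Once the locale list is part of the Business Contract, it can live in code as a single typed constant. The minimal sketch below assumes a hypothetical locale-to-currency mapping and an explicit `en-US` fallback. Formatting is delegated to the standard `Intl.NumberFormat` API.

```ts
// Mirrors the contracted locale list from the Business Contract example.
const SUPPORTED_LOCALES = ["en-US", "es-MX", "ja-JP"] as const;
type SupportedLocale = (typeof SUPPORTED_LOCALES)[number];
const DEFAULT_LOCALE: SupportedLocale = "en-US";

// Hypothetical mapping owned by the same contract.
const CURRENCY_BY_LOCALE: Record<SupportedLocale, string> = {
  "en-US": "USD",
  "es-MX": "MXN",
  "ja-JP": "JPY",
};

function isSupportedLocale(tag: string): tag is SupportedLocale {
  return (SUPPORTED_LOCALES as readonly string[]).includes(tag);
}

// Unsupported requests fall back explicitly instead of rendering an uncontracted locale.
function resolveLocale(requested: string): SupportedLocale {
  return isSupportedLocale(requested) ? requested : DEFAULT_LOCALE;
}

// Currency formatting uses the platform's Intl data for the resolved locale.
function formatPrice(amount: number, requested: string): string {
  const locale = resolveLocale(requested);
  return new Intl.NumberFormat(locale, {
    style: "currency",
    currency: CURRENCY_BY_LOCALE[locale],
  }).format(amount);
}
```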

Governed by Runtime Efficacy:

This contract is coupled with an Error Handling Contract, Browser Compatibility Check, the Translation Verification Process, and the Data Freshness SLO (which mandates acceptable caching strategies), ensuring that business logic failures, cross-browser compatibility, end-to-end diagnosis, localization quality, and data latency adhere to unified standards.

Key Artifacts:

Product Requirements Document (PRD), AB Test Map, Error Taxonomy and Logging Schema, Distributed Tracing Configuration, Data Freshness SLO.

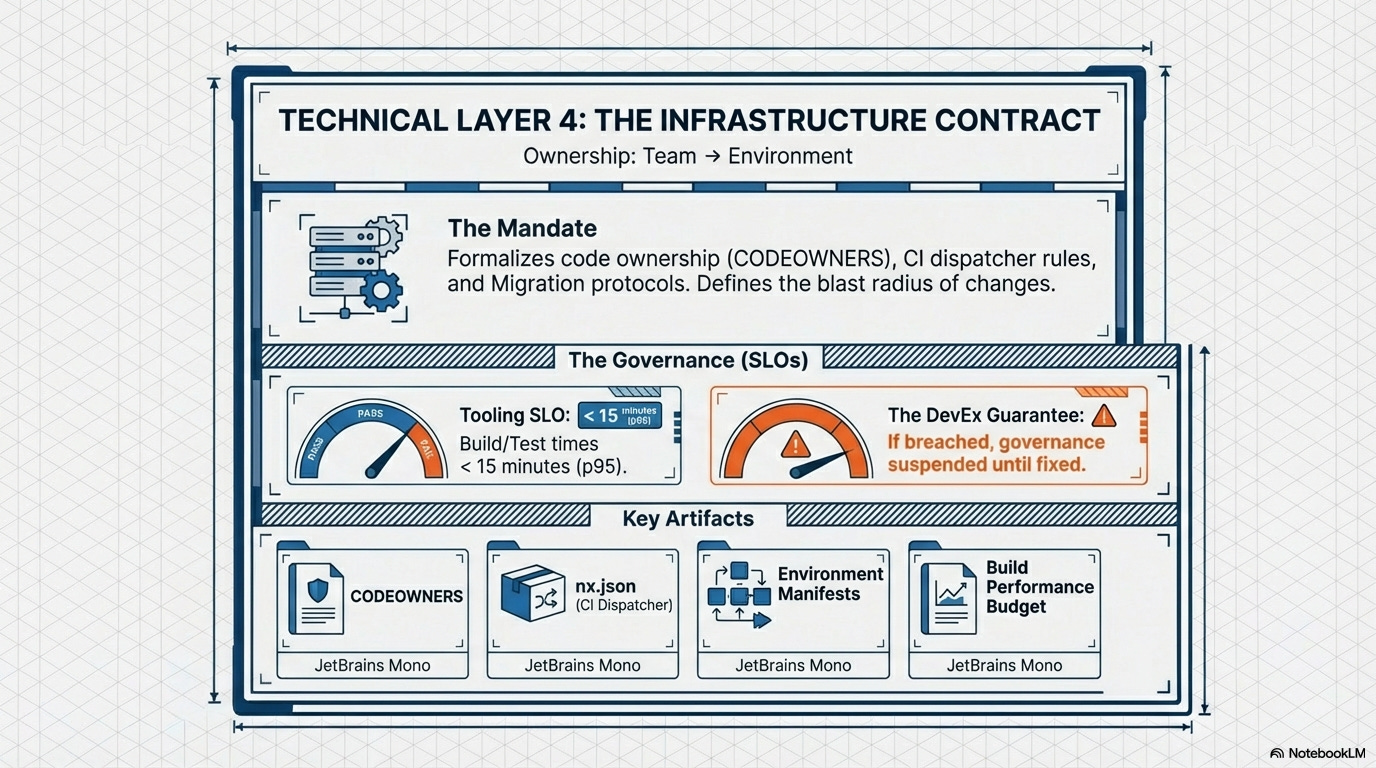

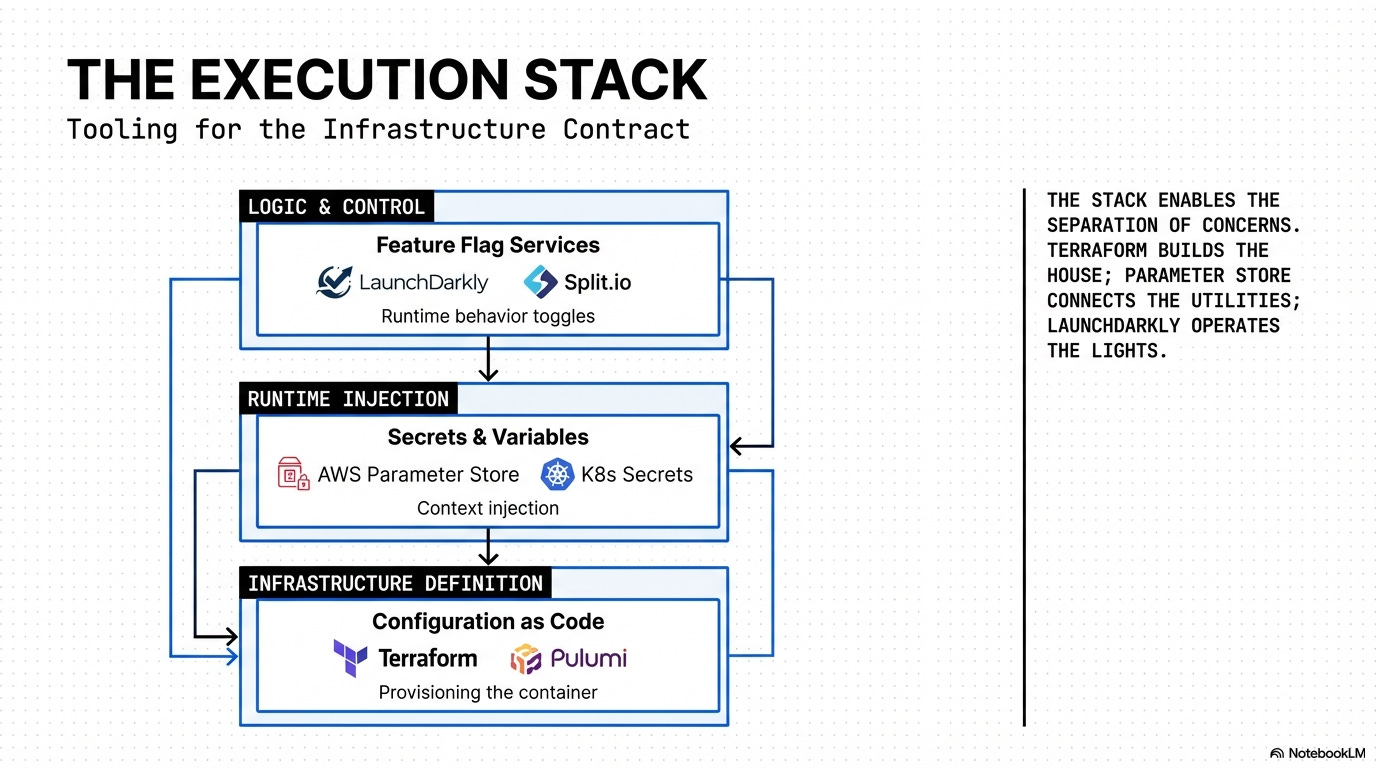

1.4. The Infrastructure Contract: Team ⇒ Environment (Technical Layer)

The Mandate:

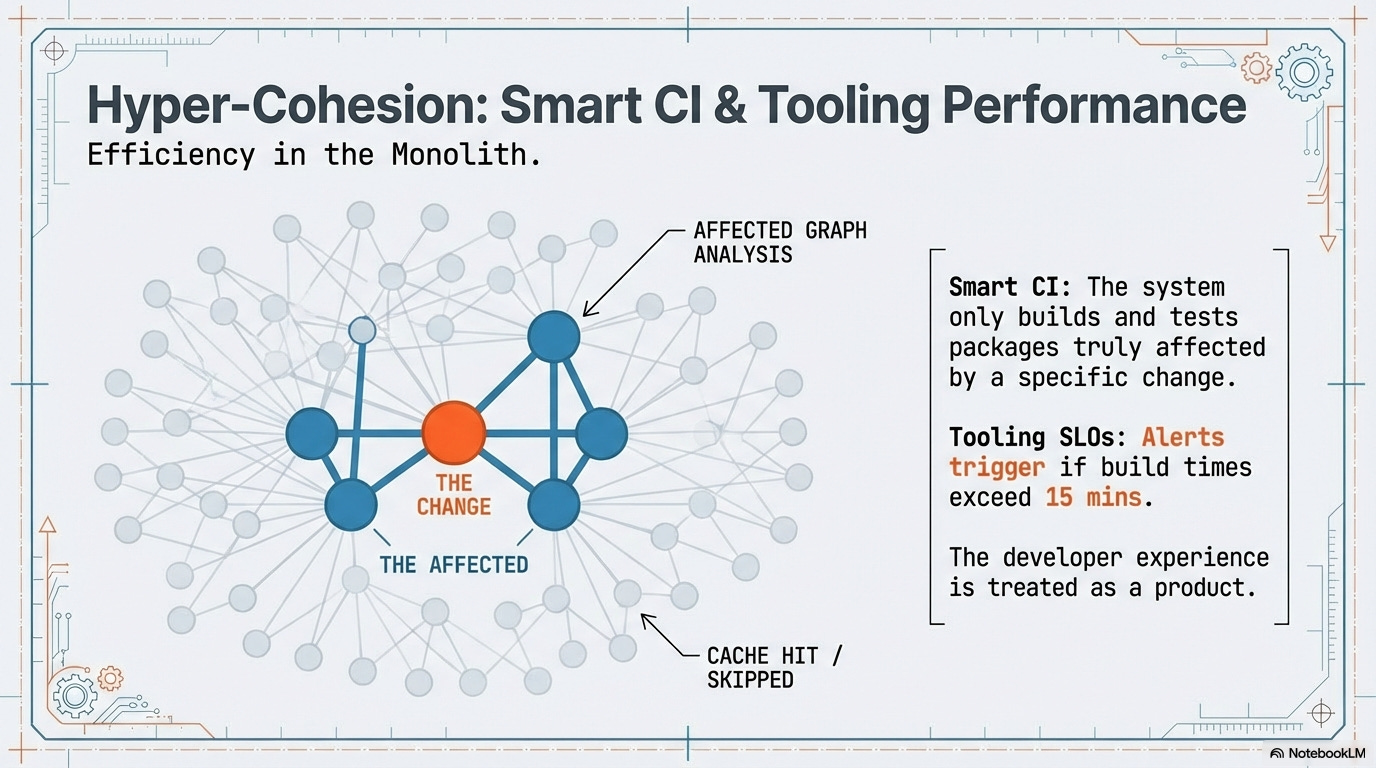

The Infra Contract consists of the Code Ownership file, the CI Dispatcher configuration, and the rules governing large-scale system transitions, including the Migration Contract (Strangler/Re-platforming). This formalizes who can change what, and the blast radius of that change.

Governed by Runtime Efficacy:

This contract is coupled with a Tooling SLO, ensuring that the CI/CD pipeline itself meets performance targets (e.g., maximum 5-minute build time), turning the monorepo tooling into a product with a performance budget.

The DevEx SLO: If, for example, the p95 build/test time measured by the Tooling SLO exceeds 15 minutes, all non-security governance checks are automatically suspended in the CI pipeline until the platform is fixed. This forces the platform team to prioritize developer velocity.
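The DevEx SLO rule is simple enough to sketch directly. The sketch makes three assumptions. First, build durations (in minutes) are collected per pipeline run. Second, each governance check is flagged as security-related or not. Third, the 15-minute threshold from the example applies. `checksToRun` and `GovernanceCheck` are hypothetical names. The p95 uses the nearest-rank method.

```ts
// Nearest-rank percentile over raw samples.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

interface GovernanceCheck {
  id: string;
  security: boolean;
}

const DEVEX_SLO_MINUTES = 15; // example threshold from the DevEx SLO

// When the platform breaches its own SLO, only security checks keep running.
function checksToRun(buildMinutes: number[], checks: GovernanceCheck[]): GovernanceCheck[] {
  const p95 = percentile(buildMinutes, 95);
  return p95 > DEVEX_SLO_MINUTES ? checks.filter((c) => c.security) : checks;
}
```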

Key Artifacts:

Code Owners File (CODEOWNERS), CI Dispatcher Configuration (nx.json or equivalent), Build Performance SLO (Tooling SLO), Environment Configuration Manifests.

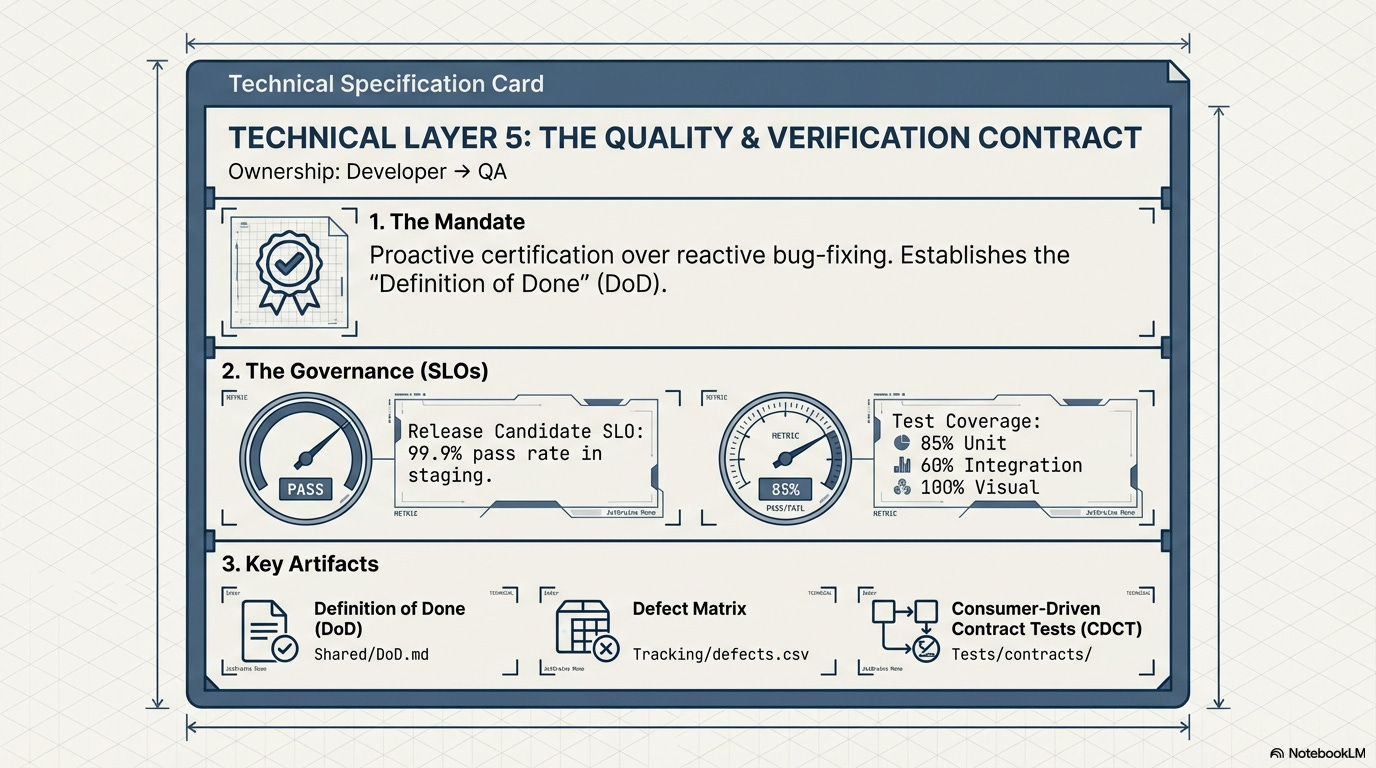

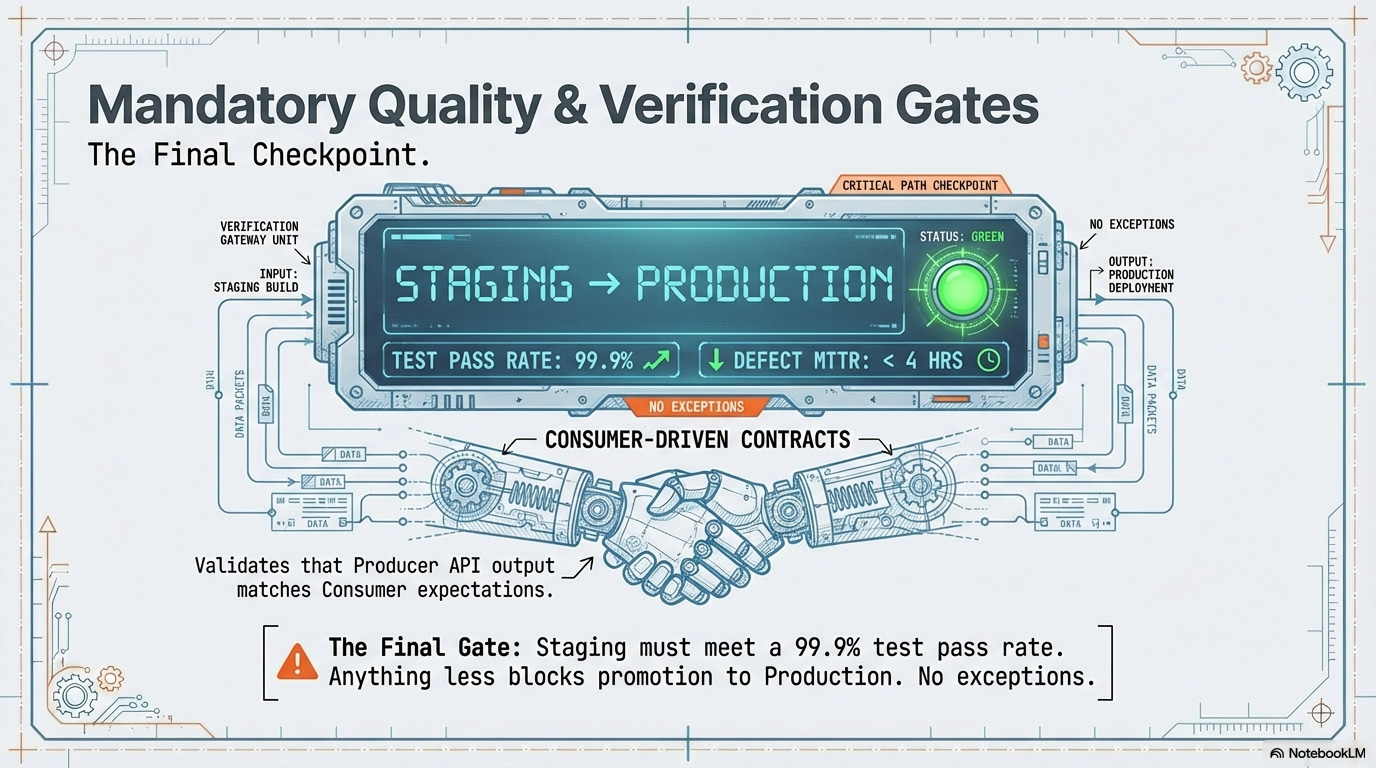

1.5. The Quality & Verification Contract: Developer ⇒ QA (Technical Layer)

The Mandate:

This contract formalizes the verification process, shifting quality from reactive bug-fixing to proactive certification. It defines the mandatory quality gates, inputs, and outputs required before code can be considered ready for release, with a strong focus on the client-side experience.

Governed by Runtime Efficacy:

This contract is coupled with Release Candidate SLOs (e.g., 99.9% test pass rate in staging) and Defect Management SLOs, ensuring that the velocity gained by autonomy is not compromised by unstable releases.

Key Artifacts:

Definition of Done (DoD) Artifact, Mandatory Test Coverage Thresholds (e.g., 85% Unit/60% Integration), E2E Test Strategy Document, and the Defect Prioritization Matrix.

The Two Operational Layers

These layers enforce the cultural and financial sustainability of the architecture, providing the necessary governance for long-term autonomy (Axis 4: Runtime Efficacy).

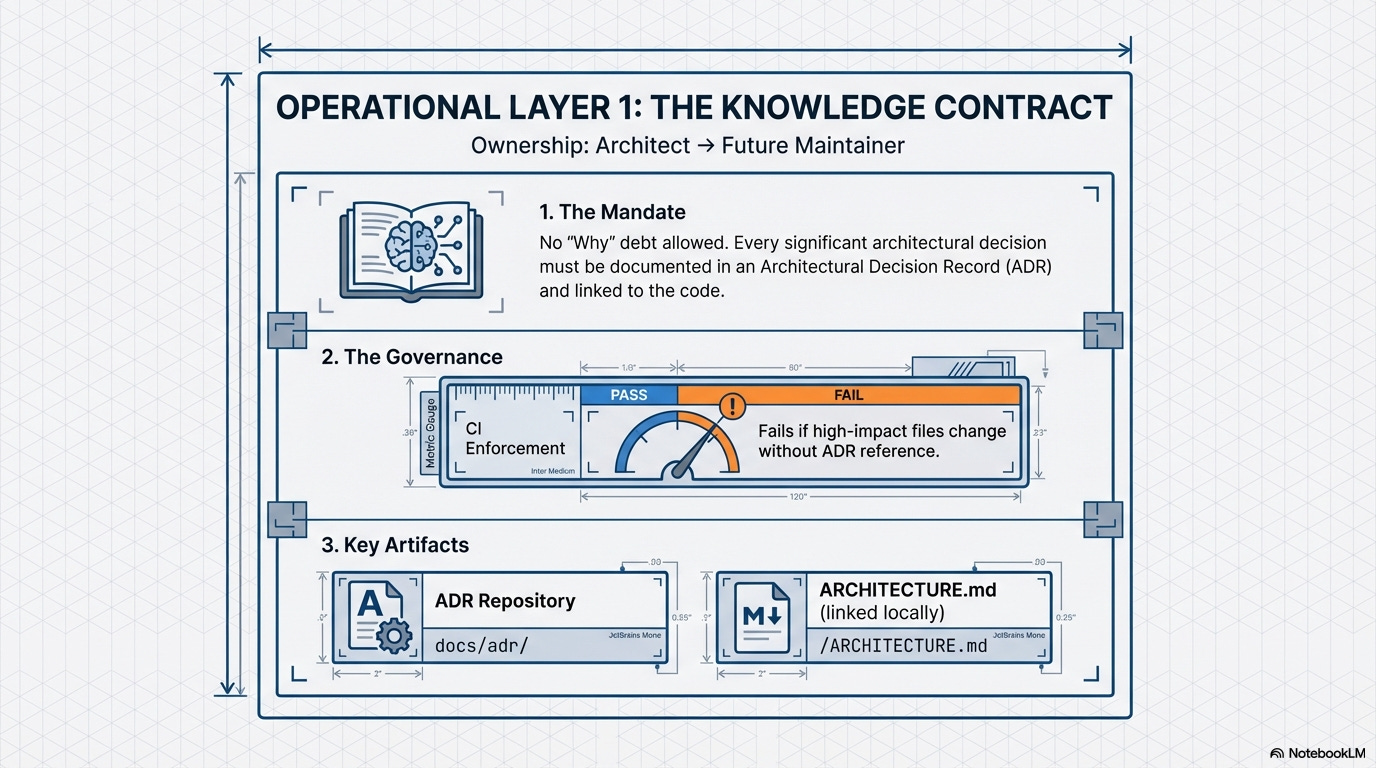

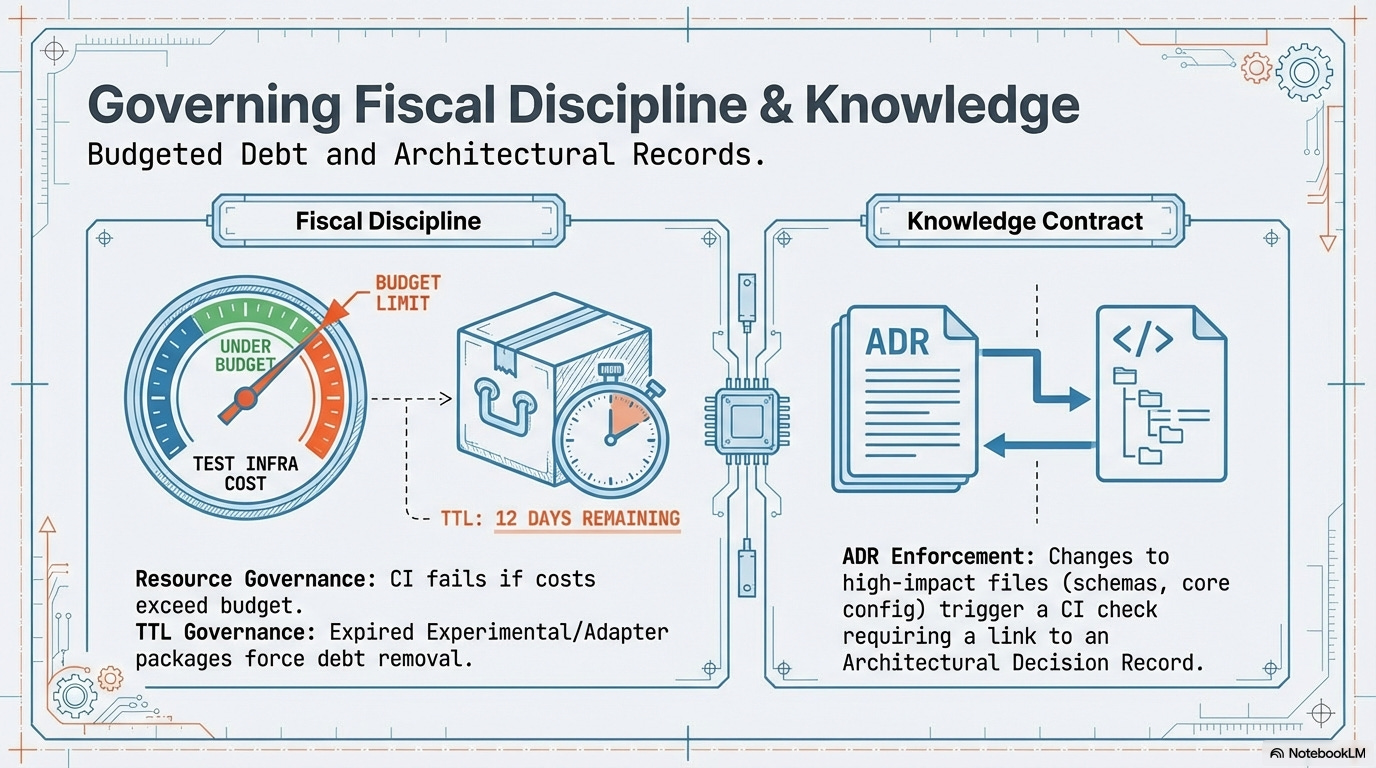

1.6. The Knowledge Contract (Operational Layer: Architectural Rationale)

The Mandate:

The Knowledge Contract requires that the rationale behind every significant architectural decision is documented and permanently linked to the code it affects. This is enforced through Architectural Decision Records (ADRs).

Goal: To prevent the accumulation of “why” debt. If a developer cannot quickly determine why a constraint exists, the constraint is useless and will be violated.

Enforcement: CI tooling mandates that any non-trivial change to an Infrastructure or Business Contract must be accompanied by an update or creation of an ADR, ensuring “the why” is tracked alongside “the what.”
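The ADR requirement can be expressed as a check over the pull request's changed files. The minimal sketch below assumes a hypothetical layout in which contracts live under `contracts/` and ADRs are Markdown files under `docs/adr/`. It also assumes that "trivial" is signalled by something like a PR label, passed in as a flag.

```ts
// Hypothetical repository layout.
const CONTRACT_PATHS = ["contracts/infrastructure/", "contracts/business/", "CODEOWNERS"];
const ADR_PATH = "docs/adr/";

interface AdrCheckResult {
  ok: boolean;
  reason?: string;
}

// A contract change passes only if the same PR adds or updates an ADR (or is marked trivial).
function checkAdrRequirement(changedFiles: string[], trivial = false): AdrCheckResult {
  const touchesContract = changedFiles.some((f) => CONTRACT_PATHS.some((p) => f.startsWith(p)));
  const touchesAdr = changedFiles.some((f) => f.startsWith(ADR_PATH) && f.endsWith(".md"));
  if (!touchesContract || trivial || touchesAdr) return { ok: true };
  return { ok: false, reason: `Contract change without a new or updated ADR under ${ADR_PATH}` };
}
```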

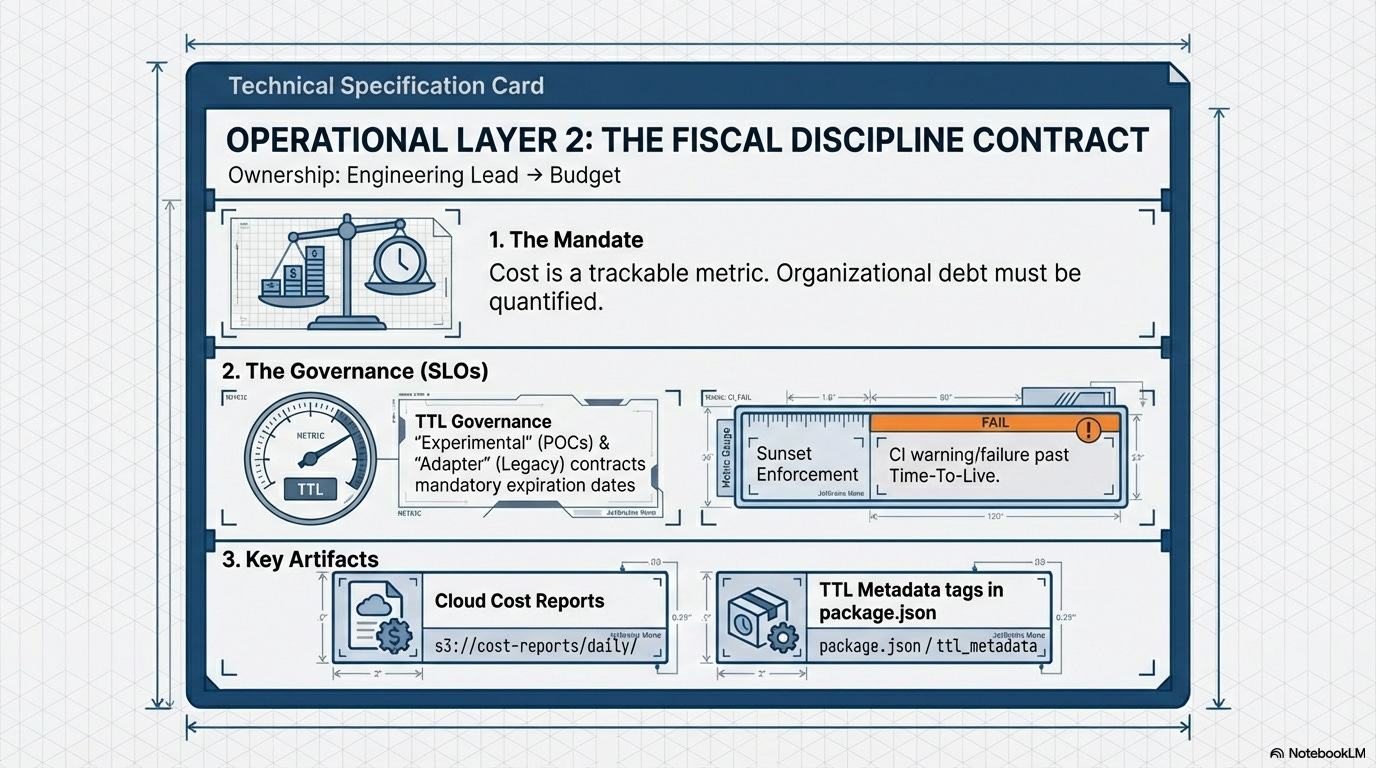

1.7. The Fiscal Discipline Contract (Operational Layer: Cost and Debt Governance)

The Mandate:

The Fiscal Discipline Contract elevates development costs from vague organizational friction to tangible, trackable expenses. It governs two primary cost centers: direct infrastructure consumption and organizational debt.

Resource Governance Check: CI/CD tooling must ensure that the correct cost-allocation tags are present on every provisioned resource. Beyond that gate, we rely on social pressure, via automated weekly “Cloud Cost Reports” visible to VPs and Directors, to enforce fiscal responsibility rather than blocking revenue-generating code.
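The tag check itself can be a small CI step over infrastructure definitions, and the cost reports then handle the social enforcement. The sketch below assumes a hypothetical required-tag set (`team`, `cost-center`, `environment`). It also assumes resources have already been parsed into plain objects.

```ts
// Hypothetical tag policy; the real keys would come from the Fiscal Discipline Contract.
const REQUIRED_TAGS = ["team", "cost-center", "environment"] as const;

interface ResourceSpec {
  id: string;
  tags: Record<string, string | undefined>;
}

// Returns every resource that would show up as unattributable spend in the weekly cost report.
function findUntaggedResources(resources: ResourceSpec[]): { id: string; missing: string[] }[] {
  return resources
    .map((r) => ({
      id: r.id,
      missing: REQUIRED_TAGS.filter((t) => !r.tags[t]?.trim()), // empty values count as missing
    }))
    .filter((r) => r.missing.length > 0);
}
```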

Debt Quantization: The Adapter Contract and its mandatory TTLs (Sunset Phase) are the mechanism for quantifying organizational debt, turning “technical debt” into a scheduled line-item expense that must be addressed or formally budgeted against.

2. Governing the Friction Points: Experimenting, Exceptions and Transitions

The Hypercube integrates contracts to manage the critical friction points where rigidity fails: innovation, legacy integration, and large-scale architectural transitions.

Architectural Contract Governance

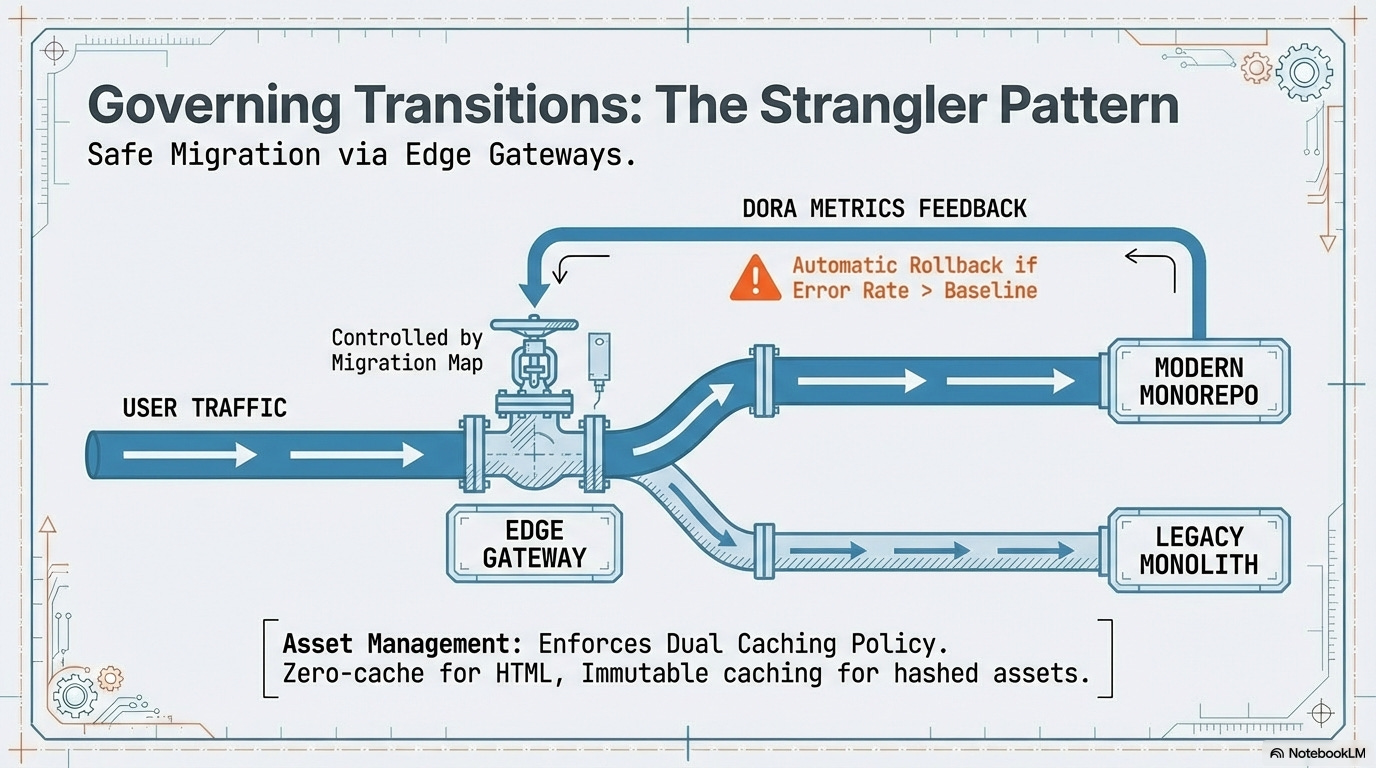

Migration Contract (Strangler/Re-platforming)

Architectural Layer: Infrastructure & Business

Lifecycle Trigger: Phase-Gate SLO Check

Primary Goal: Manages high-risk transitions by mandating Edge Routing, Segmentation, and DORA-driven rollbacks to ensure stability during structural shifts.

Experimental Contract (POCs)

Architectural Layer: UI & Infrastructure

Lifecycle Trigger: TTL Enforcement (90 Days)

Primary Goal: Acts as a formal “governance escape hatch” for rapid prototyping. It prevents Shadow IT and long-term technical debt by ensuring temporary code is either promoted or purged.

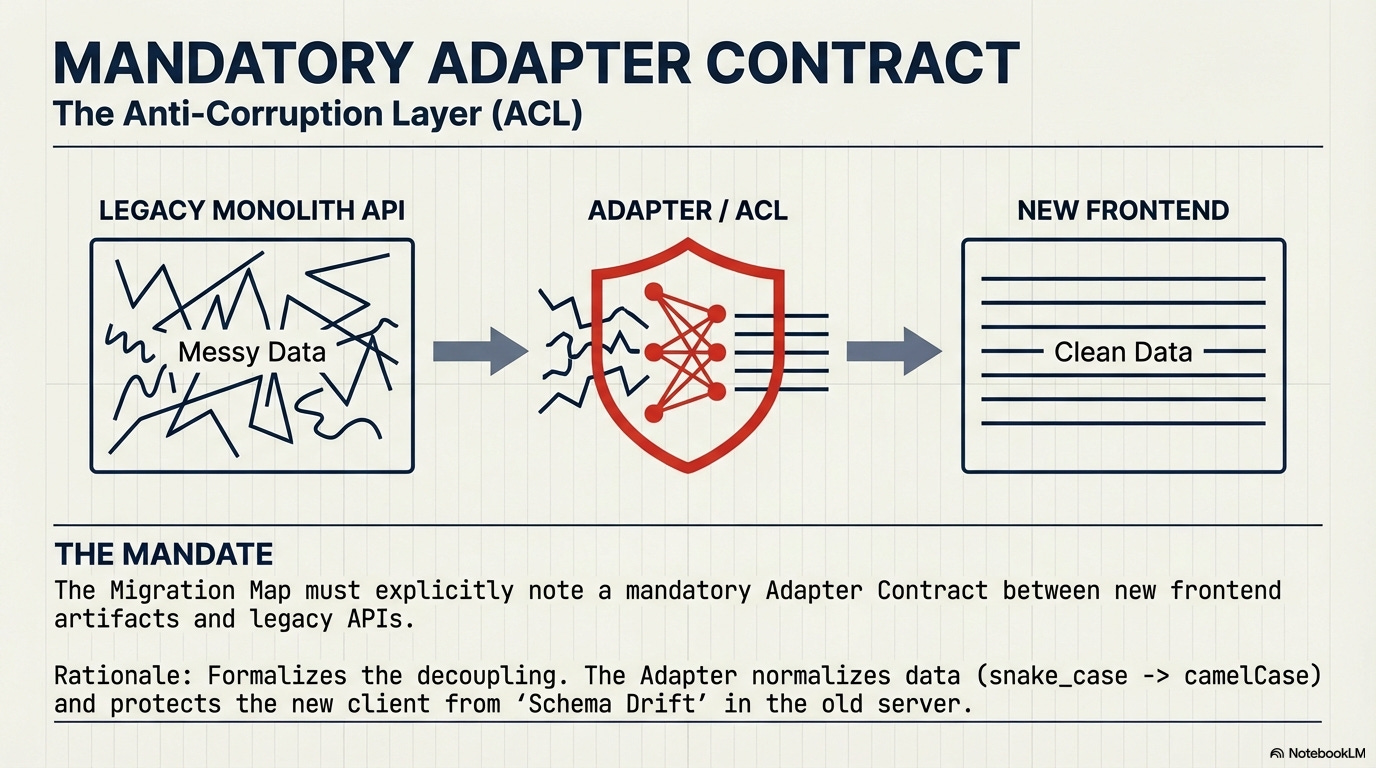

Adapter Contract (Legacy/External)

Architectural Layer: Data & Infrastructure

Lifecycle Trigger: TTL Enforcement (Refactor Date)

Primary Goal: Formally isolates organizational debt. It mandates a compliant translation layer for messy legacy or external APIs to protect the internal ecosystem from “dirty” data or patterns.

Tooling Performance Contract

Architectural Layer: Infrastructure & Runtime Efficacy

Lifecycle Trigger: Build Time Exceeded SLO

Primary Goal: Treats the CI/CD pipeline as a first-class product. It ensures developer velocity is maintained by preventing the Monorepo from being throttled by slow or inefficient tooling.

The Experimental Contract is our most powerful tool for managing human behavior. It acknowledges that engineers must be able to explore, but it sets a clear, mandatory Sunset Phase date (see the Contract Lifecycle below). This is how we budget for innovation, not just react to it.

The Migration Contract formalizes the strategy for large-scale, gradual replacement of core systems (like the Strangler Pattern), ensuring that the Infrastructure and Business teams are aligned on roll-out pace, safety gates, and automatic rollbacks based on DORA metrics.
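In the Migration Contract, Edge Routing and Segmentation usually come down to deterministic bucketing. Each user hashes to a stable bucket, and the rollout percentage decides which side of the strangler they land on. The sketch below assumes an edge function can call plain TypeScript. FNV-1a is one common lightweight hash. The `nextRolloutPercent` phase gate and its 15% change-failure-rate budget are hypothetical stand-ins for the DORA-driven rollback rule.

```ts
// FNV-1a (32-bit) over UTF-16 code units: deterministic and dependency-free.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

type RouteTarget = "legacy" | "new";

// A user always lands in the same bucket (0-99), so raising rolloutPercent only
// moves users from legacy to new, never back and forth.
function routeFor(userId: string, migration: string, rolloutPercent: number): RouteTarget {
  const bucket = fnv1a(`${migration}:${userId}`) % 100;
  return bucket < rolloutPercent ? "new" : "legacy";
}

// Hypothetical phase gate: widen the rollout while the change failure rate is within
// budget; otherwise roll everyone back to legacy.
function nextRolloutPercent(current: number, changeFailureRate: number, maxFailureRate = 0.15): number {
  if (changeFailureRate > maxFailureRate) return 0;
  return Math.min(100, current === 0 ? 5 : current * 2);
}
```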

3. The Contract Lifecycle: Governing the Five Phases

The organizational contracts are now managed across five phases, explicitly adding a governed path for decommissioning (Sunset). This lifecycle defines when and how the Hypercube interacts with the system, ensuring that organizational autonomy (Axis 1) never compromises technical integrity (Axis 4: Runtime Efficacy).

3.1 The Lifecycle, Contracts, and Runtime Efficacy Link

Each phase of the Contract Lifecycle is designed to manage risk and enforce discipline across the Technical and Operational Contracts, applying the Runtime Efficacy principles at the most effective time:

Definition Phase (Rigidity and Clarity):

Contracts Involved: Data, UI, Business, Infrastructure, Knowledge (Operational).

Runtime Efficacy Link: The Knowledge Contract (1.6) is enforced here. Any new contract or significant revision must be accompanied by an Architectural Decision Record (ADR), which permanently links the architectural “why” to the contract’s “what.” This prevents future violations of the contract’s constraints caused by a lack of rationale, thereby reducing operational friction.

Implementation Phase (Parallelism and Mocking):

Contracts Involved: Data, UI, Infrastructure.

Runtime Efficacy Link: The Tooling SLO (part of the Infrastructure Contract, 1.4) ensures that generating client SDKs and running isolated local builds is fast and efficient. This speed is what enables true, high-velocity parallel development, preventing the monorepo itself from becoming a bottleneck.

Validation Phase (Fidelity and Regression):

Contracts Involved: Data, UI, Business, Quality & Verification (Technical).

Runtime Efficacy Link: This is the critical compliance phase enforced by the Quality & Verification Contract (1.5). It mandates Consumer-Driven Contract Testing (CDCT) to confirm that the producer’s implementation (e.g., API response, rendered component) matches the contract definition. This proactive testing minimizes production bugs, directly supporting the Defect Management SLOs and reducing Mean Time to Resolution (MTTR).
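Consumer-Driven Contract Testing inverts the usual direction. The consumer publishes what it reads, and the producer's CI verifies its real responses against that. Dedicated tools such as Pact implement this with recorded interactions. The dependency-free sketch below only checks field presence and primitive types, and `checkoutExpectsProduct` is a hypothetical expectation.

```ts
type FieldType = "string" | "number" | "boolean";
type ConsumerExpectation = Record<string, FieldType>;

// Hypothetical expectation published by the checkout UI for a product endpoint.
const checkoutExpectsProduct: ConsumerExpectation = {
  id: "string",
  name: "string",
  priceCents: "number",
};

// Run in the producer's CI against its real response: extra fields are allowed,
// but missing or retyped fields break the consumer and fail the build.
function verifyAgainstConsumer(response: unknown, expectation: ConsumerExpectation): string[] {
  if (typeof response !== "object" || response === null) return ["response is not an object"];
  const body = response as Record<string, unknown>;
  const violations: string[] = [];
  for (const [field, expected] of Object.entries(expectation)) {
    const actual = typeof body[field];
    if (actual !== expected) violations.push(`${field}: expected ${expected}, got ${actual}`);
  }
  return violations;
}
```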

Evolution Phase (Versioning and Deprecation):

Contracts Involved: Data, UI, Infrastructure.

Runtime Efficacy Link: When a contract changes (e.g., a new Data Contract version is rolled out), the deployment must be validated against production-level performance standards. This ensures the change does not degrade the user experience. The Latency SLO (1.1) and Core Web Vitals (CWV) SLO (1.2) are the final gates; if the new contract version causes a performance regression, deployment is blocked until the SLO is met.
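The Evolution-phase gate can be sketched as a comparison of 75th-percentile field data. The thresholds below are Google's published "good" Core Web Vitals thresholds (LCP 2.5 s, INP 200 ms, CLS 0.1). The additional 10% regression tolerance against the current baseline is a hypothetical addition. How the p75 numbers are collected (RUM, canary traffic) is left open.

```ts
interface VitalsP75 {
  lcpMs: number;
  inpMs: number;
  cls: number;
}

// Google's "good" thresholds, assessed at the 75th percentile of page loads.
const CWV_SLO: VitalsP75 = { lcpMs: 2500, inpMs: 200, cls: 0.1 };

// Blocks the rollout if the candidate breaches the SLO, or (hypothetical extra rule)
// regresses the current baseline by more than `tolerance` while still under the SLO.
function evaluateRollout(baseline: VitalsP75, candidate: VitalsP75, tolerance = 0.1): string[] {
  const failures: string[] = [];
  for (const key of Object.keys(CWV_SLO) as (keyof VitalsP75)[]) {
    if (candidate[key] > CWV_SLO[key]) failures.push(`${key} breaches SLO`);
    else if (candidate[key] > baseline[key] * (1 + tolerance)) failures.push(`${key} regressed`);
  }
  return failures;
}
```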

Sunset Phase (Controlled Decommissioning and Debt Removal):

Contracts Involved: Adapter, Experimental, Fiscal Discipline (Operational).

Runtime Efficacy Link: The Fiscal Discipline Contract (1.7) provides the enforcement via TTL Governance. When a temporary artifact (like an Experimental POC or a legacy Adapter layer) passes its Time-To-Live expiration date, the CI build is programmed to warn the team. This mechanism formally quantifies organizational debt as a fixed expense of developer time that must be budgeted and addressed, preventing debt from silently degrading long-term velocity and maintainability.
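TTL governance needs only a manifest and a clock. The sketch below assumes a hypothetical manifest in which each Experimental POC or Adapter declares an owner and an ISO expiry date. It returns findings for the CI job to print as warnings. `experimentExpiry` applies the Experimental Contract's 90-day TTL.

```ts
// Hypothetical manifest entry declared next to the code it expires.
interface TtlEntry {
  path: string;
  kind: "experimental" | "adapter";
  owner: string;
  expires: string; // ISO date, e.g. "2024-03-31"
}

interface TtlFinding {
  path: string;
  owner: string;
  daysOverdue: number;
}

const DAY_MS = 24 * 60 * 60 * 1000;

// Findings are meant to be reported as CI warnings, not failures.
function expiredArtifacts(entries: TtlEntry[], today: Date): TtlFinding[] {
  return entries
    .map((e) => ({ e, overdue: Math.floor((today.getTime() - Date.parse(e.expires)) / DAY_MS) }))
    .filter(({ overdue }) => overdue > 0)
    .map(({ e, overdue }) => ({ path: e.path, owner: e.owner, daysOverdue: overdue }));
}

// The Experimental Contract's 90-day TTL, computed in UTC.
function experimentExpiry(created: Date): string {
  return new Date(created.getTime() + 90 * DAY_MS).toISOString().slice(0, 10);
}
```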

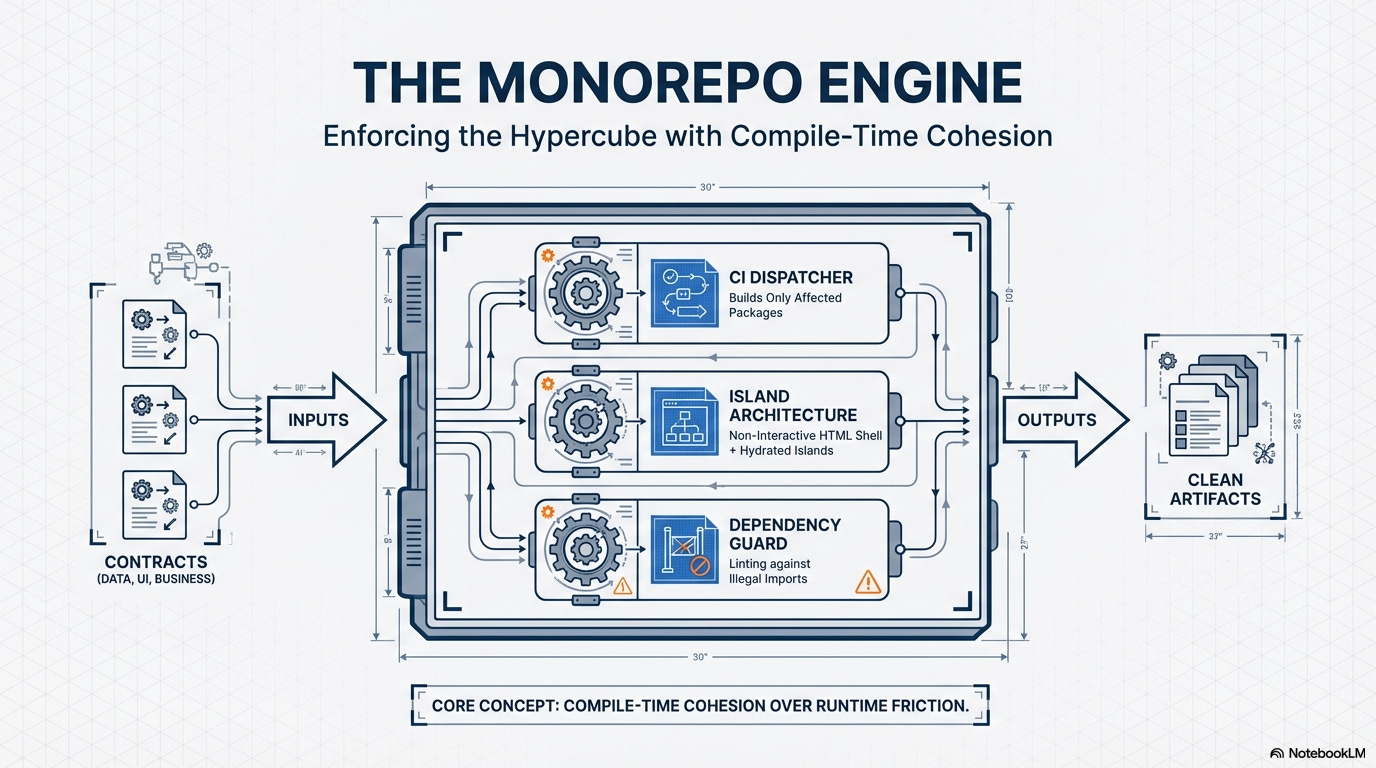

Chapter 3. The Monorepo Engine: Enforcing the Hypercube’s Contracts

The Monorepo, powered by modern rendering patterns, is the engine that enforces the Architectural Governance Hypercube. It achieves autonomy by favoring compile-time cohesion and strictly separating concerns at the code level. It is the physical vessel that carries the seven contracts and the five phases, allowing us to enforce architectural discipline with zero runtime friction.

“Before we dive into the technicalities, let’s acknowledge the simple truth of the frontend world: every architectural paradigm, from Micro Frontends to Islands to Single Page Applications, is ultimately built upon the humble foundation of pages and routes. For all the complex diagrams and deep theory, the fundamental user interaction remains: the user hits a URL, and a page renders. That’s it. Our job is simply to make that transition lightning fast and governance-compliant.”

1. The UI Contract (Presentation Purity and Isolation)

The UI Contract guarantees visual consistency and component isolation by strictly limiting a component’s ability to affect external elements or inject business logic into its presentation layer.

I. Component Structure & Isolation

Mandatory Atomic Design Principles: All UI development must strictly adhere to Atomic Design principles (Atoms, Molecules, Organisms, Templates, Pages). This establishes a clear, mandatory hierarchy for composition and reusability.

Tooling Example: ESLint custom rules that analyze import paths (e.g., prohibiting an Atom from importing an Organism or a Page).
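The core of such an ESLint rule is a predicate over two file paths. The rule's `ImportDeclaration` visitor would call it and report when it returns `false`. The sketch below assumes a hypothetical `libs/ui/<layer>/...` folder convention. It allows imports from the same layer, a choice teams may want to tighten.

```ts
// Ordered from lowest to highest in the Atomic Design hierarchy.
const LAYERS = ["atoms", "molecules", "organisms", "templates", "pages"] as const;
type Layer = (typeof LAYERS)[number];

// Hypothetical convention: the layer is a path segment such as ".../atoms/...".
function layerOf(filePath: string): Layer | undefined {
  return LAYERS.find((layer) => filePath.includes(`/${layer}/`));
}

// A module may import from its own layer or any lower one, never from a higher one.
function isAllowedImport(importer: string, imported: string): boolean {
  const from = layerOf(importer);
  const to = layerOf(imported);
  if (!from || !to) return true; // outside the atomic hierarchy: not this rule's concern
  return LAYERS.indexOf(to) <= LAYERS.indexOf(from);
}
```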

Strict Prop Type Scrutiny (No Business Props): Presentation components can only accept primitive or presentation-related props (e.g., `label`, `count`, `onClick`). Props related to core business entities (e.g., passing a full `Cart` object or complex payment data) are forbidden. Data must be adapted (destructured/simplified) by an upstream Container/Template first.

Tooling Example: TypeScript with strict mode enforced, coupled with JSDoc/TSDoc linting rules that flag complex, non-presentation interfaces in component definitions.
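Business entities should stop at the adaptation step. The sketch below uses hypothetical `Cart` and `CartBadgeProps` shapes. The container owns the entity and hands the presentation component nothing but primitives.

```ts
// Business entity owned by the checkout domain (hypothetical shape).
interface Cart {
  id: string;
  items: { sku: string; quantity: number; unitPriceCents: number }[];
  paymentMethodId?: string;
}

// Presentation props: primitives only, so no business entity leaks into the component.
interface CartBadgeProps {
  label: string;
  count: number;
}

// The container/template adapts the entity before it reaches the presentation layer.
function toCartBadgeProps(cart: Cart): CartBadgeProps {
  const count = cart.items.reduce((total, item) => total + item.quantity, 0);
  return { label: count === 1 ? "1 item" : `${count} items`, count };
}
```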

No Style Override (Isolation): Components must utilize scoping techniques to ensure their styles cannot bleed out or override the styles of the Host or other components. This guarantees true component portability.

Tooling Example: CSS Modules, CSS-in-JS solutions with strong scoping, or frameworks that enforce Shadow DOM boundaries.

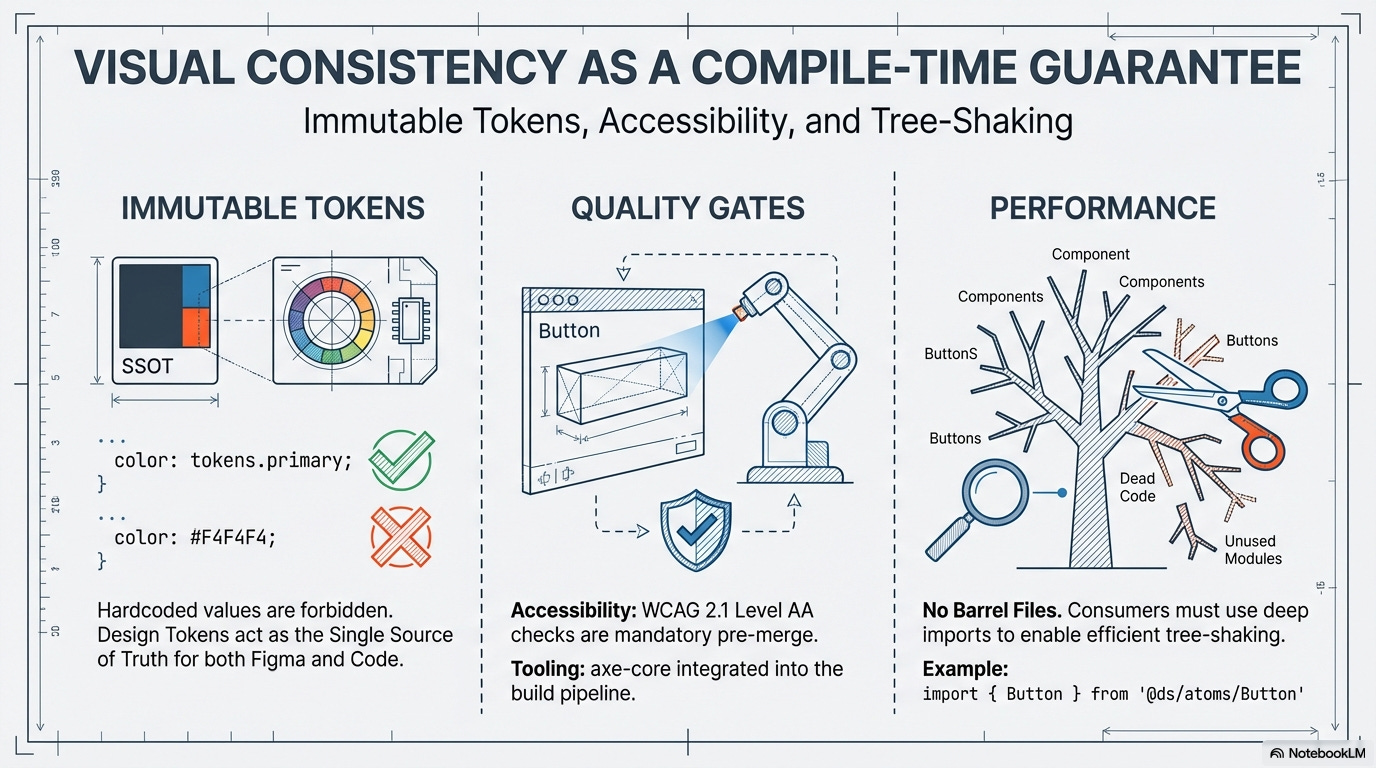

II. Styling and Visual Language

Immutable Tokens & Utility Classes: All styling must use pre-approved, immutable design tokens (colors, spacing, typography) and approved utility classes (e.g., Tailwind CSS). Direct, hardcoded pixel values, magic numbers, or non-tokenized hex codes are strictly forbidden.

Tooling Example: Stylelint configuration that fails the build upon detection of non-tokenized variables or disallowed CSS properties (e.g., direct `font-size` declarations).

Design Token Single Source of Truth (SSOT): The design token system must be version-controlled and serve as the single source of truth for both design artifacts (e.g., Figma) and code implementation (e.g., CSS/JS variables).

Tooling Example: Style Dictionary or similar token management tools to generate platform-specific files from a single JSON/YAML source.
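Style Dictionary's core idea is to compile one token source into per-platform outputs. The function below is a simplified stand-in, not Style Dictionary's API. It assumes a hypothetical token shape in which leaves are `{ value }` objects, and it emits CSS custom properties.

```ts
interface TokenLeaf {
  value: string;
}
type TokenTree = { [key: string]: TokenTree | TokenLeaf };

function isLeaf(node: TokenTree | TokenLeaf): node is TokenLeaf {
  return typeof (node as TokenLeaf).value === "string";
}

// Walks the token tree and turns each leaf path into a CSS variable name.
function toCssVariables(tree: TokenTree, prefix: string[] = []): string[] {
  const lines: string[] = [];
  for (const [key, node] of Object.entries(tree)) {
    const path = [...prefix, key];
    if (isLeaf(node)) lines.push(`--${path.join("-")}: ${node.value};`);
    else lines.push(...toCssVariables(node, path));
  }
  return lines;
}
```

The same tree could feed a second emitter (for example, a TypeScript constants file), which is what keeps design and code on a single source of truth.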

III. Quality, Performance, and Governance

Automated Accessibility Testing (WCAG SLO): All new or modified UI components must pass automated accessibility checks against the Design System’s defined WCAG 2.1 Level AA standard threshold. This validates the Accessibility SLO (Service Level Objective) before merging.

Tooling Example: Axe-core integrated into the Unit/Integration test suite (Jest/Cypress/Playwright) to enforce the WCAG threshold as a mandatory quality gate.

Avoid Barrel Files (Performance & Build Speed): The Design System package must not use barrel files (e.g., `index.ts` files that re-export everything). Consumers must use deep, specific imports (e.g., `import { Button } from '@ds/lib/components/Button/Button'`).

Rationale: This maximizes the effectiveness of tree-shaking, minimizes the final bundle size for consuming applications, and significantly improves development and build performance by avoiding unnecessary module loading.

Versioning and Change Management (SemVer): The Design System package must adhere to Semantic Versioning (SemVer). Major, minor, and patch releases must be clearly documented, and breaking changes (major version bumps) require an explicit migration guide and impact analysis.

Tooling Example: Release tooling that enforces SemVer compliance and generates changelogs based on commit message structure (e.g., Conventional Commits).

Tree-Shaking Optimization: All component exports must be structured to allow for efficient tree-shaking by consumer applications, ensuring that unused components or styles are automatically eliminated from production bundles. This is critical for application performance.

Tooling Example: Webpack/Rollup configuration and a package export structure that leverages ES modules (`import`/`export`).

Documentation as Code (DAC): Component documentation (Props, Examples, Usage Guidelines, Accessibility Notes) must be generated directly from the source code (e.g., TypeScript interfaces and TSDoc/JSDoc comments) to ensure documentation never drifts from implementation.

Tooling Example: Storybook with Doc Blocks or similar tools that automatically consume TypeScript definitions.

2. The Data Contract (Schema and Type Safety)

The Data Contract mandates strongly typed and validated data exchange, eliminating manual API integration effort and the risk of runtime type errors.

Contract-Generated API Clients: Teams must consume APIs using clients automatically generated from the formal OpenAPI or GraphQL Schema. This guarantees type-safety at compile-time and eliminates drift between the server and the client.

Tooling Example: OpenAPI Generator (for REST) or GraphQL Codegen (for GraphQL) integrated into the monorepo’s build step.

The Adapter Contract Flow: Any interaction with an external or legacy system that violates the Data Contract (e.g., using snake_case, incorrect date formats) must be wrapped within a designated Adapter Package that handles serialization, deserialization, and validation.

Tooling Example: Monorepo enforcement via dependency-graph analysis (e.g., Nx project tags combined with the `@nx/enforce-module-boundaries` lint rule) to ensure that calls to legacy service domains are only routed through the official adapter package.
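At its simplest, an Adapter Package is a typed translation function at the boundary. The sketch below assumes a hypothetical legacy order payload with snake_case keys, `DD/MM/YYYY` dates, and amounts as strings. Only this module ever sees the legacy type.

```ts
// Shape returned by a hypothetical legacy service.
interface LegacyOrderDto {
  order_id: string;
  customer_name: string;
  created_on: string; // "DD/MM/YYYY"
  total_amount: string; // decimal as a string, e.g. "19.99"
}

// Shape required by the internal Data Contract.
interface Order {
  orderId: string;
  customerName: string;
  createdAt: string; // ISO "YYYY-MM-DD"
  totalCents: number;
}

function parseLegacyDate(ddmmyyyy: string): string {
  const match = /^(\d{2})\/(\d{2})\/(\d{4})$/.exec(ddmmyyyy);
  if (!match) throw new Error(`Unrecognised legacy date: ${ddmmyyyy}`);
  const [, dd, mm, yyyy] = match;
  return `${yyyy}-${mm}-${dd}`;
}

// The single entry point: everything downstream only ever sees `Order`.
function adaptLegacyOrder(dto: LegacyOrderDto): Order {
  const total = Number(dto.total_amount);
  if (!Number.isFinite(total)) throw new Error(`Invalid total: ${dto.total_amount}`);
  return {
    orderId: dto.order_id,
    customerName: dto.customer_name,
    createdAt: parseLegacyDate(dto.created_on),
    totalCents: Math.round(total * 100), // round away floating-point noise
  };
}
```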

Mandatory Runtime Validation & Data Quality Checks: While type safety is enforced at compile-time, all incoming API payloads must undergo runtime validation before use to guarantee data integrity against external changes. This must include Data Quality SLO assertions (e.g., non-negative prices, valid date ranges) to prevent stale or nonsensical data from corrupting the UI.

Tooling Example: Zod or Yup schemas applied immediately upon API response consumption, with custom rules enforcing Data Quality constraints (e.g., `z.number().min(0, "Price cannot be negative")`).
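Zod expresses the rule above in one line. To keep the example dependency-free, the sketch below hand-writes the same kind of guard. The `PriceQuote` shape and its two Data Quality rules (non-negative price, `validFrom` not after `validTo`) are illustrative assumptions.

```ts
interface PriceQuote {
  sku: string;
  priceCents: number;
  validFrom: string;
  validTo: string;
}

type ParseResult<T> = { ok: true; value: T } | { ok: false; errors: string[] };

// Runs right after JSON parsing, before any UI code touches the payload.
function parsePriceQuote(input: unknown): ParseResult<PriceQuote> {
  const errors: string[] = [];
  const { sku, priceCents, validFrom, validTo } =
    (typeof input === "object" && input !== null ? input : {}) as Record<string, unknown>;

  if (typeof sku !== "string" || sku.length === 0) errors.push("sku must be a non-empty string");

  if (typeof priceCents !== "number" || !Number.isFinite(priceCents)) errors.push("priceCents must be a finite number");
  else if (priceCents < 0) errors.push("Price cannot be negative"); // Data Quality rule

  const fromMs = typeof validFrom === "string" ? Date.parse(validFrom) : NaN;
  const toMs = typeof validTo === "string" ? Date.parse(validTo) : NaN;
  if (Number.isNaN(fromMs) || Number.isNaN(toMs)) errors.push("validFrom/validTo must be parseable dates");
  else if (fromMs > toMs) errors.push("validFrom must not be after validTo"); // valid date range rule

  return errors.length > 0
    ? { ok: false, errors }
    : {
        ok: true,
        value: { sku: sku as string, priceCents: priceCents as number, validFrom: validFrom as string, validTo: validTo as string },
      };
}
```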

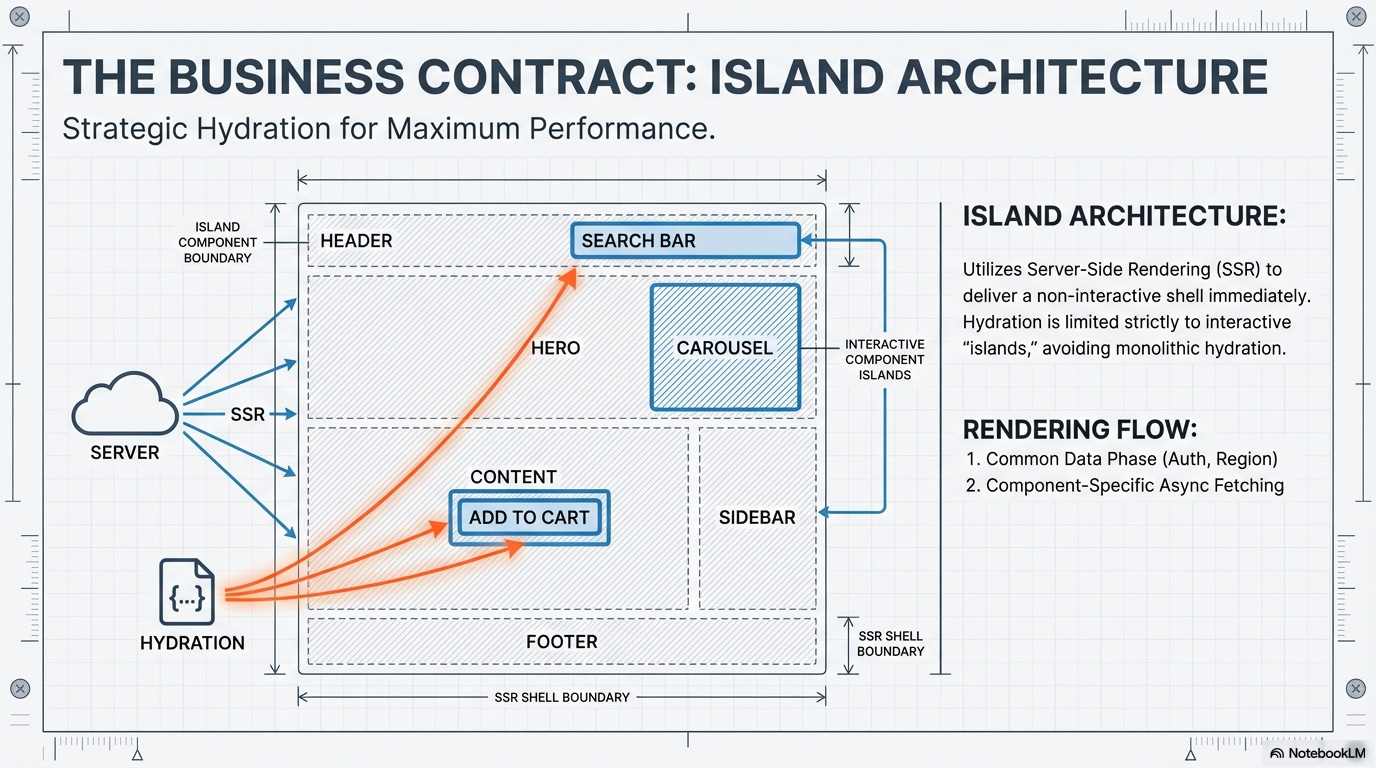

3. The Business Contract

The Business Contract governs where and how critical logic is executed, focusing on performance, cost efficiency, and operational excellence through robust monitoring.

1. Architecture & Hydration Strategy (Island Architecture) 🏝️

These mandates define the structural approach for delivery, emphasizing server-side performance.

Island Architecture Enforcement: The Island Architecture provides technical isolation without the distributed overhead, acting as the structural enforcement for the core contracts. This architectural style fundamentally relies on Server-Side Rendering (SSR) or Static Site Generation (SSG) to deliver a full, non-interactive HTML shell quickly. It achieves performance gains by strategically limiting client-side hydration only to specific, highly interactive “islands.”

Initial Single-Island Approach: For governance simplicity, any page relying on classical SSR or SSG is initially considered a single, fully-hydrated client island. Technical debt must be logged to break it down into smaller, truly isolated islands only when performance mandates the decomposition. This prevents premature micro-fragmentation.

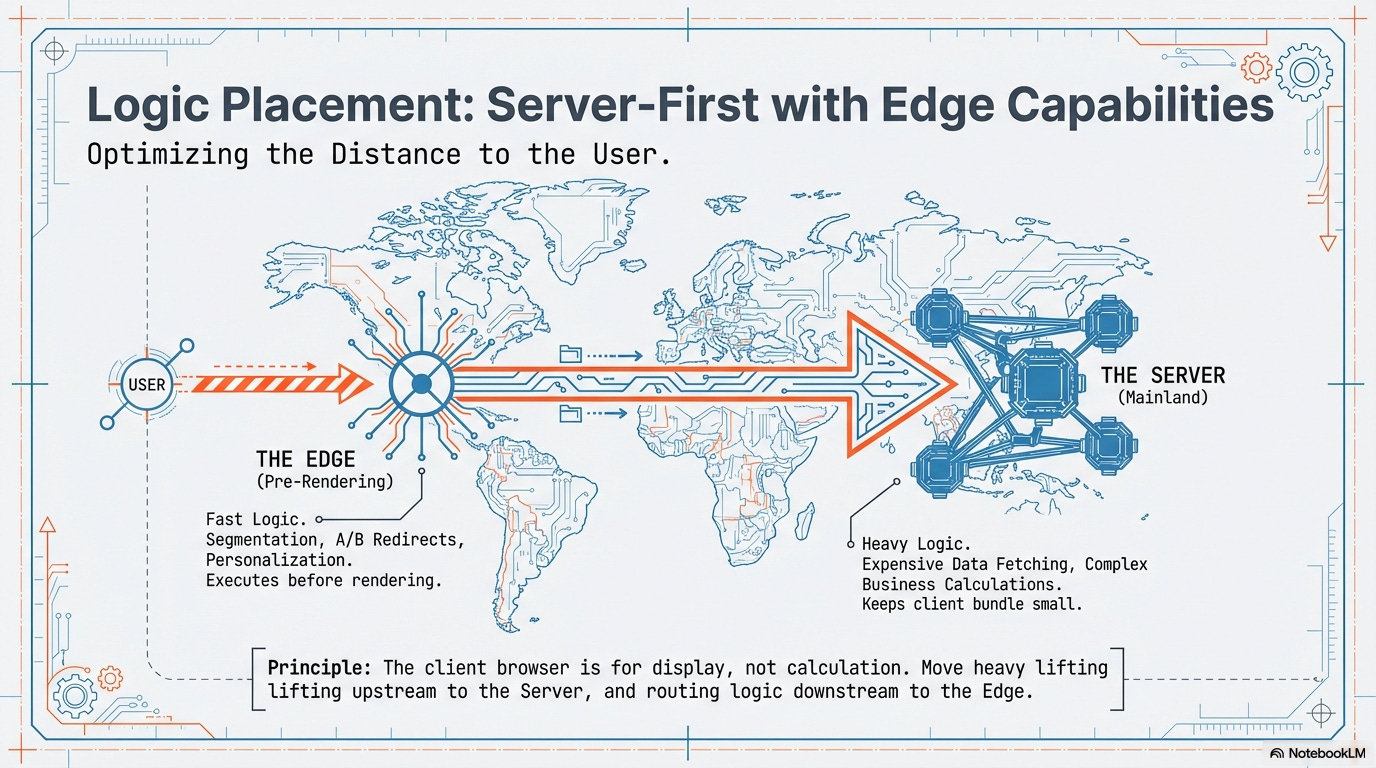

Server/Edge Logic (The Mainland)

All expensive data fetching, user segmentations, and critical business logic must reside on the server or at the edge to maintain minimal client bundles and significantly improve Time-to-First-Byte (TTFB).

Edge Logic (Pre-Rendering):

Manages segmentation and A/B test redirection.

Handles any business flow that must execute before the rendering phase (e.g., URL rewrites, high-speed personalization).

Tooling Example: Vercel/Cloudflare Edge Functions.

Server Logic (Data & Business Core):

Manages the bulk of expensive data fetching and critical, non-time-sensitive business logic.

Tooling Example: Next.js/Remix Server Components.

Rendering Phase Flow

This phase details how server/edge logic integrates with the rendering process, separated into two key data stages.

Common Data Phase (Page Context):

Executed first to gather foundational data required by all subsequent server components on the page.

Retrieves context such as authentication sessions, region, and language preferences.

Component-Specific Phase (Asynchronous Data Fetching):

After the common context is established, individual Server Components call their own asynchronous functions (e.g., `async`/`await` within the component) to fetch the data specific to their rendering needs.
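The two data stages can be modelled in plain TypeScript to make their ordering explicit. This is a sketch, not framework code: `getPageContext`, the header names, and the two render functions are hypothetical stand-ins for Server Components. Frameworks such as Next.js generally let sibling async Server Components fetch concurrently on their own. Here, that concurrency is spelled out with `Promise.all`.

```ts
// Common Data Phase: gathered once and shared by every component on the page.
interface PageContext {
  userId: string | null;
  region: string;
  locale: string;
}

async function getPageContext(headers: Record<string, string | undefined>): Promise<PageContext> {
  return {
    userId: headers["x-user-id"] ?? null,
    region: headers["x-region"] ?? "US",
    locale: headers["accept-language"]?.split(",")[0] ?? "en-US",
  };
}

// Component-Specific Phase: each "component" fetches its own data using the shared context.
async function renderRecommendations(ctx: PageContext): Promise<string> {
  return `<section data-region="${ctx.region}">recommendations for ${ctx.userId ?? "guest"}</section>`;
}

async function renderPromoBanner(ctx: PageContext): Promise<string> {
  return `<aside lang="${ctx.locale}">promo</aside>`;
}

// Context first, then the component fetches run in parallel rather than one after another.
async function renderPage(headers: Record<string, string | undefined>): Promise<string> {
  const ctx = await getPageContext(headers);
  const sections = await Promise.all([renderRecommendations(ctx), renderPromoBanner(ctx)]);
  return sections.join("\n");
}
```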

2. Operational Visibility & Cost Control 💰

These rules ensure the application is observable, financially responsible, and resilient.

Caching Governance (Cost and Performance): The Business Contract is enforced by mandatory server-side and API caching policies. These policies, driven by the Data Freshness SLO, reduce backend service load (ensuring Fiscal Discipline) and minimize data latency (ensuring Technical Performance).

Tooling Example: A CI script that checks for the presence of appropriate `Cache-Control` headers on all server routes handling non-user-specific data.
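The sketch of that CI script below assumes the build can emit a hypothetical route manifest. For each server route, the manifest lists whether it is user-specific and what its response headers are. A route counts as compliant only if `Cache-Control` carries `max-age` or `s-maxage` and does not say `private` or `no-store`.

```ts
// Hypothetical manifest entry emitted by the build for each server route.
interface RouteManifestEntry {
  route: string;
  userSpecific: boolean; // personalised responses are exempt from shared caching
  headers: Record<string, string>;
}

// Returns the non-user-specific routes that lack a shareable caching policy.
function missingCacheHeaders(routes: RouteManifestEntry[]): string[] {
  return routes
    .filter((r) => !r.userSpecific)
    .filter((r) => {
      // Header names are case-insensitive, so look the header up by lowercased key.
      const value = Object.entries(r.headers).find(([k]) => k.toLowerCase() === "cache-control")?.[1] ?? "";
      const directives = value.toLowerCase();
      const hasMaxAge = /(^|,)\s*(s-maxage|max-age)=\d+/.test(directives);
      const forbidsSharing = /no-store|private/.test(directives);
      return !(hasMaxAge && !forbidsSharing);
    })
    .map((r) => r.route);
}
```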

Error Handling & Distributed Tracing Contract: All application code must import and use a standard, centrally defined error reporting utility and classification structure for consistent operational visibility. Furthermore, all components must propagate a mandatory `traceId` (e.g., OpenTelemetry context) across all internal and external requests to ensure end-to-end diagnosis is always possible.

Tooling Example: Mandatory use of a library like the Sentry SDK or Datadog RUM, with linter rules enforcing the use of the centralized utility function (e.g., `logError(error, context)`).

Runtime Observability Validation: The CI pipeline validates that every caught error adheres to the unified logging schema (e.g., it must contain `traceId`, `serviceName`, and `severity`), guaranteeing the integrity of the Runtime Efficacy Axis observability data.

Tooling Example: A custom pre-commit hook that verifies the structure of log objects passed to the designated logging utility.
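The core of such a hook can be sketched as a validator applied to sample log objects (for example, fixtures or objects captured in tests). The three required fields come from the text; the severity vocabulary and the `validateLogEntry` name are assumptions, and the trace-id check uses the W3C Trace Context format (32 lowercase hex characters) that OpenTelemetry trace ids also follow.

```typescript
const REQUIRED_FIELDS = ["traceId", "serviceName", "severity"];
const SEVERITIES = ["info", "warning", "error", "fatal"]; // assumed vocabulary
const TRACE_ID = /^[0-9a-f]{32}$/; // W3C Trace Context trace-id format

// Returns a list of schema problems; an empty list means the entry is valid.
function validateLogEntry(entry: Record<string, unknown>): string[] {
  const problems: string[] = [];
  for (const field of REQUIRED_FIELDS) {
    const value = entry[field];
    if (typeof value !== "string" || value === "") problems.push(`missing or empty "${field}"`);
  }
  const traceId = entry["traceId"];
  if (typeof traceId === "string" && traceId !== "" && !TRACE_ID.test(traceId)) {
    problems.push(`traceId "${traceId}" is not 32 lowercase hex characters`);
  }
  const severity = entry["severity"];
  if (typeof severity === "string" && severity !== "" && !SEVERITIES.includes(severity)) {
    problems.push(`unknown severity "${severity}"`);
  }
  return problems;
}

console.log(validateLogEntry({ traceId: "abc", severity: "loud" }));
```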

3. Release Quality Gates (I18N & Compatibility) 🛡️

These are mandatory checks integrated into the CI/CD pipeline to guarantee localization and browser support readiness.

Mandatory I18N and Browser Compatibility Gates: These checks enforce the Localization Strategy Document and the official Browserslist configuration (`.browserslistrc`).

Browser Support Tooling: Compilation tools like Babel and PostCSS must be configured to use the official `.browserslistrc` file. The CI/CD pipeline must execute a mandatory, targeted E2E smoke test suite (using Cypress or Playwright) against a matrix of contracted browsers. Any failure halts the release.

Translations CI Flow (Automated Localization): The localization workflow must be automated and integrated into the CI process.

Extraction: CI tooling automatically scans code for new translation keys (e.g., `t('new_key')`) upon every push to the main branch.

Linguistic Review Gate: Deployment to the Staging Environment is blocked until all new keys have been fetched from the Translation Management System (TMS) and marked as linguistically reviewed by the localization team. This prevents placeholder text or machine-translated artifacts from reaching internal users.
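Both steps can be sketched as two small functions, assuming the TMS export can be reduced to a per-key, per-locale review status. The regular expression only recognizes literal keys in `t('...')` or `t("...")` calls; dynamic keys would need a real parser. `extractKeys`, `stagingBlockers`, and the status names are hypothetical.

```typescript
// Finds literal keys in t('key') / t("key") calls; template literals and dynamic keys are ignored.
function extractKeys(source: string): string[] {
  const keys: string[] = [];
  const callPattern = /\bt\(\s*(['"])([\w.-]+)\1/g;
  let match: RegExpExecArray | null;
  while ((match = callPattern.exec(source)) !== null) {
    const key = match[2];
    if (key && !keys.includes(key)) keys.push(key);
  }
  return keys;
}

type ReviewStatus = "missing" | "machine_translated" | "reviewed";
type TmsSnapshot = Record<string, Record<string, ReviewStatus>>; // key -> locale -> status

// Every (key, locale) pair that is not linguistically reviewed blocks the Staging deploy.
function stagingBlockers(keys: string[], locales: string[], tms: TmsSnapshot): string[] {
  const blockers: string[] = [];
  for (const key of keys) {
    for (const locale of locales) {
      const status: ReviewStatus = tms[key]?.[locale] ?? "missing";
      if (status !== "reviewed") blockers.push(`${key} [${locale}]: ${status}`);
    }
  }
  return blockers;
}

const source = `
  const title = t('checkout.title');
  const cta = t("checkout.pay_now");
`;
const newKeys = extractKeys(source);
const blockers = stagingBlockers(newKeys, ["de", "fr"], {
  "checkout.title": { de: "reviewed", fr: "reviewed" },
  "checkout.pay_now": { de: "reviewed", fr: "machine_translated" },
});
if (blockers.length > 0) console.error("Staging blocked:\n" + blockers.join("\n"));
```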

2. Hyper-Cohesion: Monorepo Tools and Operational Layer Enforcement

The Monorepo structure enforces the operational and technical layers globally, ensuring that autonomy never compromises overall system health, financial discipline, or knowledge transfer.

These mandates are organized into three action-oriented sections: Operational Layer Enforcement (Cost and Knowledge), Technical Cohesion & Performance, and Mandatory Quality & Verification Gates.

2.1. Operational Layer Enforcement (Cost and Knowledge)

Resource Governance Check (Fiscal Discipline): CI integrates the Resource Governance Contract by failing the build if the projected cost of the test infrastructure for a specific target (e.g., E2E test suite) exceeds a hard, pre-approved monetary budget.

Tooling: Cloud Cost API Integration (e.g., integrating with AWS Cost Explorer or GCP Billing API), Custom CI Gateway (Checks cost delta before merging).
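Once a projection exists, the gate itself is a comparison. The sketch below assumes the projected monthly cost per CI target has already been computed (for example from historical billing data); it does not call AWS Cost Explorer or the GCP Billing API. `evaluateCost`, the target names, and the dollar figures are hypothetical.

```typescript
interface CostEstimate {
  target: string; // CI target, e.g. "e2e"
  projectedMonthlyUsd: number; // projection after this change
}

interface Budget {
  target: string;
  hardLimitUsd: number; // pre-approved ceiling from the Resource Governance Contract
}

interface CostVerdict {
  target: string;
  status: "ok" | "over_budget" | "no_budget";
  deltaUsd: number; // positive means over the limit
}

function evaluateCost(estimates: CostEstimate[], budgets: Budget[]): CostVerdict[] {
  return estimates.map((est): CostVerdict => {
    const budget = budgets.find((b) => b.target === est.target);
    // A target without an approved budget cannot be approved automatically.
    if (budget === undefined) {
      return { target: est.target, status: "no_budget", deltaUsd: est.projectedMonthlyUsd };
    }
    const deltaUsd = est.projectedMonthlyUsd - budget.hardLimitUsd;
    return { target: est.target, status: deltaUsd > 0 ? "over_budget" : "ok", deltaUsd };
  });
}

const verdicts = evaluateCost(
  [
    { target: "e2e", projectedMonthlyUsd: 1200 },
    { target: "unit", projectedMonthlyUsd: 80 },
    { target: "visual", projectedMonthlyUsd: 50 },
  ],
  [
    { target: "e2e", hardLimitUsd: 1000 },
    { target: "unit", hardLimitUsd: 150 },
  ],
);
// The CI gateway would exit non-zero when `failing` is non-empty.
const failing = verdicts.filter((v) => v.status !== "ok");
```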

TTL Governance (Debt Removal & Innovation Control): The Monorepo tooling actively scans the dependency graph for expired Experimental and Adapter packages and flags them for the Sunset phase.

Enforcement of the Experimental Contract: The Experimental contract package, created for a time-boxed POC, has a mandatory TTL (e.g., 90 days). Once the build system detects that the TTL has passed, it flags the build with a warning, forcing the team to either formally promote the POC code to a permanent, governed package (Definition Phase) or delete it entirely (Sunset Phase). This prevents Shadow IT from becoming permanent debt.

Tooling: Monorepo Task Runners (Nx/Turborepo) (used to define and manage package metadata), Custom CI Script (scans the package dependency graph and warns if TTL metadata is expired).
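A sketch of the scan, assuming each package records its kind, creation date, and TTL in its metadata (for example a custom field in `package.json`; the field names here are hypothetical). It only collects warnings, matching the warn-level enforcement described for the Experimental Contract; turning them into a hard failure is a policy switch.

```typescript
interface PackageMeta {
  name: string;
  kind: "experimental" | "adapter" | "core";
  createdAt: string; // ISO date, e.g. "2024-01-15"
  ttlDays?: number; // only time-boxed packages carry a TTL
}

const DAY_MS = 24 * 60 * 60 * 1000;

function ttlWarnings(packages: PackageMeta[], today: Date): string[] {
  const warnings: string[] = [];
  for (const pkg of packages) {
    if (pkg.kind === "core" || pkg.ttlDays === undefined) continue;
    const expiresAt = Date.parse(pkg.createdAt) + pkg.ttlDays * DAY_MS;
    if (today.getTime() > expiresAt) {
      warnings.push(`${pkg.name} (${pkg.kind}) exceeded its ${pkg.ttlDays}-day TTL: promote it or delete it`);
    }
  }
  return warnings;
}

const warnings = ttlWarnings(
  [
    { name: "poc-ai-search", kind: "experimental", createdAt: "2024-01-01", ttlDays: 90 },
    { name: "legacy-orders-adapter", kind: "adapter", createdAt: "2024-05-01", ttlDays: 180 },
    { name: "design-system", kind: "core", createdAt: "2022-03-01" },
  ],
  new Date("2024-06-01T00:00:00Z"),
);
warnings.forEach((w) => console.warn(w));
```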

Knowledge Contract (ADR Enforcement): The CI enforces the linkage between architectural change and documentation for high-impact files.

Tooling:

Custom CI Script: Detects modifications to ‘High-Impact Files’ (e.g., core config, schemas).

Git Webhook/CI Check: Fails the PR unless it includes a new ADR file OR references an existing ADR number in the commit message.

Custom Linter/Husky Hook: Mandates that the affected package’s local `ARCHITECTURE.md` file is updated with a direct link to the new ADR for local discoverability.
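The Git Webhook/CI check can be sketched as a pure function over the PR's changed files and commit message. The high-impact path patterns, the `docs/adr/NNNN-title.md` layout, and the `ADR-NNNN` reference format are assumptions for illustration, and the `ARCHITECTURE.md` hook is not covered here.

```typescript
// Hypothetical high-impact paths; a real list would live in a reviewed config file.
const HIGH_IMPACT = [/^nx\.json$/, /^turbo\.json$/, /^tsconfig\.base\.json$/, /^packages\/schemas\//];
const ADR_FILE = /^docs\/adr\/\d{4}-[\w-]+\.md$/; // assumed ADR location and naming
const ADR_REFERENCE = /\bADR-\d{4}\b/; // assumed commit-message reference format

interface AdrCheckResult {
  ok: boolean;
  reason: string;
}

function adrCheck(changedFiles: string[], commitMessage: string): AdrCheckResult {
  const highImpact = changedFiles.filter((file) => HIGH_IMPACT.some((pattern) => pattern.test(file)));
  if (highImpact.length === 0) return { ok: true, reason: "no high-impact files changed" };
  // A real check would also confirm the ADR file is newly added rather than edited.
  if (changedFiles.some((file) => ADR_FILE.test(file))) return { ok: true, reason: "ADR file included" };
  if (ADR_REFERENCE.test(commitMessage)) return { ok: true, reason: "existing ADR referenced" };
  return { ok: false, reason: `high-impact change without an ADR: ${highImpact.join(", ")}` };
}

const result = adrCheck(["packages/schemas/order.ts", "apps/web/page.tsx"], "feat: add delivery window");
if (!result.ok) console.error(result.reason);
```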

2.2. Technical Cohesion & Performance ⚙️

These rules govern how code is structured, imported, and built within the Monorepo to optimize speed and resource usage.

CI Dispatch Contract (Monorepo-Driven Autonomy): The CI system must be surgically smart; a change to a feature package should only trigger builds and tests for packages truly affected by that change, granting deployment independence to every feature team.

Tooling: Monorepo Task Runners (Nx/Turborepo) with Affected Graph Analysis.
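Nx (`nx affected`) and Turborepo (`--filter` with a Git range) compute this from their own project graphs. The underlying idea can be sketched as a reverse-dependency walk: start from the changed packages and collect everything that transitively depends on them. The graph and package names below are hypothetical.

```typescript
// Package -> packages it depends on (a tiny stand-in for a real project graph).
type DepGraph = Record<string, string[]>;

function affectedPackages(graph: DepGraph, changed: string[]): string[] {
  // Invert the edges: for each package, which packages depend on it?
  const dependents: Record<string, string[]> = {};
  for (const [pkg, deps] of Object.entries(graph)) {
    for (const dep of deps) {
      (dependents[dep] ??= []).push(pkg);
    }
  }
  // Breadth-first walk from the changed packages through their dependents.
  const affected = new Set<string>(changed);
  const queue = [...changed];
  while (queue.length > 0) {
    const current = queue.shift();
    if (current === undefined) break;
    for (const dependent of dependents[current] ?? []) {
      if (!affected.has(dependent)) {
        affected.add(dependent);
        queue.push(dependent);
      }
    }
  }
  return Array.from(affected).sort();
}

const graph: DepGraph = {
  "app-checkout": ["ui-kit", "data-orders"],
  "app-images": ["ui-kit"],
  "data-orders": ["schemas"],
  "ui-kit": [],
  schemas: [],
};
console.log(affectedPackages(graph, ["data-orders"]));
```

A change to `data-orders` rebuilds only itself and the checkout app, leaving `app-images` untouched, which is the deployment independence the contract asks for.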

Tooling SLOs Enforcement: CI pipelines automatically monitor and alert when the total build or test time exceeds the contracted threshold, strictly enforcing performance Service Level Objectives (SLOs).

Dependency Cohesion Guard: CI tooling enforces the Dependency Cohesion Contract by failing the build if a package introduces redundant third-party libraries or imports large, monolithic internal packages when a lighter alternative exists. This governs execution cost and code cleanliness.

Tooling: Custom ESLint/TSLint Rules, Dependency Constraint Tools (e.g., Dep-Cruiser/madge).
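Tools like dependency-cruiser and madge analyze the import graph; the policy layer on top can be as simple as the sketch below, which flags imports that have a preferred lighter alternative and third-party libraries declared at different versions across packages. The `PREFERRED` map (including `moment` to `date-fns` and the `@acme/*` names) is a hypothetical policy, not a rule shipped by either tool.

```typescript
// Hypothetical policy: heavyweight module -> preferred lighter alternative.
const PREFERRED = new Map<string, string>([
  ["moment", "date-fns"],
  ["@acme/legacy-utils", "@acme/utils"],
]);

interface WorkspacePackage {
  name: string;
  dependencies: Record<string, string>; // library -> version range from package.json
  imports: string[]; // module specifiers found in the package's source
}

function cohesionViolations(packages: WorkspacePackage[]): string[] {
  const violations: string[] = [];
  const rangesByLibrary = new Map<string, Set<string>>();
  for (const pkg of packages) {
    for (const specifier of pkg.imports) {
      const alternative = PREFERRED.get(specifier);
      if (alternative !== undefined) {
        violations.push(`${pkg.name}: import "${alternative}" instead of "${specifier}"`);
      }
    }
    for (const [library, range] of Object.entries(pkg.dependencies)) {
      const ranges = rangesByLibrary.get(library) ?? new Set<string>();
      ranges.add(range);
      rangesByLibrary.set(library, ranges);
    }
  }
  // Different declared ranges for one library can lead to duplicate copies being installed and bundled.
  rangesByLibrary.forEach((ranges, library) => {
    if (ranges.size > 1) {
      violations.push(`${library}: ${ranges.size} versions declared (${Array.from(ranges).sort().join(", ")})`);
    }
  });
  return violations;
}

const report = cohesionViolations([
  { name: "app-checkout", dependencies: { react: "^18.2.0", moment: "^2.30.1" }, imports: ["react", "moment"] },
  { name: "app-images", dependencies: { react: "^18.3.0" }, imports: ["react"] },
]);
report.forEach((line) => console.error(line));
```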

2.3. Mandatory Quality & Verification Gates 🛡️

These mandates define the necessary testing tiers and compliance standards required for merging and release.

CI Pipeline Integration (Quality & Verification): The Continuous Integration (CI) pipeline must integrate specialized tooling to enforce all aspects of the Quality & Verification contract, including the generation and validation of required coverage reports and visual snapshots.

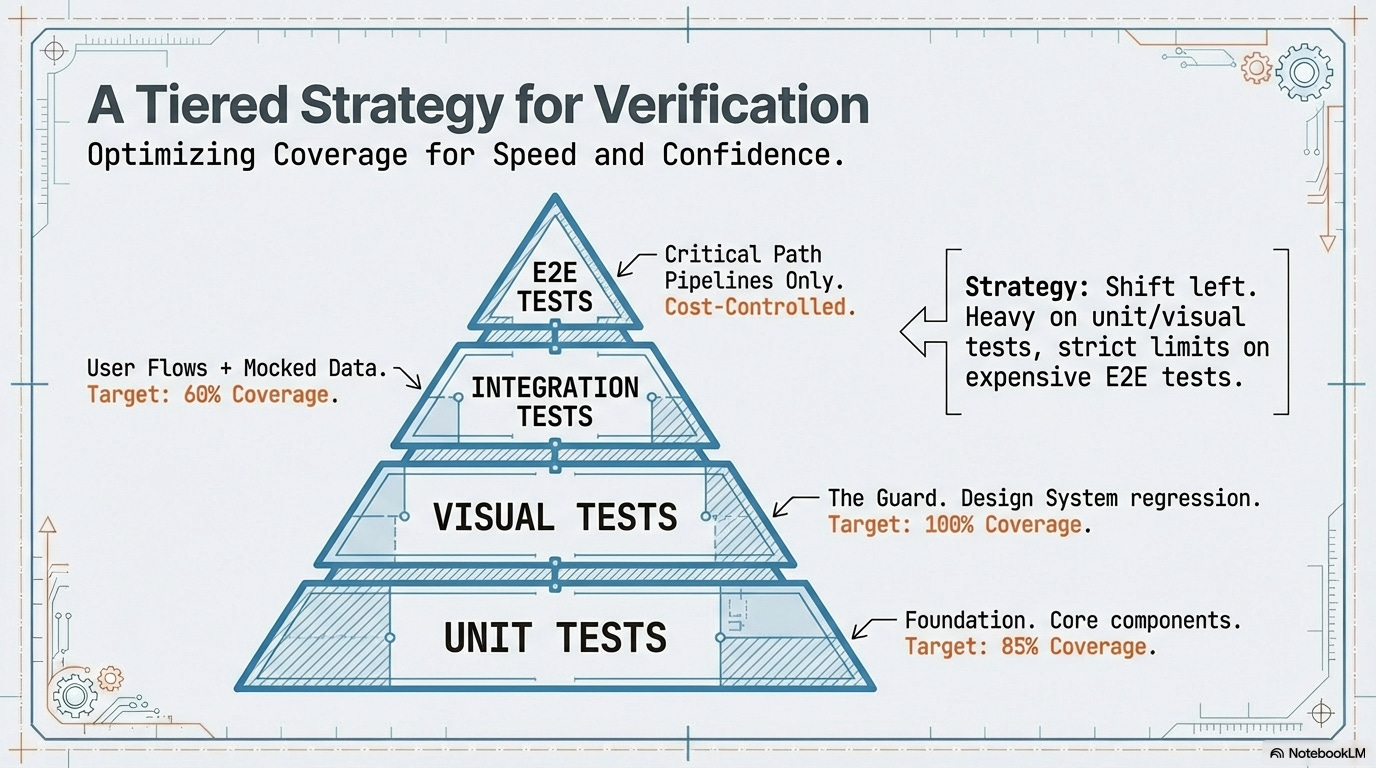

A. Tiered Testing Strategy

All development work must adhere to the following tiers, ensuring coverage is concentrated at the fastest, most isolated levels:

Unit Tests (Foundation):

Mandate: Mandatory for all core components, utility functions, and custom hooks.

Goal: Ensures business logic and isolated component rendering fidelity are stable.

Tooling: Jest/Vitest for execution, React Testing Library (RTL).

Visual and UI Tests (The Bulk):

Mandate: Automated visual regression tools are mandatory for the Design System components and all major page templates.

Goal: Ensures adherence to the UI Contract and catches unintentional stylistic or layout changes across browsers and devices.

Tooling: Chromatic or Percy (or equivalent visual regression tools).

Integration Tests (Flows):

Mandate: Focus on mocking entire user journeys and navigation paths between pages.

Goal: Ensures the integration logic (e.g., state management, event handlers) works correctly, utilizing the mocked schema defined by the Data Contract without reliance on a live backend.

Tooling: Jest/Vitest/RTL, Playwright.

E2E Limitation (Critical Path Pipelines):

Focus: E2E tests must be executed sparingly and limited to the Critical Path Pipelines (CPPs) only (revenue-driving or multi-system workflows).

Tooling SLO: The running time of the E2E suite must be explicitly budgeted within the Tooling Performance Contract SLO, preventing testing costs from growing linearly with feature count.

Tooling: Cypress or Playwright, strictly limited to CPPs.

B. Enforcement and Governance

Definition of Done (DoD) Compliance: Every pull request (PR) must include mandatory artifacts to prove contract compliance before merging. Failure to meet these requirements results in automatic CI failure and PR blocking.

Mandatory Test Coverage Thresholds: The following minimum coverage percentages are required across all Hypercube repositories:

Unit Test Coverage: 85%, reached through a gradual ramp-up.

Integration Test Coverage: 60%, reached through a gradual ramp-up.

Visual Test Coverage: 100% coverage for all Design System components and page layout templates.
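Test runners support fixed floors (for example Jest's `coverageThreshold`), but a gradual ramp toward 85% or 60% needs a moving floor. One common reading of "gradually" is a coverage ratchet, sketched below as an assumption: a PR may not drop below the recorded baseline, and the baseline only rises until it reaches the contracted target. `checkCoverage` and the baseline storage are hypothetical.

```typescript
interface RatchetResult {
  pass: boolean;
  required: number; // floor applied to this PR, in percent
  nextBaseline: number; // baseline to record if the PR merges
}

// Coverage ratchet: never fall below the recorded baseline; the baseline only rises, capped at the target.
function checkCoverage(actual: number, baseline: number, target: number): RatchetResult {
  const required = Math.min(baseline, target);
  const pass = actual >= required;
  const nextBaseline = pass ? Math.min(Math.max(baseline, actual), target) : baseline;
  return { pass, required, nextBaseline };
}

// Unit tests ramping toward 85%: the recorded baseline is 71%, this PR measures 72.4%.
console.log(checkCoverage(72.4, 71, 85)); // pass: true, required: 71, nextBaseline: 72.4
```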

Coupling with Runtime Efficacy: This contract is coupled with mandatory Service Level Objectives (SLOs) to ensure system stability:

Release Candidate SLO: 99.9% test pass rate required in the staging environment before promotion to production.

Defect Management SLOs: Adherence to predefined metrics for defect resolution time (MTTR) and re-occurrence rates.

3. Continuous Delivery (CD) Enforcement Mechanisms

The CD enforcement mechanisms ensure that the artifact resulting from CI is promoted through environments (staging, production) in a safe, controlled, and autonomous manner, adhering to the blast radius constraints defined in the Infrastructure Contract (3.4) and the quality requirements of the Quality & Verification Contract (3.5).

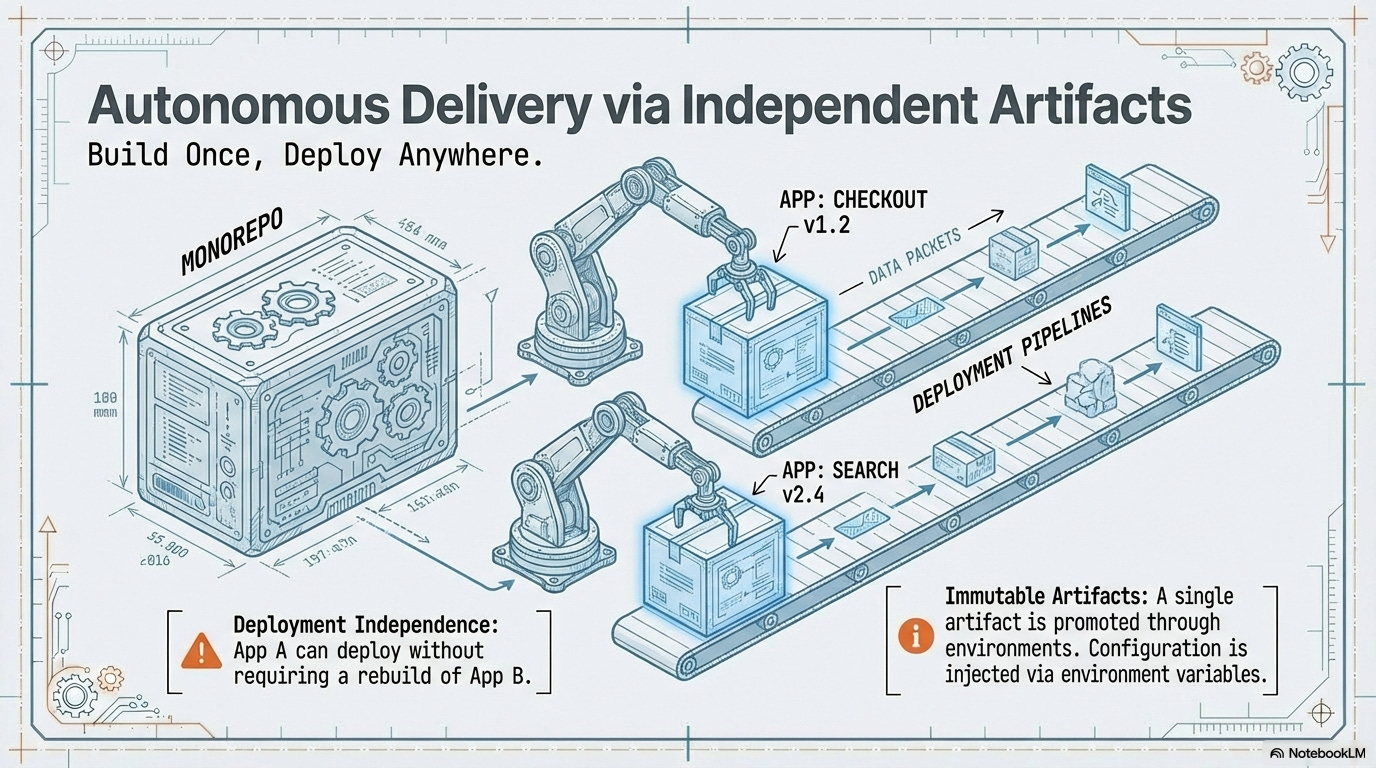

1. Artifact Preparation and Deployment Independence (Autonomy) 📦

This phase ensures feature teams have autonomy by leveraging the Monorepo’s intelligence to build and deploy only the code that has changed.

Deployment Independence via Artifacts: The Monorepo, through its graph analysis capabilities, serves as the mechanism for achieving organizational autonomy without sacrificing technical cohesion. It ensures that a change to one feature (e.g., App A: Checkout Flow) does not require rebuilding or redeploying an unrelated feature (e.g., App B: Image Service).

Detailed Steps for Deployment Independence:

Identify Affected Projects: The Monorepo task runner (e.g., Nx, Turborepo) uses its Affected Graph Analysis to precisely determine which application packages have changed since the last successful deployment.

Isolated Build: Execute the dedicated production build target only for each identified affected application.

Artifact Generation: A unique, versioned deployment artifact (e.g., a Docker image, Next.js build folder) is created for only that application.

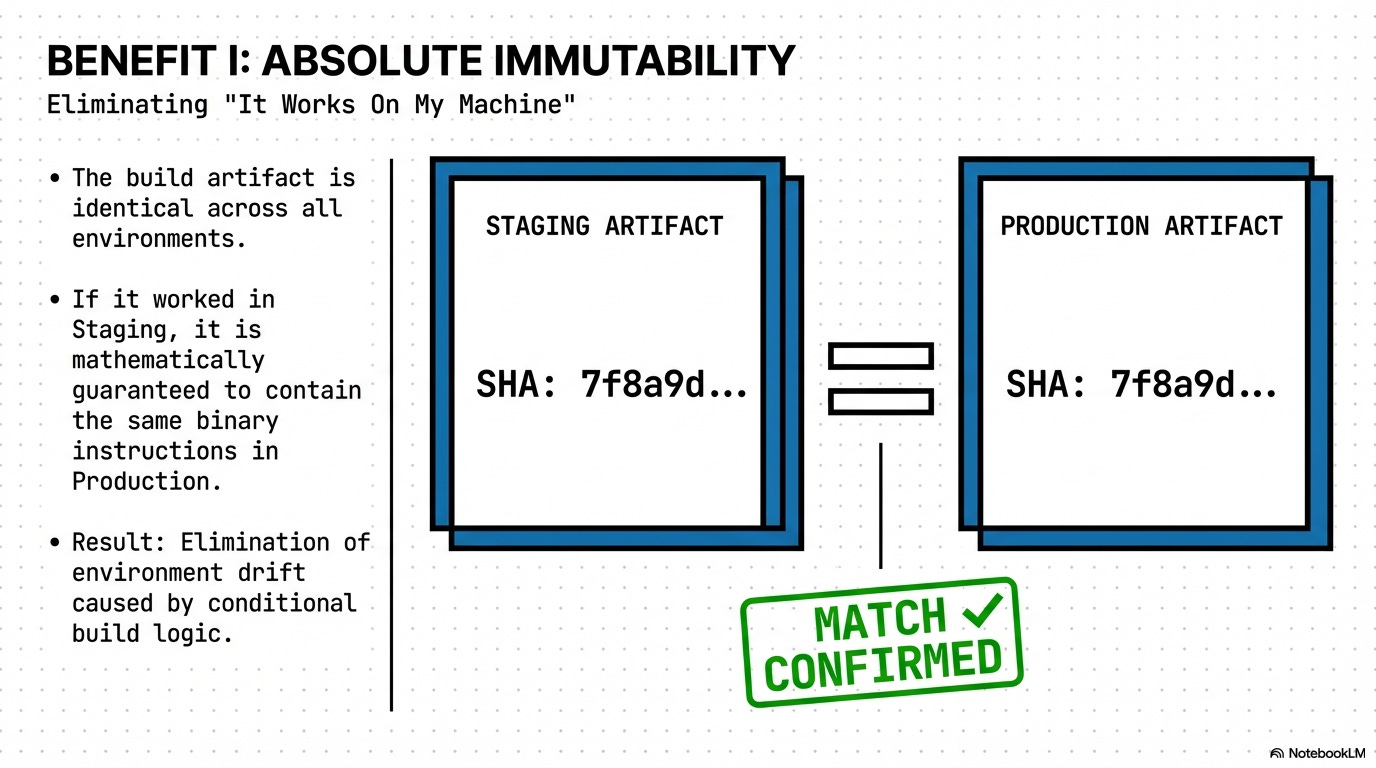

Artifact Upload: The artifact is uploaded to a central Artifact Registry, ensuring the principle of a single, immutable artifact that moves across all environments.

2. Release Gating and SLO Check (The Safety Net) 🚦

This phase provides a hard, data-driven barrier against regressions by validating the quality of the deployed artifact in staging before it reaches production.

Release Gating & SLO Check: Before any affected artifact is promoted from staging to production, the CD pipeline must poll the results of the Quality & Verification Contract.

Enforcement Example: A Feature Team deploys `app-checkout:v1.2.3` to Staging. The deployment pauses and waits for post-deployment verification checks (smoke tests, E2E critical path tests) to run.

Detailed Steps for Release Gating:

Staging Promotion: The identified artifact is initially deployed to the isolated staging environment for active verification.

Wait for Verification: The CD pipeline pauses and monitors the status of post-deployment verification checks (smoke tests, E2E Critical Path tests).

SLO Evaluation: The pipeline retrieves the `TEST_PASS_RATE` metric from the integrated test result APIs.

Hard Gate Enforcement:

Success: If the `TEST_PASS_RATE` meets or exceeds the Release Candidate SLO (99.9%), the artifact is automatically promoted to production.

Failure: If the `TEST_PASS_RATE` falls below the SLO, the CD pipeline is automatically halted and an urgent P1 alert is sent, preventing the unstable artifact from reaching users.
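A minimal sketch of the hard gate, assuming the CD pipeline has already collected pass/fail counts from the test result APIs. The 99.9% threshold comes from the Release Candidate SLO; `releaseGate` and the suite names are hypothetical, and an empty test report is deliberately treated as a failure.

```typescript
interface TestRun {
  suite: string;
  passed: number;
  failed: number;
}

type GateDecision =
  | { action: "promote"; passRate: number }
  | { action: "halt"; passRate: number; alert: "P1" };

const RELEASE_CANDIDATE_SLO = 0.999; // 99.9% test pass rate

function releaseGate(runs: TestRun[], slo: number = RELEASE_CANDIDATE_SLO): GateDecision {
  const passed = runs.reduce((sum, run) => sum + run.passed, 0);
  const total = runs.reduce((sum, run) => sum + run.passed + run.failed, 0);
  // An empty report must never promote an artifact, so it counts as a 0% pass rate.
  const passRate = total === 0 ? 0 : passed / total;
  return passRate >= slo ? { action: "promote", passRate } : { action: "halt", passRate, alert: "P1" };
}

const decision = releaseGate([
  { suite: "smoke", passed: 120, failed: 0 },
  { suite: "e2e-critical-path", passed: 48, failed: 0 },
]);
console.log(decision.action); // promote
```

With a `>=` comparison, exactly 99.9% (999 of 1,000 tests) promotes, while 998 of 1,000 halts.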

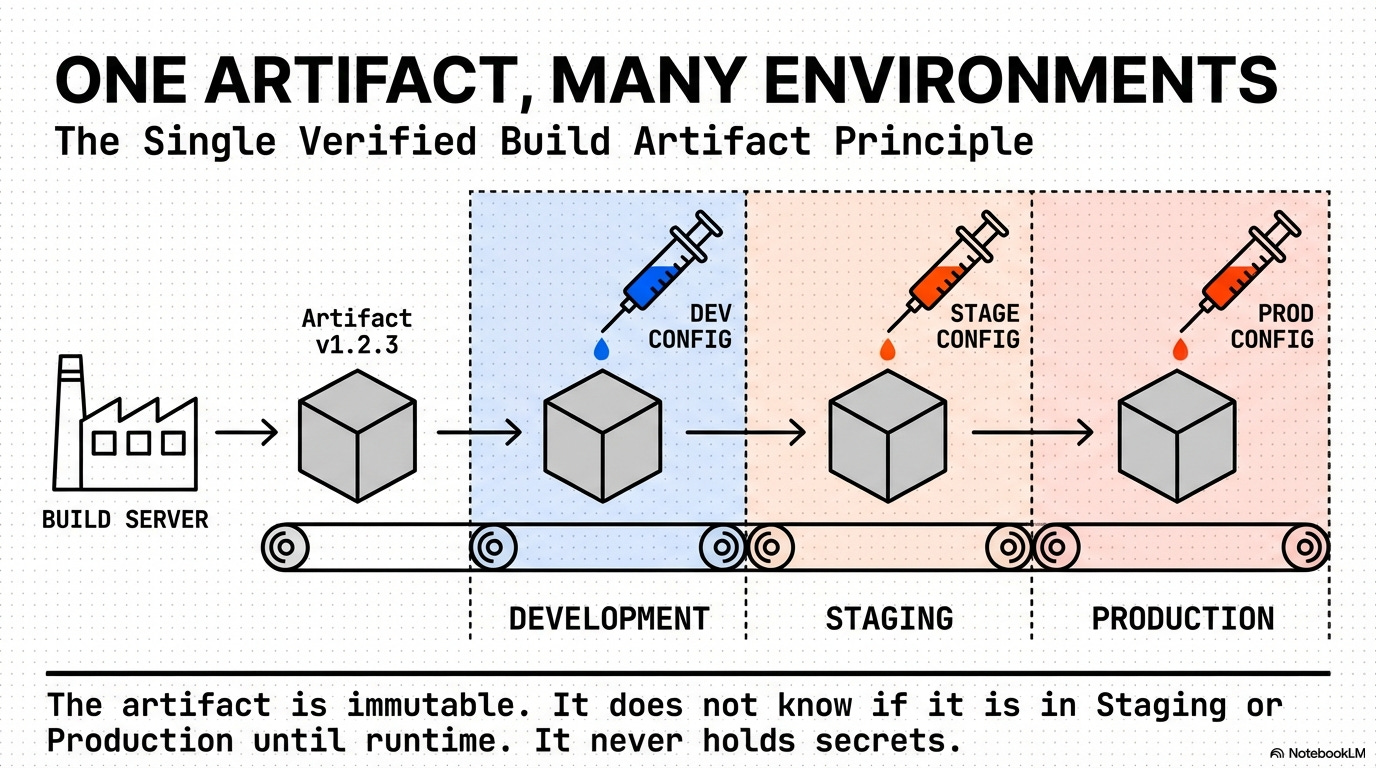

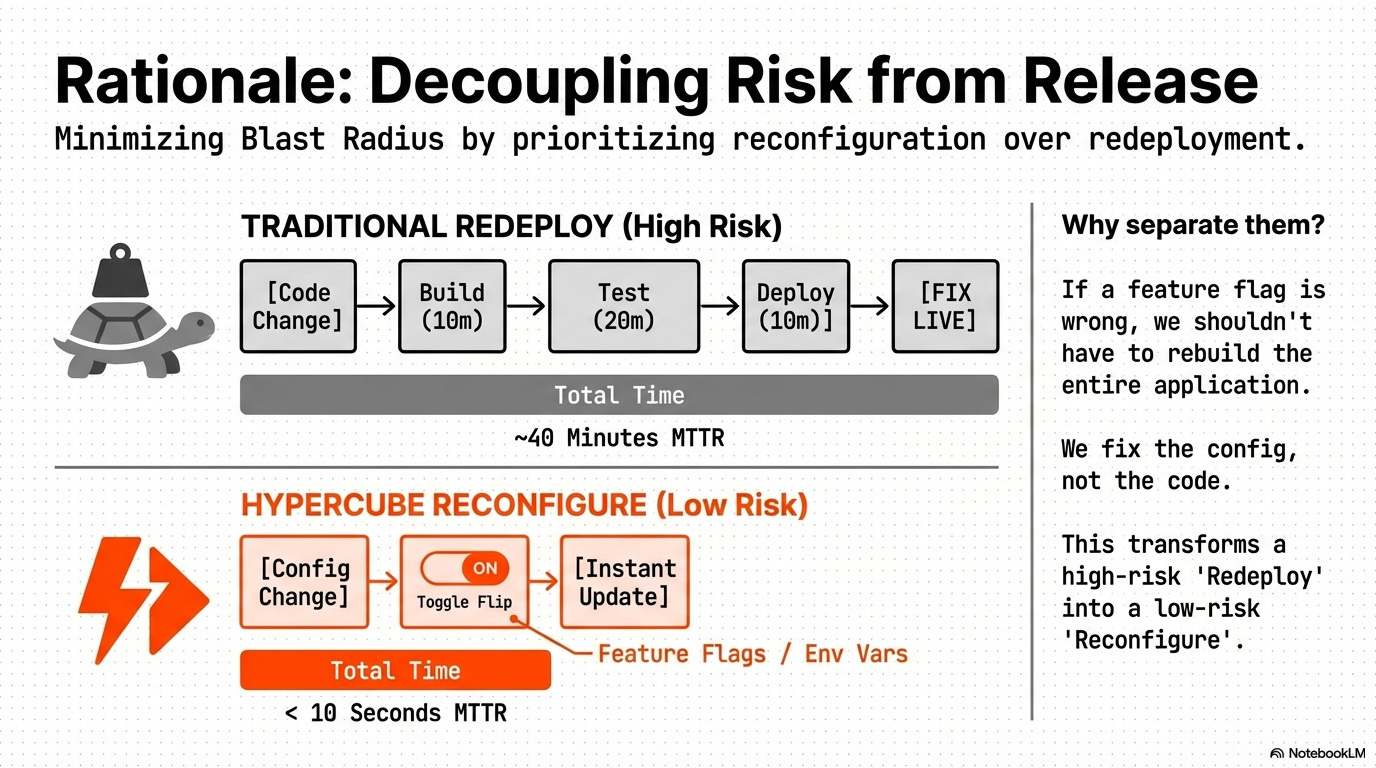

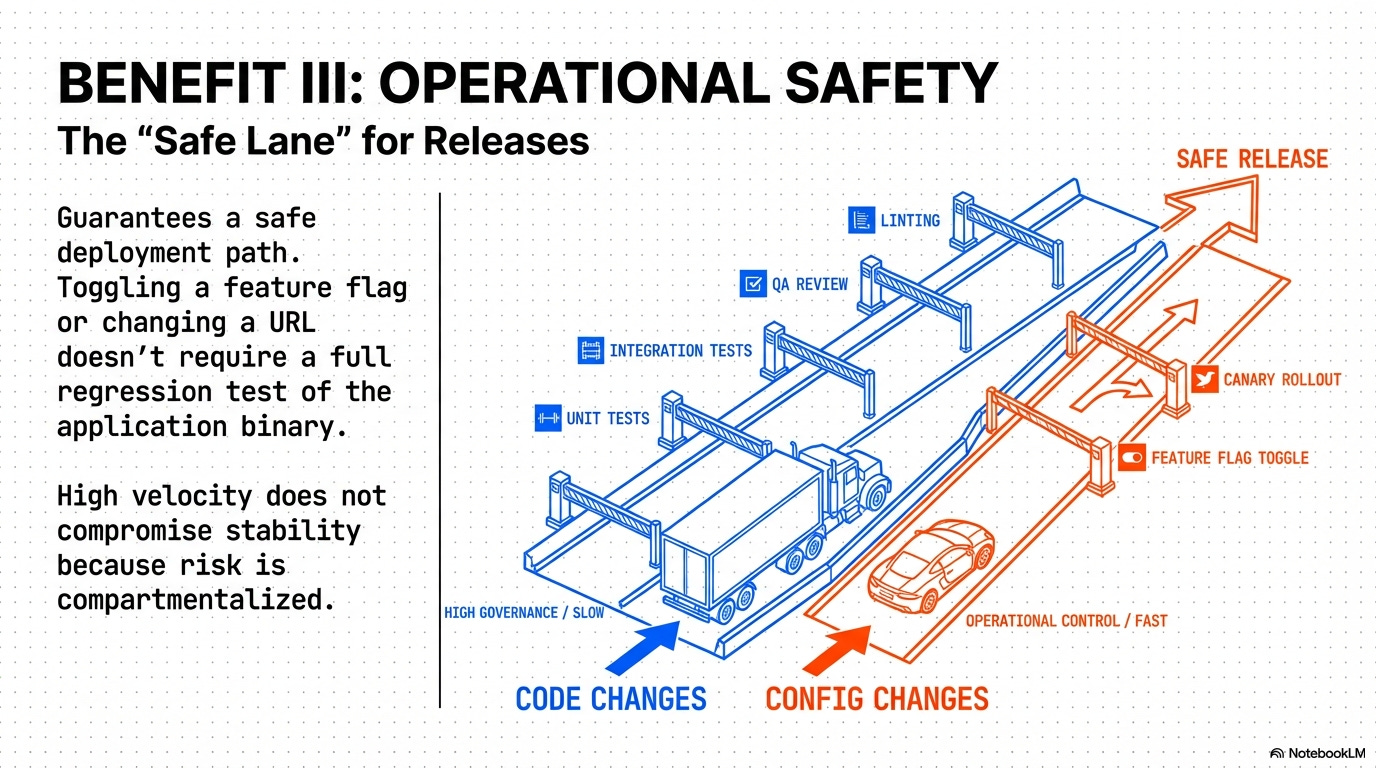

3. Configuration Management and Blast Radius Control 🛡️

This rule enforces the separation of configuration from the code artifact, minimizing the blast radius of runtime changes.

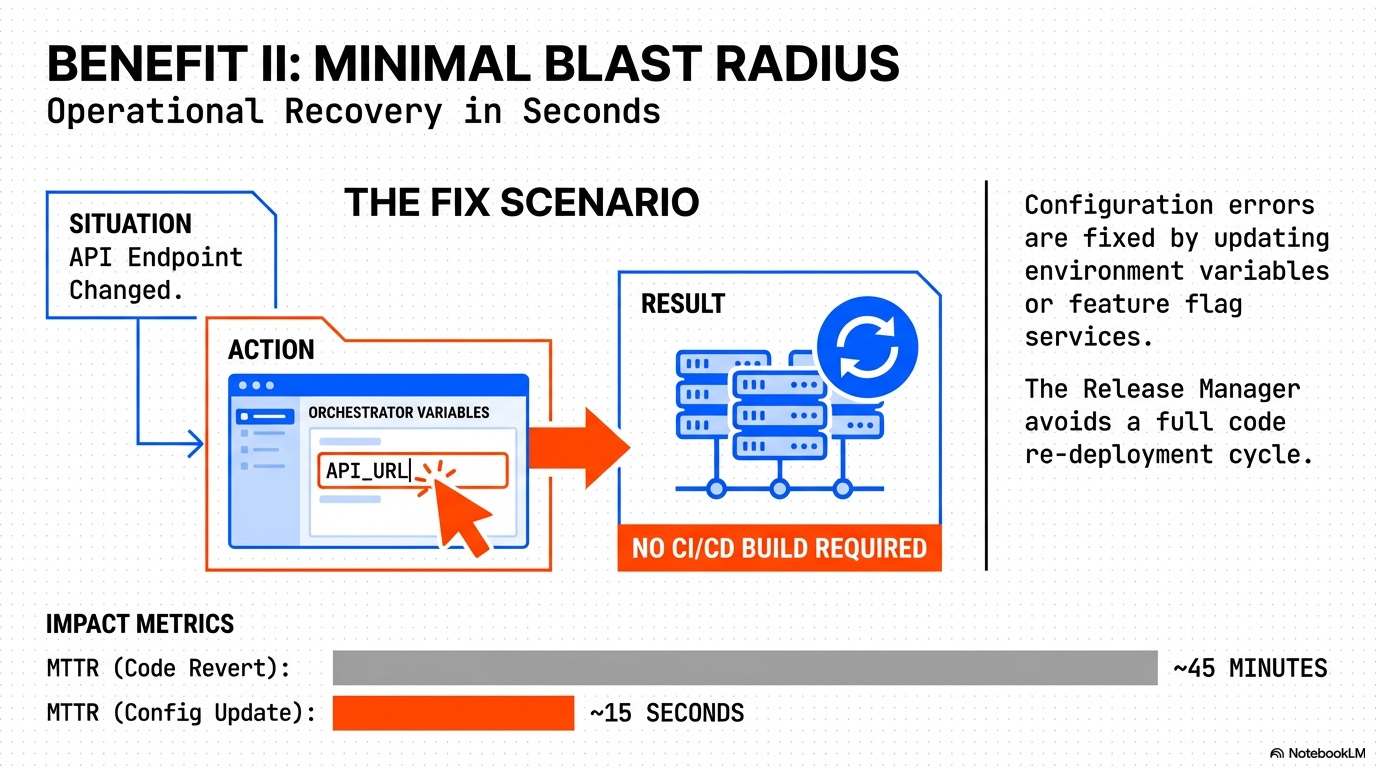

The rule separates build configuration from runtime configuration, ensuring that a single, verified build artifact can be deployed to development, staging, and production environments without modification.

Rationale: This minimizes the deployment blast radius because a configuration error is fixed via a separate, low-impact deployment step (e.g., updating a Feature Flag service), avoiding a full code redeployment.

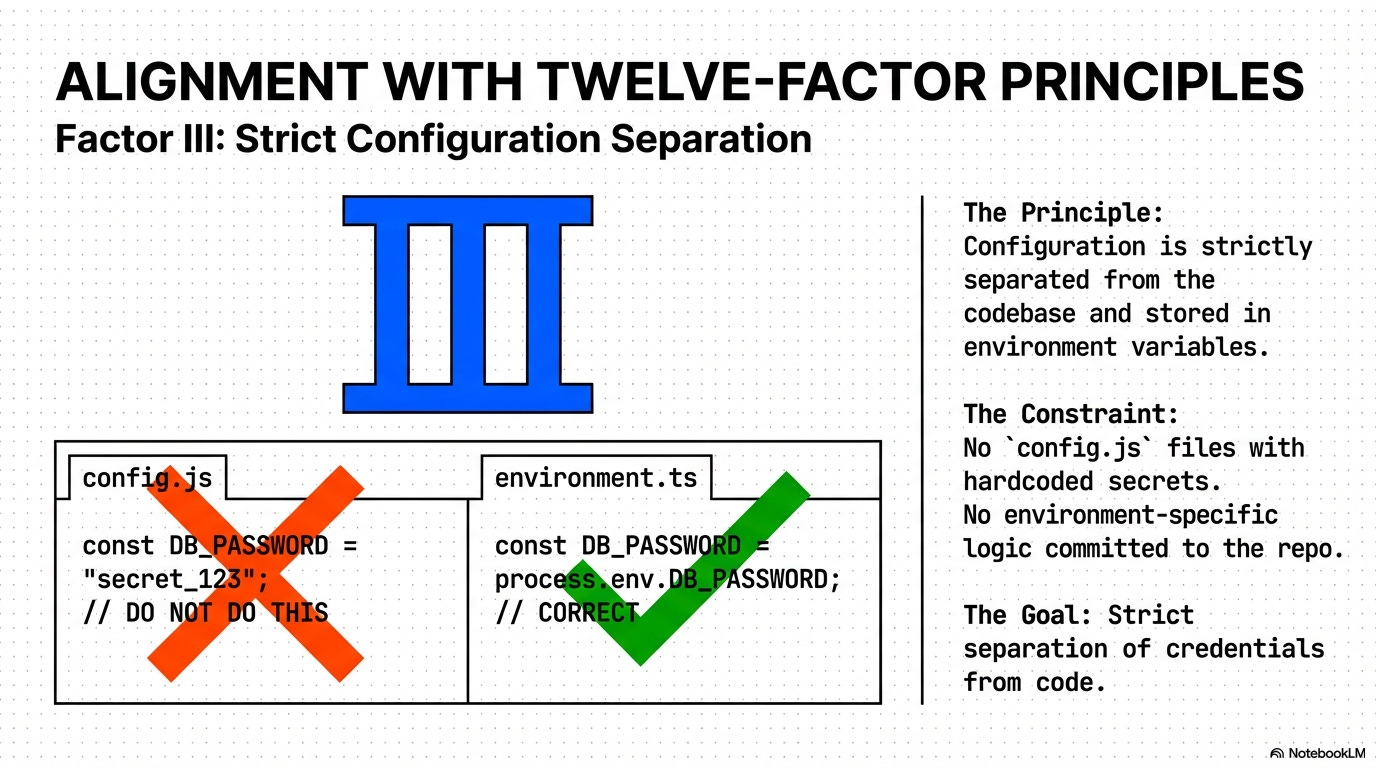

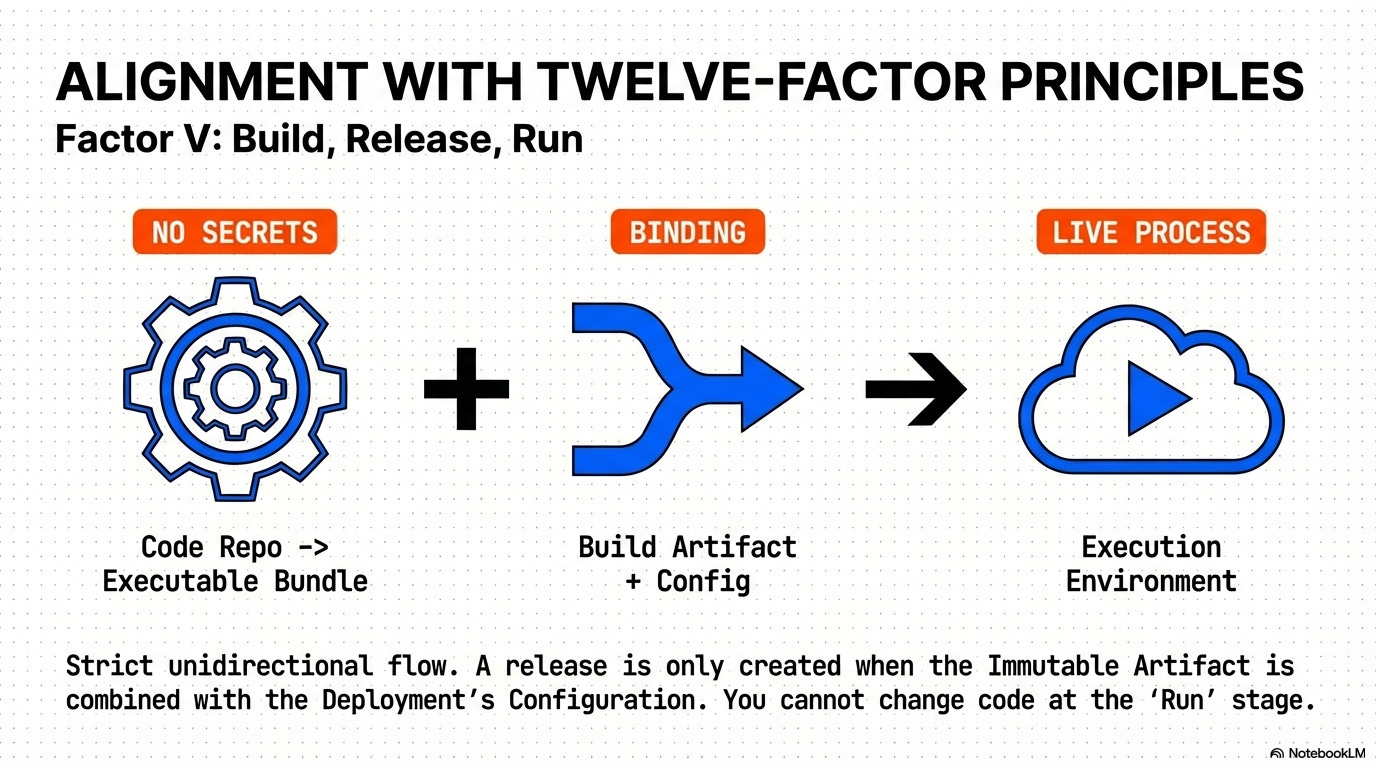

Enforcement of Twelve-Factor App Principles: The Infrastructure Contract directly enforces these core principles:

Factor III: Config: Configuration is strictly separated from the codebase and stored in environment variables or external services.

Factor V: Build, Release, Run: A single, immutable build artifact is created once and moved across environments (Build → Release → Run), never holding environment-specific configuration or secrets.

Resulting Benefits:

Immutability: The build artifact is identical across all environments.

Low Blast Radius: Configuration errors can be fixed by updating environment variables/services, allowing the Release Manager to avoid a full code re-deployment.

Safety: Guarantees a safe and low-blast-radius deployment path for configuration changes.

Tooling: Environment Variables, Feature Flag Services (LaunchDarkly, Split.io), Configuration as Code (Terraform, Pulumi).
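Factor III in practice means the artifact reads its configuration when it starts, so the same build runs everywhere and a bad value is fixed by changing the environment. A minimal sketch, assuming three hypothetical variables (`API_BASE_URL`, `FEATURE_FLAG_KEY`, `APP_ENV`); in Node.js you would pass `process.env` to `loadRuntimeConfig`.

```typescript
interface RuntimeConfig {
  apiBaseUrl: string;
  featureFlagKey: string;
  environment: "development" | "staging" | "production";
}

const REQUIRED_VARS = ["API_BASE_URL", "FEATURE_FLAG_KEY", "APP_ENV"];
const ENVIRONMENTS = ["development", "staging", "production"] as const;

// Reads configuration at startup instead of baking it into the build artifact.
function loadRuntimeConfig(env: Record<string, string | undefined>): RuntimeConfig {
  const read = (name: string): string => env[name] ?? "";
  const missing = REQUIRED_VARS.filter((name) => read(name) === "");
  if (missing.length > 0) {
    // Fail fast at boot so a misconfigured environment never serves traffic.
    throw new Error(`Missing runtime configuration: ${missing.join(", ")}`);
  }
  const environment = ENVIRONMENTS.find((e) => e === read("APP_ENV"));
  if (environment === undefined) {
    throw new Error(`Unknown APP_ENV "${read("APP_ENV")}"`);
  }
  return { apiBaseUrl: read("API_BASE_URL"), featureFlagKey: read("FEATURE_FLAG_KEY"), environment };
}

// The same artifact in two environments: only the injected variables differ.
const staging = loadRuntimeConfig({
  API_BASE_URL: "https://staging.example.com",
  FEATURE_FLAG_KEY: "sdk-staging",
  APP_ENV: "staging",
});
const production = loadRuntimeConfig({
  API_BASE_URL: "https://api.example.com",
  FEATURE_FLAG_KEY: "sdk-prod",
  APP_ENV: "production",
});
```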

Chapter 4: Deployment Strategy Enforcement: Governing the Strangler Migration

This chapter mandates the safe, controlled, and data-driven process for large-scale transitions using the Strangler Pattern, enforced by the Edge Gateway.

4.1. The Gateway as Authority: Migration Map Governance

Infrastructure Contract: Governing the Gateway and Migration Map: The contract must include a manifest—the Migration Map—that explicitly controls the Edge Routing Gateway’s configuration (URL Path, Target Artifact, Traffic Status).

| URL Path | Target Deployment Artifact | Infrastructure Status | Migration Status |
| --- | --- | --- | --- |
| /home | new-app-v2.3.4 (from Monorepo) | 100% Traffic | STRANGLED (New Code is Live) |
| /settings | legacy-monolith-v1.0.0 | See Segmentation Policy | IN PROGRESS (A/B testing running) |

Enforcement: The build system (CI) must ensure the Gateway’s configuration artifact (e.g., Terraform or Pulumi file) reflects the approved state of the Migration Map. Changes to this map are high-risk and require special sign-off.
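The Migration Map can live in the repository as a typed manifest that CI validates before rendering it into the Gateway's Terraform or Pulumi input. A minimal sketch, assuming two traffic policies that mirror the table ("100% Traffic" and "See Segmentation Policy") and a semantic-version suffix on every artifact; the field names and rules are hypothetical.

```typescript
interface MigrationRoute {
  path: string;
  targetArtifact: string; // e.g. "new-app-v2.3.4"
  trafficPolicy: "ALL" | "SEGMENTED"; // "100% Traffic" vs "See Segmentation Policy"
  status: "STRANGLED" | "IN_PROGRESS";
}

const PINNED_VERSION = /-v\d+\.\d+\.\d+$/; // artifacts must be pinned, never "latest"

function validateMigrationMap(routes: MigrationRoute[]): string[] {
  const errors: string[] = [];
  const seen = new Set<string>();
  for (const route of routes) {
    if (seen.has(route.path)) errors.push(`${route.path}: duplicate entry`);
    seen.add(route.path);
    if (!PINNED_VERSION.test(route.targetArtifact)) {
      errors.push(`${route.path}: artifact "${route.targetArtifact}" is not version-pinned`);
    }
    // Status and routing must agree: a strangled path serves all traffic from its target.
    if (route.status === "STRANGLED" && route.trafficPolicy !== "ALL") {
      errors.push(`${route.path}: STRANGLED routes must send 100% of traffic to the target`);
    }
    if (route.status === "IN_PROGRESS" && route.trafficPolicy !== "SEGMENTED") {
      errors.push(`${route.path}: IN_PROGRESS routes must reference a segmentation policy`);
    }
  }
  return errors;
}

const migrationMap: MigrationRoute[] = [
  { path: "/home", targetArtifact: "new-app-v2.3.4", trafficPolicy: "ALL", status: "STRANGLED" },
  { path: "/settings", targetArtifact: "legacy-monolith-v1.0.0", trafficPolicy: "SEGMENTED", status: "IN_PROGRESS" },
];
console.log(validateMigrationMap(migrationMap));
```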

4.2. User Segmentation and DORA-Driven Rollout

Segmentation Criteria (Business Contract): Defines the exact rules for dividing users based on business goals, technical risk, or feature targeting (e.g., Canary users, Geo-location).

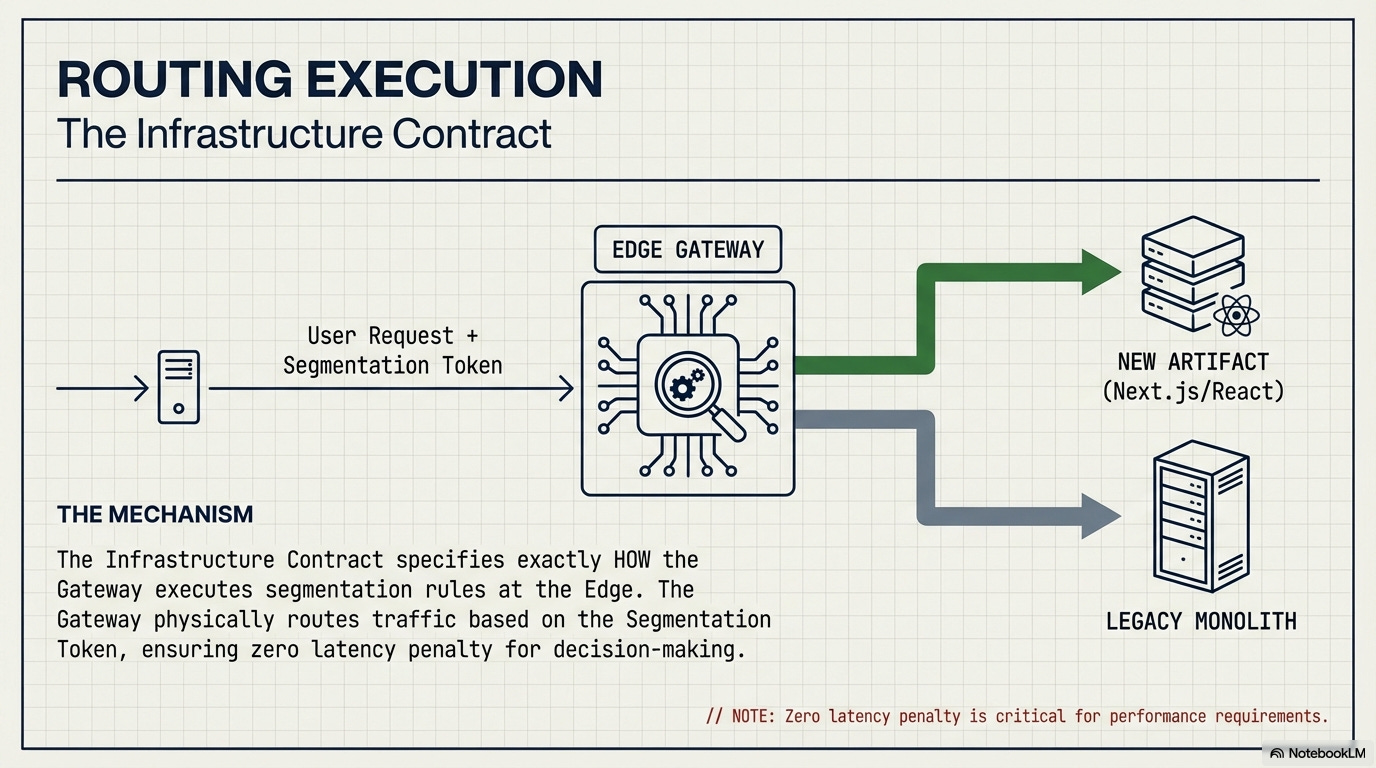

Routing Execution (Infrastructure Contract): The Infrastructure Contract specifies how the Gateway executes these rules at the Edge for maximum performance and security.

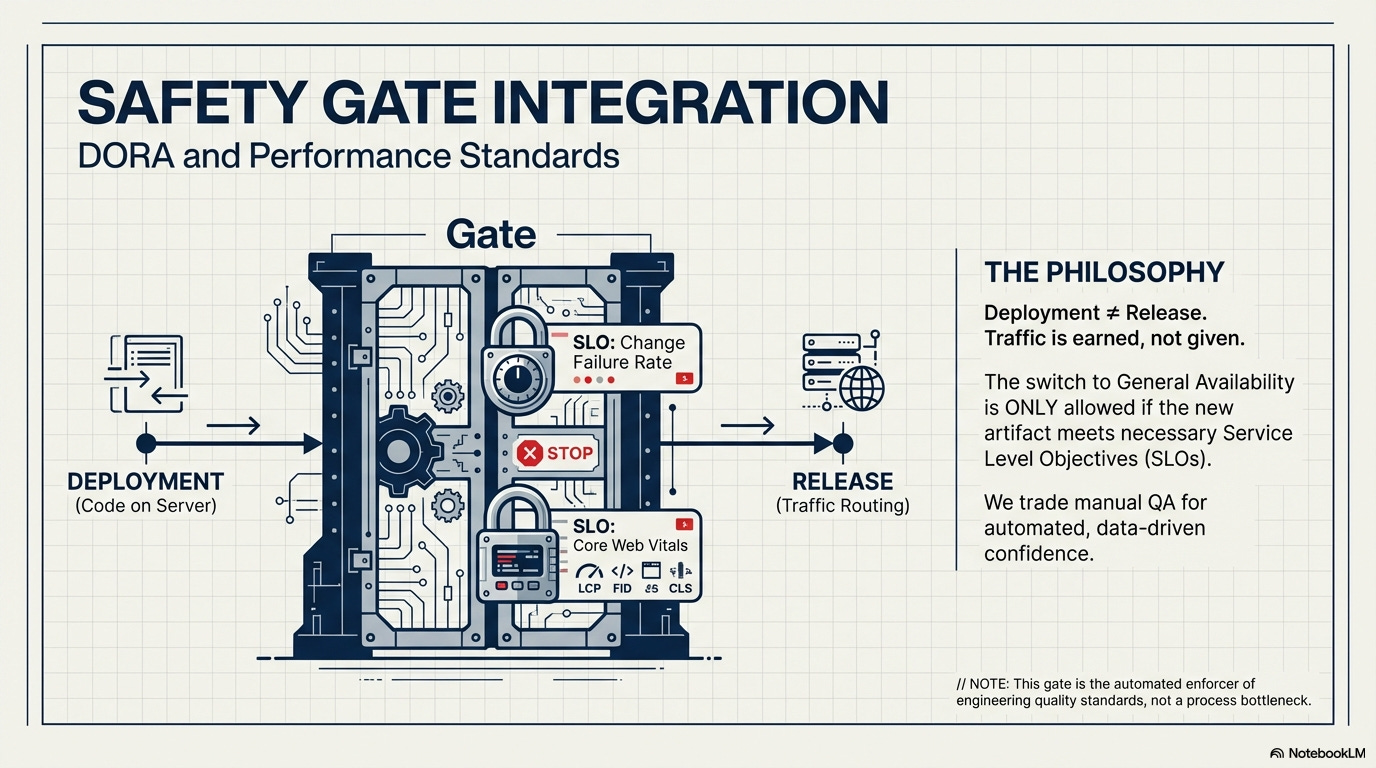
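Segmentation is usually evaluated at the edge with a deterministic hash, so a user stays in the same cohort on every request without server-side state. A minimal sketch, assuming a stable user identifier (for example from a cookie) and FNV-1a as the hash; `routeFor`, `SegmentRule`, and the experiment name are hypothetical, and a real edge function would call something like this from its request handler.

```typescript
// FNV-1a (32-bit): a small, fast, non-cryptographic hash. charCodeAt yields UTF-16 units,
// which equal bytes for the ASCII identifiers assumed here.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

interface SegmentRule {
  canaryPercent: number; // 0-100: share of eligible users routed to the new artifact
  countries?: string[]; // optional geo restriction
}

// The same user and experiment always land in the same bucket in [0, 100).
function bucketOf(userId: string, experiment: string): number {
  return fnv1a(`${experiment}:${userId}`) % 100;
}

function routeFor(userId: string, country: string, rule: SegmentRule, experiment: string): "new" | "legacy" {
  if (rule.countries !== undefined && !rule.countries.includes(country)) return "legacy";
  return bucketOf(userId, experiment) < rule.canaryPercent ? "new" : "legacy";
}

const rule: SegmentRule = { canaryPercent: 10, countries: ["DE", "NL"] };
console.log(routeFor("user-123", "DE", rule, "settings-migration"));
```

Salting the hash with the experiment name keeps cohorts independent across experiments, so the same users are not always the canaries.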

Safety Gate Integration (DORA and Performance): The switch to general availability is only allowed if the new artifact meets the necessary SLOs.

Change Failure Rate (CFR) Check (DORA Metric): If the new segment’s Error Rate exceeds the legacy app’s baseline, the rollout counts as a failed change and the Gateway is instructed to automatically switch 100% of the traffic back to the stable legacy monolith. This automatic rollback is the primary mechanism for keeping MTTR low.

Performance Gate (CWV Metric): The Core Web Vitals (CWV) SLO acts as a hard blocker. The new app’s LCP (as reported by RUM) must be equal to or better than the legacy app’s LCP before advancing traffic past the initial segment.
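The two gates can be combined into one decision evaluated on each observation window. A minimal sketch, assuming error rates and 75th-percentile LCP values (the percentile Google uses to assess Core Web Vitals) are already aggregated from RUM for both cohorts; `rolloutDecision` and the sample numbers are hypothetical.

```typescript
interface CohortMetrics {
  errorRate: number; // failed requests / total requests in the observation window
  lcpP75Ms: number; // 75th-percentile LCP from RUM, in milliseconds
}

type RolloutDecision = "rollback" | "hold" | "advance";

function rolloutDecision(candidate: CohortMetrics, legacy: CohortMetrics): RolloutDecision {
  // Error-rate regression: send 100% of traffic back to the legacy monolith.
  if (candidate.errorRate > legacy.errorRate) return "rollback";
  // CWV gate: LCP must be equal to or better (lower) than legacy before traffic advances.
  if (candidate.lcpP75Ms > legacy.lcpP75Ms) return "hold";
  return "advance";
}

const legacyMetrics: CohortMetrics = { errorRate: 0.004, lcpP75Ms: 2400 };
console.log(rolloutDecision({ errorRate: 0.003, lcpP75Ms: 2300 }, legacyMetrics)); // advance
```

A production version would also require a minimum sample size per window, since small canary cohorts make error rates noisy.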

4.3. Backend Contract and Decoupling (Strangler Safety) 🔗

Mandatory Adapter Contract: The Migration Map must explicitly note the existence of a mandatory Anti-Corruption Layer (ACL) or Adapter Contract between the new frontend artifacts and the legacy monolith’s APIs. This layer ensures the new frontend always receives the schema it expects, preventing failure due to backward-incompatible legacy API responses.

Rationale: Formalizes the decoupling of the new client from the old server and protects against inevitable schema drift in the monolith.

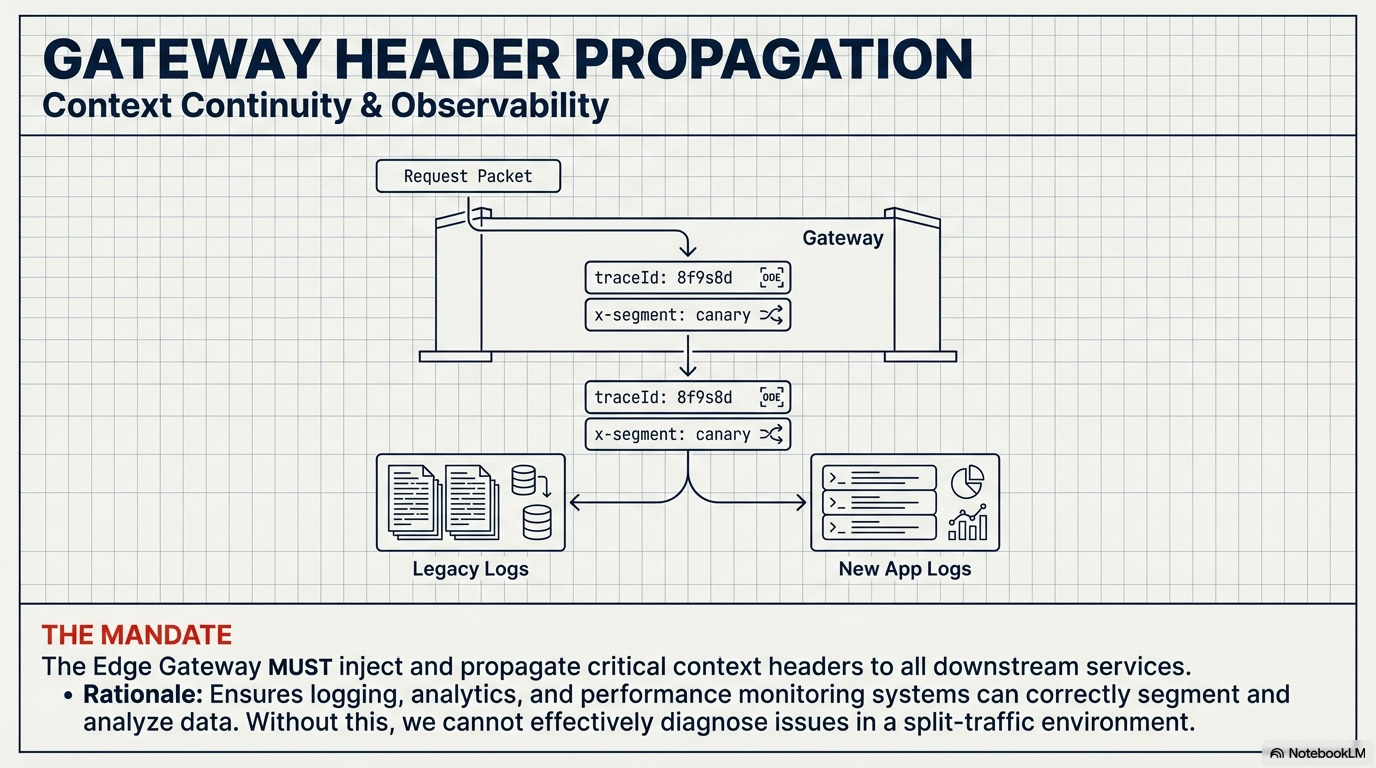
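At its core, an Adapter Contract is a translation function at the boundary. A minimal sketch, assuming a hypothetical legacy order payload that has drifted over time (two different total fields, single-letter status codes); `toOrder`, both interfaces, and all field names are illustrative.

```typescript
// What the legacy monolith returns today (assumed for illustration).
interface LegacyOrderResponse {
  order_id: number | string;
  total_cents?: number; // newer endpoints
  totalAmount?: string; // older endpoints send a decimal string such as "19.99"
  status_code: "N" | "P" | "S";
}

// The schema the new frontend is written against (Data Contract side).
interface Order {
  id: string;
  totalCents: number;
  status: "new" | "paid" | "shipped";
}

const STATUS_MAP: Record<LegacyOrderResponse["status_code"], Order["status"]> = {
  N: "new",
  P: "paid",
  S: "shipped",
};

function toOrder(raw: LegacyOrderResponse): Order {
  // Math.round absorbs binary floating-point error, e.g. 19.99 * 100 = 1998.9999999999998.
  const totalCents =
    raw.total_cents ??
    (raw.totalAmount !== undefined ? Math.round(Number(raw.totalAmount) * 100) : Number.NaN);
  // Fail loudly at the boundary instead of leaking NaN into the UI.
  if (!Number.isFinite(totalCents)) {
    throw new Error(`ACL: order ${raw.order_id} has no usable total`);
  }
  return { id: String(raw.order_id), totalCents, status: STATUS_MAP[raw.status_code] };
}

const order = toOrder({ order_id: 1042, totalAmount: "19.99", status_code: "P" });
console.log(order);
```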

Gateway Header Propagation Contract: The Edge Gateway must consistently inject and propagate critical context headers (like the User Segmentation Token and the mandated `traceId`) to all downstream services (new app and monolith alike).

Rationale: Ensures logging, analytics, and performance monitoring systems can correctly segment and analyze data based on the deployment version.
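The header contract can be sketched with the W3C Trace Context `traceparent` header, which OpenTelemetry uses by default: the gateway keeps the incoming trace id (or starts a new trace), issues a new parent id for its own hop, and attaches the segmentation token. The `x-user-segment` header name and `downstreamHeaders` are hypothetical, and `Math.random` stands in for the cryptographically strong generator a production gateway or OpenTelemetry SDK would use.

```typescript
// traceparent = "00" version - 32-hex trace-id - 16-hex parent-id - 2-hex flags (W3C Trace Context)
const TRACEPARENT = /^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;
const SEGMENT_HEADER = "x-user-segment"; // hypothetical name for the User Segmentation Token

function randomHex(length: number, random: () => number): string {
  let out = "";
  for (let i = 0; i < length; i++) out += Math.floor(random() * 16).toString(16);
  return out;
}

// Incoming header names are assumed to be lower-cased, as Fetch-style Headers objects provide.
function downstreamHeaders(
  incoming: Record<string, string | undefined>,
  segment: string,
  random: () => number = Math.random,
): Record<string, string> {
  const match = TRACEPARENT.exec(incoming["traceparent"] ?? "");
  // Keep the caller's trace id so the gateway, the new app, and the monolith share one trace.
  const traceId = match?.[1] ?? randomHex(32, random);
  const flags = match?.[3] ?? "01"; // "01" = sampled
  const parentId = randomHex(16, random); // this hop's own span id
  return { traceparent: `00-${traceId}-${parentId}-${flags}`, [SEGMENT_HEADER]: segment };
}

const forwarded = downstreamHeaders(
  { traceparent: "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01" },
  "canary",
);
console.log(forwarded);
```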

4.4. Client-Side Asset Management (Cache Control)

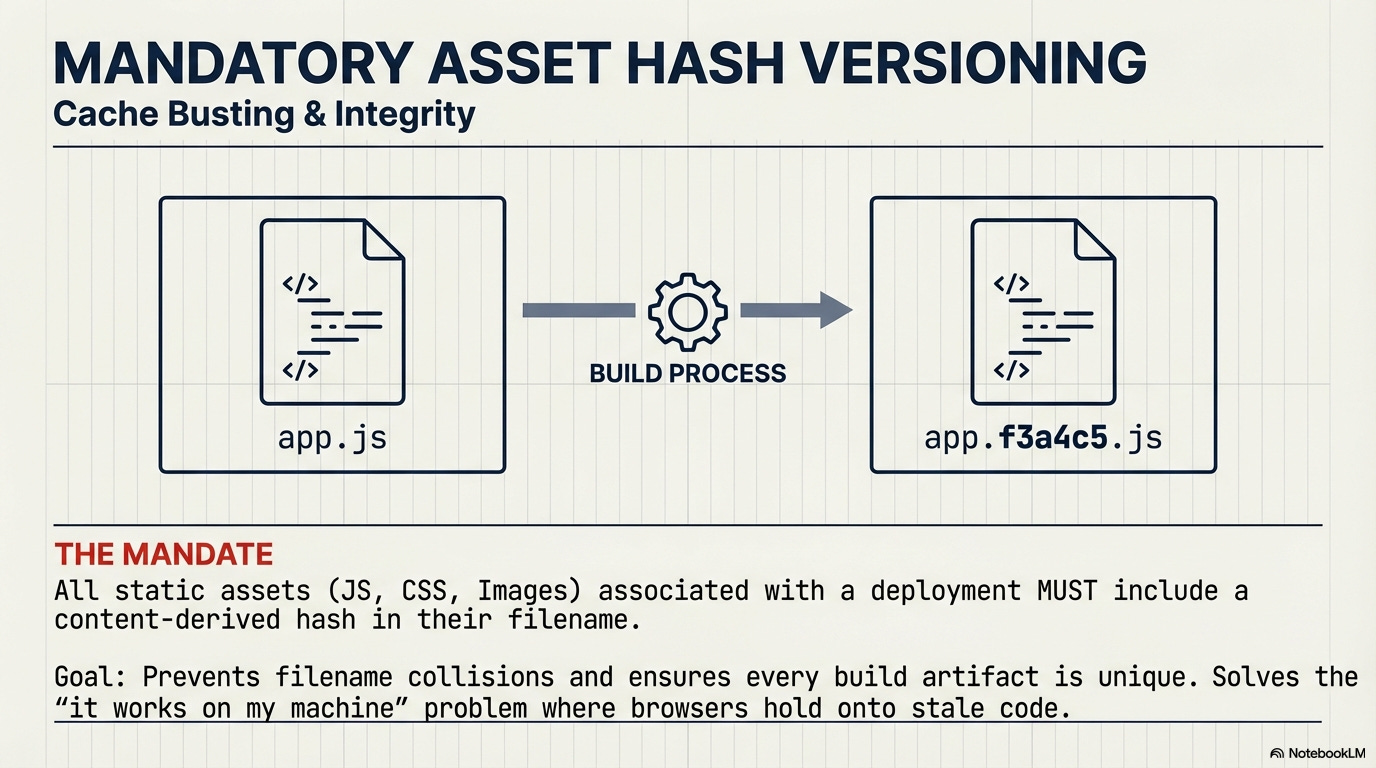

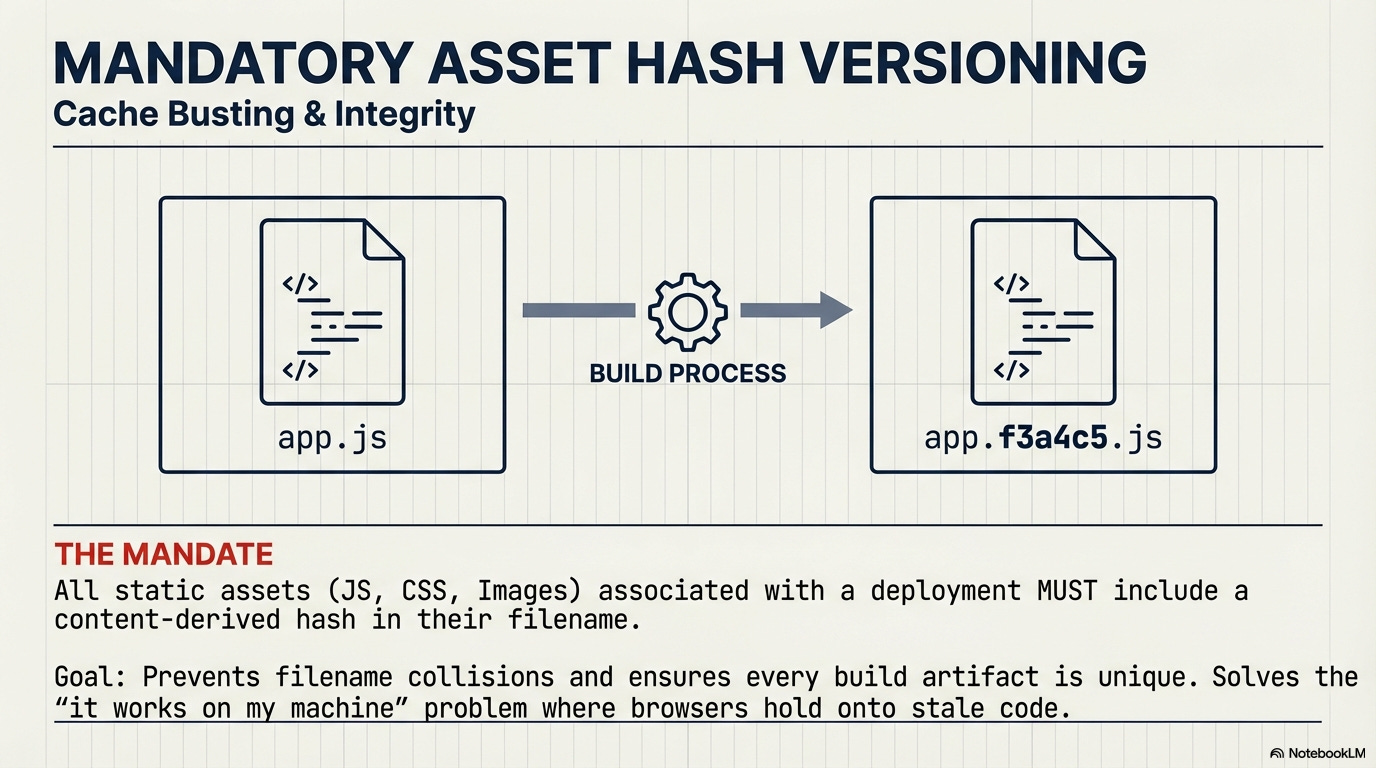

Mandatory Asset Hash Versioning and Cache Busting: All static assets (JS, CSS, images) associated with a deployment artifact must be served with a content-derived hash in their filename (e.g., `app.f3a4c5.js`).

Enforcement of Dual Caching Policy: The build system (CI) must enforce two policies simultaneously:

A revalidate-every-time policy for HTML/main entry points (`Cache-Control: no-cache`, which requires the browser to revalidate with the server before reusing a cached copy) to ensure the client always fetches the latest version of the asset manifest.

An immutable, long-term caching policy for all hashed assets (`Cache-Control: max-age=31536000, immutable`).

Rationale: This critical dual strategy prevents the client from experiencing mixed asset versions (e.g., new HTML trying to load old CSS from cache) when routing is switched by the Gateway.
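The dual policy reduces to a mapping from file path to `Cache-Control` value, plus a build check that every non-HTML asset is actually hashed. A minimal sketch; the hash pattern matches the `app.f3a4c5.js` example and is an assumption (bundlers differ in hash length, alphabet, and separator), and `cacheControlFor` and `unhashedAssets` are hypothetical names.

```typescript
// Matches content-hashed names like app.f3a4c5.js; adjust to your bundler's naming scheme.
const HASHED_ASSET = /\.[0-9a-f]{6,}\.(js|css|png|jpe?g|svg|webp|woff2)$/;

function cacheControlFor(path: string): string {
  // Entry points must always be revalidated so clients discover new asset hashes.
  if (path === "/" || path.endsWith(".html")) return "no-cache";
  // A hashed file's content never changes under the same name, so it can be cached for a year.
  if (HASHED_ASSET.test(path)) return "max-age=31536000, immutable";
  // Anything unhashed cannot safely be immutable; fall back to revalidation.
  return "no-cache";
}

// CI check: every emitted asset except HTML entry points must carry a content hash.
function unhashedAssets(files: string[]): string[] {
  return files.filter((file) => !file.endsWith(".html") && !HASHED_ASSET.test(file));
}

const emitted = ["index.html", "assets/app.f3a4c5.js", "assets/app.9b1e07.css", "assets/logo.png"];
console.log(emitted.map((file) => `${file} -> ${cacheControlFor(file)}`).join("\n"));
```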

4.5. Operational Safety and Rollback Guarantees 🚨

Formal Emergency Rollback Protocol: The infrastructure must guarantee that the Release Manager can trigger an immediate one-click traffic switch to the Last Known Good Artifact (LKGA), bypassing all SLO checks and automated pipelines.

Rationale: Provides a human-triggered safety net for unforeseen P0 scenarios, guaranteed by infrastructure, not just automation.

Feature Flag Integration for New Components: All net new features introduced within the scope of the Strangler artifact must be initially shielded by a Feature Flag controlled by the same configuration management system (3.3).

Rationale: Provides a second layer of defense. If a new feature causes a runtime error, the team can disable only that feature via the flag without rolling back the entire artifact, dramatically reducing the blast radius and MTTR.
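At the call site, shielding a net-new feature comes down to a default-off check against the flag snapshot served by the configuration system. A minimal sketch, assuming the flag values have already been fetched into a plain object; `isEnabled`, `withFlag`, and the flag name are hypothetical and not the API of LaunchDarkly, Split.io, or any other vendor.

```typescript
type FlagSnapshot = Record<string, boolean>;

// Default OFF: a missing or unknown flag must never expose an unfinished feature.
function isEnabled(flags: FlagSnapshot, name: string): boolean {
  return Object.prototype.hasOwnProperty.call(flags, name) && flags[name] === true;
}

// Runs the new code path only while the flag is on; switching the flag off
// restores the old path without redeploying the artifact.
function withFlag<T>(flags: FlagSnapshot, name: string, feature: () => T, fallback: () => T): T {
  return isEnabled(flags, name) ? feature() : fallback();
}

const checkoutButton = (flags: FlagSnapshot): string =>
  withFlag(flags, "checkout.one-click-pay", () => "one-click-pay", () => "classic-pay");

console.log(checkoutButton({ "checkout.one-click-pay": true })); // one-click-pay
console.log(checkoutButton({ "checkout.one-click-pay": false })); // classic-pay
console.log(checkoutButton({})); // classic-pay
```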

Chapter 5: The Manifesto Conclusion: Why We Must Govern the Hypercube

When you enforce the five Technical Contracts, implement the two Operational Contracts, manage their lifecycle, and leverage Monorepo tooling for rapid deployment, you have achieved:

High Performance (low latency, minimal JS payload).

Organizational Autonomy (decoupled teams, independent deployments).

High Velocity (fast, isolated deployments, Tooling SLOs enforced).

Fiscal Discipline (organizational debt quantified and budgeted).

You accomplished all this with a single, well-structured, fast application. What necessary technical problem is left for a Micro Frontend architecture to solve here? Absolutely none.

The Cross-Stack Mandate: Why Backend Must Comply

The Hypercube is an organizational and communication strategy first, and a technical enforcement mechanism second. While Sections V and VI focus on client-side implementation (Monorepo and Islands), the governance model is fundamentally cross-stack.

The Data Contract (3.1) and the Infrastructure Contract (3.4) are the primary boundary layers that unify the entire engineering organization. Whether the backend uses planetary-scale microservices, event-driven architecture, or strict Test-Driven Development (TDD) principles is an implementation detail for the backend team’s autonomy.

However, the backend’s externally facing commitments (adherence to the Data Quality SLOs, Latency SLOs, Distributed Tracing standards, and the Sunset of deprecated APIs) must be governed by the same organizational rules, ensuring complete cohesion from the database to the DOM. Organizational boundaries (Conway’s Law) and communication contracts must be synchronized across the entire system.

1. Visualizing the Impact

The impact of the Hypercube is the synergy created by the four axes interlocking to enforce the Seven Contracts within the high-performance Monorepo architecture. The result is a system that remains unified and fast while granting organizational independence.

The Visual Impact is the Shift from Fragmentation to Cohesion:

From Distributed Runtime (MFE) to Centralized Monorepo: The Monorepo becomes the physical vessel, hosting the high-performance Island Architecture (Section VI). All checks are performed at the safest, cheapest place: compile-time.

Contracts as the Glue: The seven contracts (Data, UI, Business, etc.) span the axes, translating the organizational ‘Why’ into the technical ‘What’ that the Monorepo enforces.

The Sunset Gate: The TTL Governance (Section 6.2.1) is the critical gate on the Contract Lifecycle axis, ensuring the system does not accumulate permanent debt and thereby sustaining the Runtime Efficacy axis (Cost and Performance).

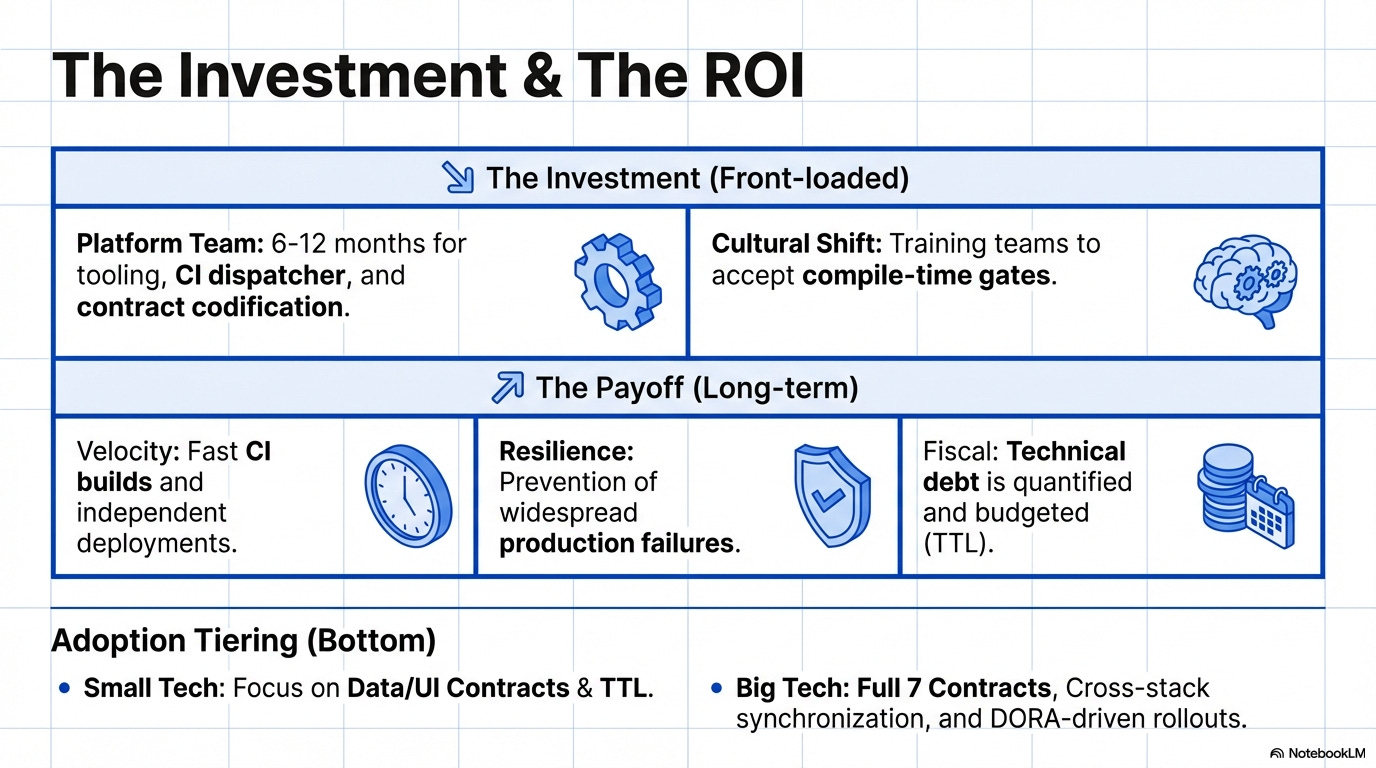

2. Cost and Time Assessment for Governance Implementation

The Hypercube is an investment in Developer Experience (DevEx) and Technical Resilience. The cost is front-loaded in engineering time, but the Return on Investment (ROI) is realized through the avoidance of much higher long-term operational costs.

2.1. Initial Investment (6–12 Months: Platform Team Effort)

Tooling & Infrastructure (Infra Contract)

Description: Implementing monorepo orchestration (Nx/Turborepo) with affected graph analysis.

Developing CI Dispatcher logic and automated dependency graph checks.

Setting up automated client/SDK generation via OpenAPI or GraphQL schemas.

Estimated Time: 4–6 Months.

Primary Cost Reduction / ROI: Drastic reduction in CI/CD execution time (Tooling SLO) and the elimination of manual, error-prone API integration.

Contract Codification (Data, UI, Quality)

Description: Establishing core standards: Design Tokens and the `CODEOWNERS` file.

Setting initial SLOs (LCP, Error Rate).

Deploying a mandatory standardized Error Reporting Utility.

Estimated Time: 3 Months.

Primary Cost Reduction / ROI: Total elimination of UI drift and a significant reduction in post-release defects (Defect Mgmt SLO).

Knowledge/Debt Governance (Operational Layers)

Description: Formalizing the Architecture Decision Record (ADR) process.

Integrating TTL/Sunset tracking into the build process to monitor Experimental and Adapter code.

Estimated Time: 2 Months.

Primary Cost Reduction / ROI: Prevents the accumulation of Shadow IT and provides a mechanism to quantify and manage technical debt expenses.

Cultural Shift (Management & Alignment)

Description: Team training and documentation for strict compile-time gates (e.g., CDCT).

Enforcing mandatory JSDoc/Documentation for all public-facing contracts.

Estimated Time: Ongoing (High Effort).

Primary Cost Reduction / ROI: Mitigates communication failures and strengthens team alignment by solving for Conway’s Law.

Cost: The primary cost is engineer salary for the dedicated Platform/DevEx team responsible for building the enforcement tools. This investment is non-negotiable for organizations above 50 engineers; without it, they are simply paying the tax of slow velocity, high incidents, and constant context fragmentation.

2.2. Long-Term Maintenance and ROI

Once established, the ongoing maintenance cost is relatively low (20% of the initial investment annually) and is absorbed by the platform team.

The continuous ROI is critical: The cost of implementing the Hypercube is demonstrably lower than the operational cost of not implementing it. The system prevents high-cost events like:

A major performance regression that impacts conversion (CWV SLO).

A widespread production failure due to an integration mismatch (CDCT failure).

Stagnant developer velocity due to a 45-minute CI build time (Tooling SLO).

3. Gradual Adoption Strategy by Company Size

The framework applies at every scale, but the initial steps should be tuned to each stage so that the smallest firms gain maximum velocity with minimal bureaucracy.

Small Tech (1–50 Engineers)

Core Focus: Velocity & Structure. Maximize iteration speed while preventing early-stage technical debt.

Mandatory Contracts:

Data Contract: Strict use of TypeScript and codegen for type safety.

UI Contract: Basic design tokens to maintain visual consistency.

Experimental Contract (TTL): A hard mandate for all POCs to ensure they are temporary.

Adoption Steps (6–12 Months):

Start ADRs: Document only high-impact architectural decisions.

Define 1 SLO: Focus purely on user-centric metrics like LCP (Largest Contentful Paint).

De-prioritize Fiscal Discipline: Avoid cost-tagging overhead until the product matures.

Medium Tech (100–1,000 Engineers)

Core Focus: Governance & Resilience. Prevent organizational friction and complexity drift from fracturing the system.

Mandatory Contracts:

The Full Seven: Implement all five technical and two operational contracts.

Tooling SLO: DevEx (Developer Experience) must be a P1 priority to maintain speed.

Knowledge Contract: Mandate ADRs (Architecture Decision Records) for every contract change.

Adoption Steps (6–12 Months):

Full CDCT: Achieve 100% Consumer-Driven Contract Testing (CDCT) coverage.

Implement Fiscal Discipline: Start mandatory cloud resource tagging for granular cost tracking.

Formalize Veto Authority: Define a “break-glass” P0 hotfix bypass process.

Big Tech (1,000+ Engineers)

Core Focus: Integration & Autonomy. Manage extreme scale, multi-vendor integration, and strict regulatory boundaries.

Mandatory Contracts: All contracts are strictly enforced globally across all business units.

Adoption Steps (6–12 Months):

Cross-Stack Synchronization: Align backend SLOs and tracing standards with frontend contracts.

MFE Governance: Use the Hypercube to govern the shared contract registry and observability for Micro-Frontends (MFEs).

Hardened CD Contract: Implement full DORA-driven rollouts and network segmentation for high-risk migrations.

The crucial takeaway is that the Experimental Contract (TTL) is essential at all scales, preventing temporary innovations from silently becoming permanent, unmanaged technical debt.

The pursuit of a near-instantaneous build is not about milliseconds of optimization; it is about eliminating cognitive load and organizational friction.

“The singular truth of Staff Engineering: Your greatest success is achieved when you are no longer needed.

A slow Monorepo demands constant maintenance, manual intervention, and bottlenecked Platform involvement. But when the Tooling Performance Contract is fully enforced—when the build graph is optimized, the cache hit rate is above 90%, and the CI feedback loop is near-instantaneous—you haven’t just optimized code. You have engineered away organizational friction.

You have reduced the team’s cognitive load so profoundly that they can work autonomously, without ever feeling the gravity of the shared platform. When the teams can move fast without needing to call you, you’ve aced the job.”

Stop fighting the Monolith and start mastering the Hypercube. Stop debugging the inevitable runtime failures of a fractured system, and start governing the boundaries of communication with cohesion and authority.

The Hypercube isn’t about code. It’s about creating a system where your engineers can finally sleep through the night, knowing their autonomy is guaranteed by contract, not by prayer.

Suggested Further Reading

This comprehensive list of resources provides the technical, organizational, and theoretical foundation for the concepts explored in The Governance Hypercube framework, complete with direct links for quick access.

I. Foundational Architectural and Organizational Theory

Conway, Melvin E. (1968). How Do Committees Invent? Datamation.

Reference Link: http://www.melconway.com/Home/Conways_Law.html

Context: The seminal paper that defines “Conway’s Law,” which is the organizational bedrock of the Governance Hypercube, explaining how communication structures determine system architecture.

Lewis, James & Fowler, Martin. (2014). Microservices: A Definition of This New Architectural Term. MartinFowler.com.

Reference Link: https://martinfowler.com/articles/microservices.html

Context: Establishes the core principles of independence and bounded context that frontend architecture attempts to emulate.

Jackson, Cam. (2019). Micro Frontends. MartinFowler.com.

Reference Link: https://martinfowler.com/articles/micro-frontends.html

Context: The industry-standard summary and definition of the Micro Frontend pattern, outlining the organizational drivers and common implementation styles.

Fowler, Martin. (2004). Strangler Fig Application (The Strangler Pattern). MartinFowler.com.

Reference Link: https://martinfowler.com/bliki/StranglerFigApplication.html

Context: Defines the architectural pattern for incrementally migrating a monolithic application by wrapping existing functionality with new microservices.

Nygard, Michael. (2011). Documenting Architecture Decisions. Cognitect Blog. Templates and tooling are collected by the ADR GitHub organization.

Reference Link: https://adr.github.io/

Context: The specification for formalizing Architectural Decision Records, which are critical documentation artifacts for the article’s Knowledge Contract.

II. Rendering, Performance, and Modern Patterns

Google. Core Web Vitals (CWV): Google’s Metrics for User Experience. web.dev.

Reference Link: https://web.dev/vitals/

Context: The official source for defining the performance metrics (LCP, INP, CLS) that serve as the fundamental Service Level Objectives (SLOs) for the Runtime Efficacy axis.

Google. Rendering on the Web. web.dev.

Reference Link: https://web.dev/rendering-on-the-web/

Context: Comprehensive explanation of various rendering strategies, including Server-Side Rendering (SSR), which provides the baseline for high-performance content delivery.

Miller, Jason. (2020). Islands Architecture. JasonFormat.com.

Reference Link: https://jasonformat.com/islands-architecture/

Context: Introduces the concept of Island Architecture, a high-performance strategy presented as a strong, non-runtime-composition alternative to traditional Micro Frontends.

III. Governance and Technical Contracts

Google Site Reliability Engineering (SRE) Book. Chapter 4: Service Level Objectives. O’Reilly Media.

Reference Link: https://sre.google/sre-book/service-level-objectives/

Context: Provides the methodology for defining and enforcing SLOs and Error Budgets, forming the foundation for the governance models in the article.

The Apache Software Foundation. Data Quality SLOs: Ensuring Data Reliability. Apache Iceberg Documentation.

Reference Link: https://iceberg.apache.org/docs/latest/data-quality-slo/

Context: Provides a dedicated framework for applying SRE principles to data integrity, directly supporting the enforcement of data freshness, accuracy, and completeness within the Data Contract.

Robinson, Ian. (2006). Consumer-Driven Contracts: A Service Evolution Pattern. MartinFowler.com.

Reference Link: https://martinfowler.com/articles/consumer-driven-contracts.html

Context: Explains the core testing methodology for verifying system integration points, directly supporting the enforcement of the article’s Data Contract.

OpenAPI Initiative. OpenAPI Specification (OAS): API Description Standard. Swagger.io.

Reference Link: https://swagger.io/specification/

Context: The standard for defining and documenting RESTful APIs, which is essential for codifying and validating the schema of the Data Contract.

Product School. The Anatomy of a Product Requirements Document (PRD). Product School Blog.

Reference Link: https://productschool.com/blog/product-management-2/product-requirements-document-prd-anatomy/

Context: Provides a canonical structure and best practices for creating a PRD, the key artifact used to formally document the objectives, features, and success criteria of the Business Contract.

Databricks. Time-To-Live (TTL) Governance. Databricks Glossary.

Reference Link: https://www.databricks.com/glossary/time-to-live-ttl

Context: Explains the technical mechanism and governance policy of Time-To-Live, which is crucial for managing data retention, caching strategies, and ensuring compliance within the Data and Runtime Efficacy Contracts.

IV. UI Structuring and Design Systems

Frost, Brad. Atomic Design Principles: Building Systems with Hierarchy. BradFrost.com.

Reference Link: https://bradfrost.com/blog/post/atomic-design/

Context: The methodology for structuring user interface components into a logical hierarchy (Atoms, Molecules, Organisms), which underpins the development of reusable design systems.

Salesforce. Design Tokens: Managing Design Decisions in Code. Lightning Design System.

Reference Link: https://www.lightningdesignsystem.com/design-tokens/

Context: Explains the practice of using tokenized variables for design properties (color, spacing, typography) to ensure stylistic consistency and support the enforcement of the article’s UI Contract.